Which of the following changes can the data scientist make to address the concern?

A data scientist is using Spark ML to engineer features for an exploratory machine learning project.

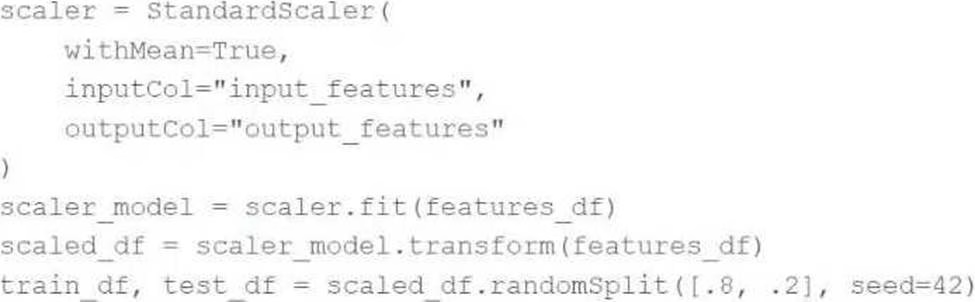

They decide they want to standardize their features using the following code block:

Upon code review, a colleague expressed concern with the features being standardized prior to splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?

A . Utilize the MinMaxScaler object to standardize the training data according to global minimum and maximum values

B . Utilize the MinMaxScaler object to standardize the test data according to global minimum and maximum values

C . Utilize a cross-validation process rather than a train-test split process to remove the need for standardizing data

D . Utilize the Pipeline API to standardize the training data according to the test data’s summary statistics

E . Utilize the Pipeline API to standardize the test data according to the training data’s summary statistics

Answer: E

Explanation:

To address the concern about standardizing features prior to splitting the data, the correct approach is to use the Pipeline API to ensure that only the training data’s summary statistics are used to standardize the test data. This is achieved by fitting the StandardScaler (or any scaler) on the training data and then transforming both the training and test data using the fitted scaler. This approach prevents information leakage from the test data into the model training process and ensures that the model is evaluated fairly.

Reference: Best Practices in Preprocessing in Spark ML (Handling Data Splits and Feature Standardization).

Latest Databricks Machine Learning Associate Dumps Valid Version with 74 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund