Which approach is the MOST APPROPRIATE for fine-tuning the pre-trained model with your custom dataset?

You are a data scientist at a marketing agency tasked with creating a sentiment analysis model to analyze customer reviews for a new product. The company wants to quickly deploy a solution with minimal training time and development effort. You decide to leverage a pre-trained natural language processing (NLP) model and fine-tune it using a custom dataset of labeled customer reviews. Your team has access to both Amazon Bedrock and SageMaker JumpStart.

Which approach is the MOST APPROPRIATE for fine-tuning the pre-trained model with your custom dataset?

A. Use SageMaker JumpStart to create a custom container for your pre-trained model and manually implement fine-tuning with TensorFlow

B. Use SageMaker JumpStart to deploy a pre-trained NLP model and use the built-in fine-tuning functionality with your custom dataset to create a customized sentiment analysis model

C. Use Amazon Bedrock to train a model from scratch using your custom dataset, as Bedrock is optimized for training large models efficiently

D. Use Amazon Bedrock to select a foundation model from a third-party provider, then fine-tune the model directly in the Bedrock interface using your custom dataset

Answer: B

Explanation:

Correct option:

Use SageMaker JumpStart to deploy a pre-trained NLP model and use the built-in fine-tuning functionality with your custom dataset to create a customized sentiment analysis model

Amazon Bedrock is the easiest way to build and scale generative AI applications with foundation models. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) from leading AI companies like AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with a broad set of capabilities you need to build generative AI applications with security, privacy, and responsible AI.

Amazon SageMaker JumpStart is a machine learning (ML) hub that can help you accelerate your ML journey. With SageMaker JumpStart, you can evaluate, compare, and select FMs quickly based on pre-defined quality and responsibility metrics to perform tasks like article summarization and image generation. SageMaker JumpStart provides managed infrastructure and tools to accelerate scalable, reliable, and secure model building, training, and deployment of ML models.

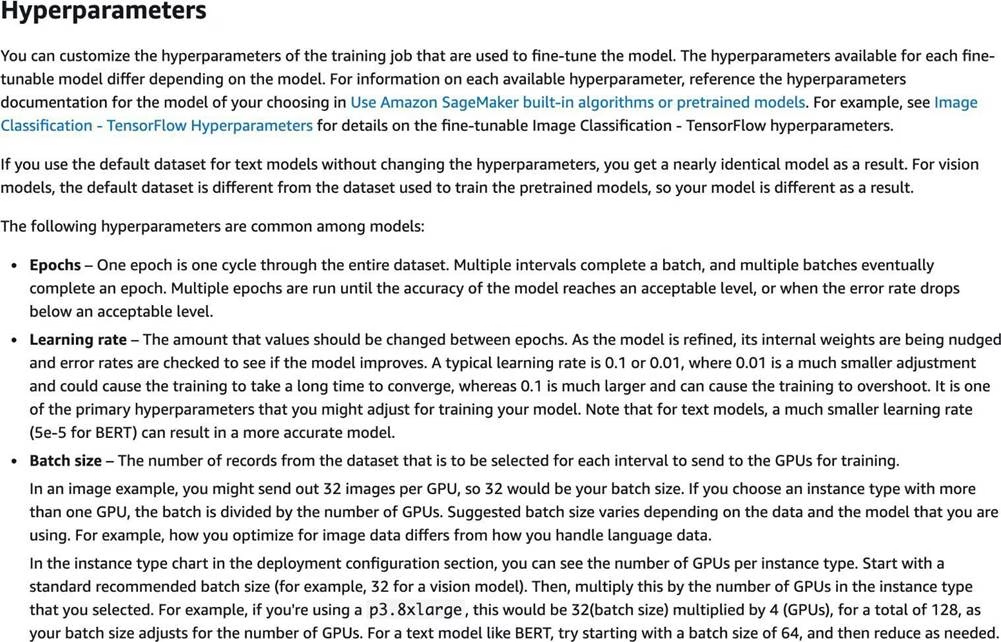

Fine-tuning continues training a pre-trained model on a new dataset instead of training from scratch. This process, a form of transfer learning, can produce accurate models with smaller datasets and less training time.

SageMaker JumpStart is specifically designed for scenarios like this, where you can quickly deploy a pre-trained model and fine-tune it using your custom dataset. This approach lets you build on existing NLP models, reducing both development time and the computational resources that training from scratch would require.

via – https://docs.aws.amazon.com/sagemaker/latest/dg/jumpstart-fine-tune.html
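As a rough illustration of what the built-in fine-tuning workflow can look like from the SageMaker Python SDK, here is a minimal sketch. It rests on several assumptions that are not part of the question: the label mapping and helper names are hypothetical, the model ID is only an example of a JumpStart text classification model, and the `data.csv` layout (integer label in the first column, review text in the second, no header) is the format commonly expected by JumpStart's TensorFlow text classification models. Check the documentation of the model you pick before relying on any of it.

```python
import csv
from pathlib import Path

# Hypothetical label mapping; adjust it to the classes in your own dataset.
LABELS = {"negative": 0, "positive": 1}


def write_training_csv(reviews, out_dir):
    """Write (sentiment, text) pairs to out_dir/data.csv as label,text rows.

    Assumed layout: no header row, integer class label first, text second.
    """
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / "data.csv"
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        for sentiment, text in reviews:
            writer.writerow([LABELS[sentiment], text])
    return path


def fine_tune_and_deploy(
    train_s3_uri,
    role_arn,
    # Example model ID (an assumption); browse JumpStart for current options.
    model_id="tensorflow-tc-bert-en-uncased-L-12-H-768-A-12-2",
):
    """Fine-tune a JumpStart model on data already uploaded to S3, then deploy it.

    Needs the sagemaker SDK and AWS credentials, and it incurs charges,
    so the import is kept local and nothing runs until this is called.
    """
    from sagemaker.jumpstart.estimator import JumpStartEstimator

    estimator = JumpStartEstimator(model_id=model_id, role=role_arn)
    estimator.fit({"training": train_s3_uri})  # built-in fine-tuning job
    return estimator.deploy()  # returns a predictor for the new endpoint
```

In this sketch you would call `write_training_csv` locally, upload the resulting directory to an S3 prefix, and pass that prefix and an IAM role ARN to `fine_tune_and_deploy`. No custom container or hand-written training loop is involved, which is the point of option B.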

Incorrect options:

Use Amazon Bedrock to select a foundation model from a third-party provider, then fine-tune the model directly in the Bedrock interface using your custom dataset – Amazon Bedrock provides access to foundation models from third-party providers, allowing for easy deployment and integration into applications. Bedrock does offer model customization, but only for a limited set of its foundation models, which are large generative models rather than task-specific classifiers. For a sentiment analysis task that must be delivered with minimal training time and effort, the pre-trained NLP models and built-in fine-tuning in SageMaker JumpStart are the better fit in this scenario.

Use Amazon Bedrock to train a model from scratch using your custom dataset, as Bedrock is optimized for training large models efficiently – Amazon Bedrock is not intended for training models from scratch, especially not for scenarios where fine-tuning a pre-trained model would be more efficient. Bedrock is optimized for deploying and scaling foundation models, not for raw model training.

Use SageMaker JumpStart to create a custom container for your pre-trained model and manually implement fine-tuning with TensorFlow – While it’s possible to create a custom container and manually fine-tune a model, SageMaker JumpStart already offers an integrated solution for fine-tuning pre-trained models without the need for custom containers or manual implementation. This makes it a more efficient and straightforward option for the task at hand.

Reference: https://docs.aws.amazon.com/sagemaker/latest/dg/jumpstart-fine-tune.html

