Snowflake ARA-C01 SnowPro Advanced Architect Certification Online Training

The questions for ARA-C01 were last updated on Mar 28, 2025.

- Exam Code: ARA-C01

- Exam Name: SnowPro Advanced Architect Certification

- Certification Provider: Snowflake

- Latest update: Mar 28, 2025

A Data Engineer is designing a near real-time ingestion pipeline for a retail company to ingest event logs into Snowflake to derive insights. A Snowflake Architect is asked to define security best practices to configure access control privileges for the data load for auto-ingest to Snowpipe.

What are the MINIMUM object privileges required for the Snowpipe user to execute Snowpipe?

- A . OWNERSHIP on the named pipe, USAGE on the named stage, target database, and schema, and INSERT and SELECT on the target table

- B . OWNERSHIP on the named pipe, USAGE and READ on the named stage, USAGE on the target database and schema, and INSERT and SELECT on the target table

- C . CREATE on the named pipe, USAGE and READ on the named stage, USAGE on the target database and schema, and INSERT and SELECT on the target table

- D . USAGE on the named pipe, named stage, target database, and schema, and INSERT and SELECT on the target table

The IT Security team has identified an ongoing credential stuffing attack on many of the organization's systems.

What is the BEST way to find recent and ongoing login attempts to Snowflake?

- A . Call the LOGIN_HISTORY Information Schema table function.

- B . Query the LOGIN_HISTORY view in the ACCOUNT_USAGE schema in the SNOWFLAKE database.

- C . View the History tab in the Snowflake UI and set up a filter for SQL text that contains the text "LOGIN".

- D . View the Users section in the Account tab in the Snowflake UI and review the last login column.

An Architect has a VPN_ACCESS_LOGS table in the SECURITY_LOGS schema containing timestamps of the connection and disconnection, username of the user, and summary statistics.

What should the Architect do to enable the Snowflake search optimization service on this table?

- A . Assume role with OWNERSHIP on future tables and ADD SEARCH OPTIMIZATION on the SECURITY_LOGS schema.

- B . Assume role with ALL PRIVILEGES including ADD SEARCH OPTIMIZATION in the SECURITY_LOGS schema.

- C . Assume role with OWNERSHIP on VPN_ACCESS_LOGS and ADD SEARCH OPTIMIZATION in the SECURITY_LOGS schema.

- D . Assume role with ALL PRIVILEGES on VPN_ACCESS_LOGS and ADD SEARCH OPTIMIZATION in the SECURITY_LOGS schema.

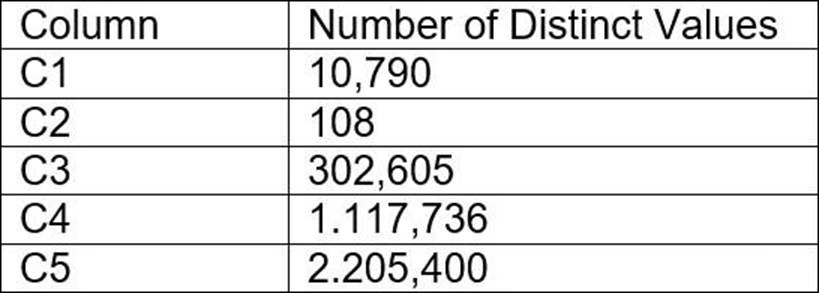

A table contains five columns and it has millions of records.

The cardinality distribution of the columns is shown below:

Columns C4 and C5 are mostly used by SELECT queries in the GROUP BY and ORDER BY clauses, whereas columns C1, C2, and C3 are heavily used in filter and join conditions of SELECT queries.

The Architect must design a clustering key for this table to improve the query performance.

Based on Snowflake recommendations, how should the clustering key columns be ordered while defining the multi-column clustering key?

- A . C5, C4, C2

- B . C3, C4, C5

- C . C1, C3, C2

- D . C2, C1, C3

Which security, governance, and data protection features require, at a MINIMUM, the Business Critical edition of Snowflake? (Choose two.)

- A . Extended Time Travel (up to 90 days)

- B . Customer-managed encryption keys through Tri-Secret Secure

- C . Periodic rekeying of encrypted data

- D . AWS, Azure, or Google Cloud private connectivity to Snowflake

- E . Federated authentication and SSO

A company wants to deploy its Snowflake accounts inside its corporate network with no visibility on the internet. The company is using a VPN infrastructure and Virtual Desktop Infrastructure (VDI) for its Snowflake users. The company also wants to re-use the login credentials set up for the VDI to eliminate redundancy when managing logins.

What Snowflake functionality should be used to meet these requirements? (Choose two.)

- A . Set up replication to allow users to connect from outside the company VPN.

- B . Provision a unique company Tri-Secret Secure key.

- C . Use private connectivity from a cloud provider.

- D . Set up SSO for federated authentication.

- E . Use a proxy Snowflake account outside the VPN, enabling client redirect for user logins.

How do Snowflake databases that are created from shares differ from standard databases that are not created from shares? (Choose three.)

- A . Shared databases are read-only.

- B . Shared databases must be refreshed in order for new data to be visible.

- C . Shared databases cannot be cloned.

- D . Shared databases are not supported by Time Travel.

- E . Shared databases will have the PUBLIC or INFORMATION_SCHEMA schemas without explicitly granting these schemas to the share.

- F . Shared databases can also be created as transient databases.

What integration object should be used to place restrictions on where data may be exported?

- A . Stage integration

- B . Security integration

- C . Storage integration

- D . API integration

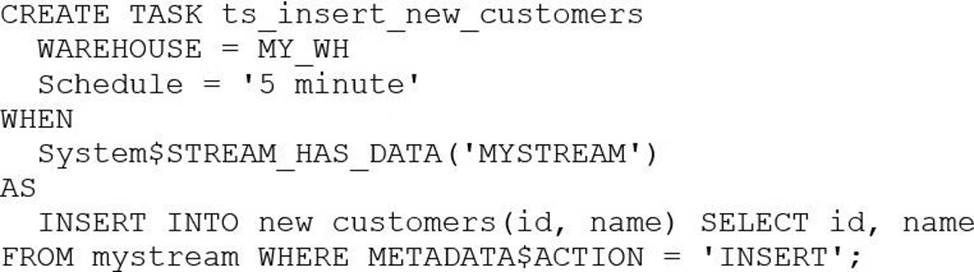

The following DDL command was used to create a task based on a stream:

Assuming MY_WH is set to AUTO_SUSPEND = 60 and used exclusively for this task, which statement is true?

- A . The warehouse MY_WH will be made active every five minutes to check the stream.

- B . The warehouse MY_WH will only be active when there are results in the stream.

- C . The warehouse MY_WH will never suspend.

- D . The warehouse MY_WH will automatically resize to accommodate the size of the stream.

What is a characteristic of loading data into Snowflake using the Snowflake Connector for Kafka?

- A . The Connector only works in Snowflake regions that use AWS infrastructure.

- B . The Connector works with all file formats, including text, JSON, Avro, ORC, Parquet, and XML.

- C . The Connector creates and manages its own stage, file format, and pipe objects.

- D . Loads using the Connector will have lower latency than Snowpipe and will ingest data in real time.
