Topic 1, Litware, Inc.

Case Study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

Litware, Inc. is a United States-based grocery retailer. Litware has a main office and a primary datacenter in Seattle. The company has 50 retail stores across the United States and an emerging online presence. Each store connects directly to the internet.

Existing environment. Cloud and Data Service Environments.

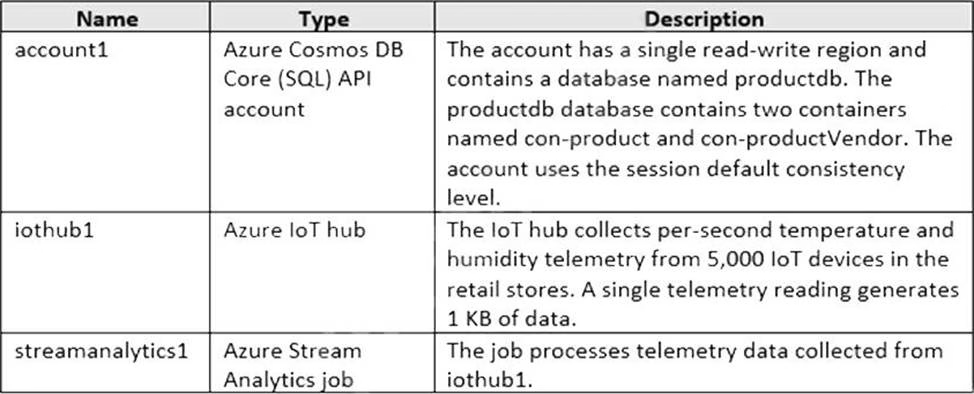

Litware has an Azure subscription that contains the resources shown in the following table.

Each container in productdb is configured for manual throughput.

The con-product container stores the company’s product catalog data. Each document in con-product includes a con-productvendor value. Most queries targeting the data in con-product are in the following format.

SELECT * FROM con-product p WHERE p.con-productVendor = 'name'

Most queries targeting the data in the con-productVendor container are in the following format:

SELECT * FROM con-productVendor pv

ORDER BY pv.creditRating, pv.yearFounded

Existing environment. Current Problems.

Litware identifies the following issues:

Updates to product categories in the con-productVendor container do not propagate automatically to documents in the con-product container.

Application updates in con-product frequently cause HTTP status code 429 "Too many requests". You discover that the 429 status code relates to excessive request unit (RU) consumption during the updates.

Requirements. Planned Changes

Litware plans to implement a new Azure Cosmos DB Core (SQL) API account named account2 that will contain a database named iotdb. The iotdb database will contain two containers named con-iot1 and con-iot2.

Litware plans to make the following changes:

Store the telemetry data in account2.

Configure account1 to support multiple read-write regions.

Implement referential integrity for the con-product container.

Use Azure Functions to send notifications about product updates to different recipients.

Develop an app named App1 that will run from all locations and query the data in account1.

Develop an app named App2 that will run from the retail stores and query the data in account2. App2 must be limited to a single DNS endpoint when accessing account2.

Requirements. Business Requirements

Litware identifies the following business requirements:

Whenever there are multiple solutions for a requirement, select the solution that provides the best performance, as long as there are no additional costs associated.

Ensure that Azure Cosmos DB costs for IoT-related processing are predictable.

Minimize the number of firewall changes in the retail stores.

Requirements. Product Catalog Requirements

Litware identifies the following requirements for the product catalog:

Implement a custom conflict resolution policy for the product catalog data.

Minimize the frequency of errors during updates of the con-product container.

Once multi-region writes are configured, maximize the performance of App1 queries against the data in account1.

Trigger the execution of two Azure functions following every update to any document in the con-product container.

You are troubleshooting the current issues caused by the application updates.

Which action can address the application updates issue without affecting the functionality of the application?

- A . Enable time to live for the con-product container.

- B . Set the default consistency level of account1 to strong.

- C . Set the default consistency level of account1 to bounded staleness.

- D . Add a custom indexing policy to the con-product container.

C

Explanation:

Bounded staleness is frequently chosen by globally distributed applications that expect low write latencies but require total global order guarantee. Bounded staleness is great for applications featuring group collaboration and sharing, stock ticker, publish-subscribe/queueing etc.

Scenario: Application updates in con-product frequently cause HTTP status code 429 "Too many requests". You discover that the 429 status code relates to excessive request unit (RU) consumption during the updates.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

You need to select the partition key for con-iot1. The solution must meet the IoT telemetry requirements.

What should you select?

- A . the timestamp

- B . the humidity

- C . the temperature

- D . the device ID

D

Explanation:

The partition key is what will determine how data is routed in the various partitions by Cosmos DB and needs to make sense in the context of your specific scenario. The IoT Device ID is generally the "natural" partition key for IoT applications.

Scenario: The iotdb database will contain two containers named con-iot1 and con-iot2.

Ensure that Azure Cosmos DB costs for IoT-related processing are predictable.

Reference: https://docs.microsoft.com/en-us/azure/architecture/solution-ideas/articles/iot-using-cosmos-db

You need to identify which connectivity mode to use when implementing App2. The solution must support the planned changes and meet the business requirements.

Which connectivity mode should you identify?

- A . Direct mode over HTTPS

- B . Gateway mode (using HTTPS)

- C . Direct mode over TCP

C

Explanation:

Scenario: Develop an app named App2 that will run from the retail stores and query the data in account2. App2 must be limited to a single DNS endpoint when accessing account2.

By using Azure Private Link, you can connect to an Azure Cosmos account via a private endpoint. The private endpoint is a set of private IP addresses in a subnet within your virtual network.

When you’re using Private Link with an Azure Cosmos account through a direct mode connection, you can use only the TCP protocol. The HTTP protocol is not currently supported.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-configure-private-endpoints

You configure multi-region writes for account1.

You need to ensure that App1 supports the new configuration for account1. The solution must meet the business requirements and the product catalog requirements.

What should you do?

- A . Set the default consistency level of account1 to bounded staleness.

- B . Create a private endpoint connection.

- C . Modify the connection policy of App1.

- D . Increase the number of request units per second (RU/s) allocated to the con-product and con-productVendor containers.

D

Explanation:

App1 queries the con-product and con-productVendor containers.

Note: Request unit is a performance currency abstracting the system resources such as CPU, IOPS, and memory that are required to perform the database operations supported by Azure Cosmos DB.

Scenario:

Develop an app named App1 that will run from all locations and query the data in account1.

Once multi-region writes are configured, maximize the performance of App1 queries against the data in account1.

Whenever there are multiple solutions for a requirement, select the solution that provides the best performance, as long as there are no additional costs associated.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels

You need to provide a solution for the Azure Functions notifications following updates to con-product. The solution must meet the business requirements and the product catalog requirements.

Which two actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Configure the trigger for each function to use a different leaseCollectionPrefix

- B . Configure the trigger for each function to use the same leaseCollectionName

- C . Configure the trigger for each function to use a different leaseCollectionName

- D . Configure the trigger for each function to use the same leaseCollectionPrefix

A, C

Explanation:

leaseCollectionPrefix: when set, the value is added as a prefix to the leases created in the Lease collection for this Function. Using a prefix allows two separate Azure Functions to share the same Lease collection by using different prefixes.

Scenario: Use Azure Functions to send notifications about product updates to different recipients. Trigger the execution of two Azure functions following every update to any document in the con-product container.

Reference: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb-v2-trigger
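
The effect of the lease prefix can be illustrated with a small plain-Python model. This is only a sketch and does not use the Azure Functions runtime: LeaseContainer, poll_changes, and the lease id layout (prefix followed by a partition id) are hypothetical names chosen for illustration.

```python
from collections import defaultdict


class LeaseContainer:
    """Toy stand-in for a lease container: maps lease id -> checkpoint."""

    def __init__(self):
        self.checkpoints = defaultdict(int)


def poll_changes(leases, lease_prefix, partition_id, change_feed):
    """Return changes not yet seen under this prefix, then advance the checkpoint."""
    lease_id = f"{lease_prefix}{partition_id}"  # hypothetical id layout
    start = leases.checkpoints[lease_id]
    leases.checkpoints[lease_id] = len(change_feed)
    return change_feed[start:]


feed = ["update-1", "update-2"]

# Different prefixes on one shared lease container:
# each function keeps its own checkpoint and sees every update.
shared = LeaseContainer()
email_batch = poll_changes(shared, "email-", "0", feed)
sms_batch = poll_changes(shared, "sms-", "0", feed)

# Same prefix: the second caller finds the checkpoint already advanced.
clash = LeaseContainer()
first = poll_changes(clash, "notify-", "0", feed)
second = poll_changes(clash, "notify-", "0", feed)
```

In this model, two functions that share a prefix compete for the same checkpoint, so only one of them receives a given update.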

You need to implement a solution to meet the product catalog requirements.

What should you do to implement the conflict resolution policy?

- A . Remove frequently changed fields from the index policy of the con-product container.

- B . Disable indexing on all fields in the index policy of the con-product container.

- C . Set the default consistency level for account1 to eventual.

- D . Create a new container and migrate the product catalog data to the new container.

HOTSPOT

You need to recommend indexes for con-product and con-productVendor. The solution must meet the product catalog requirements and the business requirements.

Which type of index should you recommend for each container? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

HOTSPOT

You need to select the capacity mode and scale configuration for account2 to support the planned changes and meet the business requirements.

What should you select? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Topic 2, Misc. Questions

You have an application named App1 that reads the data in an Azure Cosmos DB Core (SQL) API account. App1 runs the same read queries every minute. The default consistency level for the account is set to eventual.

You discover that every query consumes request units (RUs) instead of using the cache.

You verify the IntegratedCacheItemHitRate metric and the IntegratedCacheQueryHitRate metric.

Both metrics have values of 0.

You verify that the dedicated gateway cluster is provisioned and used in the connection string.

You need to ensure that App1 uses the Azure Cosmos DB integrated cache.

What should you configure?

- A . the indexing policy of the Azure Cosmos DB container

- B . the consistency level of the requests from App1

- C . the connectivity mode of the App1 CosmosClient

- D . the default consistency level of the Azure Cosmos DB account

C

Explanation:

Because the integrated cache is specific to your Azure Cosmos DB account and requires significant CPU and memory, it requires a dedicated gateway node. Connect to Azure Cosmos DB using gateway mode.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/integrated-cache-faq

HOTSPOT

You provision Azure resources by using the following Azure Resource Manager (ARM) template.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: No

An alert is triggered when the DB key is regenerated, not when it is used.

Note: The az cosmosdb keys regenerate command regenerates an access key for an Azure Cosmos DB database account.

Box 2: No

Only an SMS action will be taken.

The emailReceivers list is empty, so no email action is taken.

Box 3: Yes

Yes, an alert is triggered when the DB key is regenerated.

Reference: https://docs.microsoft.com/en-us/cli/azure/cosmosdb/keys

HOTSPOT

You have an Azure Cosmos DB Core (SQL) API account named account1 that has the disableKeyBasedMetadataWriteAccess property enabled.

You are developing an app named App1 that will be used by a user named DevUser1 to create containers in account1. DevUser1 has a non-privileged user account in the Azure Active Directory (Azure AD) tenant.

You need to ensure that DevUser1 can use App1 to create containers in account1.

What should you do? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Resource tokens

Resource tokens provide access to the application resources within a database. Resource tokens: Provide access to specific containers, partition keys, documents, attachments, stored procedures, triggers, and UDFs.

Box 2: Azure Resource Manager API

You can use Azure Resource Manager to help deploy and manage your Azure Cosmos DB accounts, databases, and containers.

Incorrect Answers:

The Microsoft Graph API is a RESTful web API that enables you to access Microsoft Cloud service resources.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/secure-access-to-data

https://docs.microsoft.com/en-us/rest/api/resources/

HOTSPOT

You have an Azure Cosmos DB Core (SQL) account that has a single write region in West Europe. You run the following Azure CLI script.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Yes

The Automatic failover option allows Azure Cosmos DB to failover to the region with the highest failover priority with no user action should a region become unavailable.

Box 2: No

West Europe is used for failover. Only North Europe is writable. To configure multi-region writes, set UseMultipleWriteLocations to true.

Box 3: Yes

Provisioned throughput with a single write region costs $0.008/hour per 100 RU/s, and provisioned throughput with multiple writable regions costs $0.016/hour per 100 RU/s.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-multi-master

https://docs.microsoft.com/en-us/azure/cosmos-db/optimize-cost-regions
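
The price difference can be worked through as simple arithmetic. The sketch below uses the two rates quoted in the explanation, which may not match current pricing. It also assumes that provisioned throughput is billed once per region, so the regions parameter is an illustrative assumption, and hourly_cost is a hypothetical helper name.

```python
# Hourly rates per 100 RU/s, taken from the explanation above (may be outdated).
SINGLE_WRITE_RATE = 0.008
MULTI_WRITE_RATE = 0.016


def hourly_cost(ru_per_second, regions, multi_region_writes):
    """Estimate the hourly throughput cost in USD.

    Assumes throughput is billed once per region the account is replicated to.
    """
    rate = MULTI_WRITE_RATE if multi_region_writes else SINGLE_WRITE_RATE
    return (ru_per_second / 100) * rate * regions


# 1,000 RU/s replicated to two regions, before and after enabling multi-region writes.
single = hourly_cost(1000, regions=2, multi_region_writes=False)
multi = hourly_cost(1000, regions=2, multi_region_writes=True)
```

Under these assumptions, enabling multi-region writes doubles the throughput charge for the same RU/s and region count.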

You are developing an application that will use an Azure Cosmos DB Core (SQL) API account as a data source.

You need to create a report that displays the top five most ordered fruits as shown in the following table.

A collection that contains aggregated data already exists. The following is a sample document:

{

"name": "apple",

"type": ["fruit", "exotic"],

"orders": 10000

}

Which two queries can you use to retrieve data for the report? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

B, D

Explanation:

ARRAY_CONTAINS returns a Boolean indicating whether the array contains the specified value. You can check for a partial or full match of an object by using a boolean expression within the command.

Incorrect Answers:

A: Default sorting ordering is Ascending. Must use Descending order.

C: Order on Orders not on Type.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-array-contains
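
The answer options are not reproduced in the text, so the sketch below only mirrors the logic a correct query needs, in plain Python: keep documents whose type array contains "fruit", sort by orders in descending order, and take the first five. Apart from the apple document, the sample data is invented for illustration, and top_ordered is a hypothetical helper.

```python
def top_ordered(documents, category, limit=5):
    """Return (name, orders) for the most ordered items in a category."""
    # Equivalent in spirit to ARRAY_CONTAINS(c.type, category).
    matching = [doc for doc in documents if category in doc["type"]]
    # Descending order is essential; ascending would return the least ordered items.
    matching.sort(key=lambda doc: doc["orders"], reverse=True)
    return [(doc["name"], doc["orders"]) for doc in matching[:limit]]


sample = [
    {"name": "apple", "type": ["fruit", "exotic"], "orders": 10000},
    {"name": "carrot", "type": ["vegetable"], "orders": 50000},  # hypothetical
    {"name": "banana", "type": ["fruit"], "orders": 12000},      # hypothetical
    {"name": "kiwi", "type": ["fruit", "exotic"], "orders": 300},  # hypothetical
]

report = top_ordered(sample, "fruit")
```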

HOTSPOT

You have a database in an Azure Cosmos DB Core (SQL) API account.

You plan to create a container that will store employee data for 5,000 small businesses. Each business will have up to 25 employees. Each employee item will have an emailAddress value. You need to ensure that the emailAddress value for each employee within the same company is unique.

To what should you set the partition key and the unique key? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: CompanyID

After you create a container with a unique key policy, the creation of a new or an update of an existing item resulting in a duplicate within a logical partition is prevented, as specified by the unique key constraint. The partition key combined with the unique key guarantees the uniqueness of an item within the scope of the container.

For example, consider an Azure Cosmos container with Email address as the unique key constraint and CompanyID as the partition key. When you configure the user’s email address with a unique key, each item has a unique email address within a given CompanyID. Two items can’t be created with duplicate email addresses and with the same partition key value.

Box 2: emailAddress

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/unique-keys
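
The scoping rule, where uniqueness is enforced per logical partition and not across the whole container, can be modeled in a few lines of plain Python. This is a sketch of the behavior only. EmployeeContainer and UniqueKeyViolation are hypothetical names, and the property names companyId and emailAddress are assumed from the explanation.

```python
class UniqueKeyViolation(Exception):
    """Raised when a unique key value repeats inside one logical partition."""


class EmployeeContainer:
    """Toy container: partition key = companyId, unique key = emailAddress."""

    def __init__(self):
        self._emails_by_company = {}

    def create_item(self, item):
        # The unique key is checked only against items in the same partition.
        seen = self._emails_by_company.setdefault(item["companyId"], set())
        if item["emailAddress"] in seen:
            raise UniqueKeyViolation(item["emailAddress"])
        seen.add(item["emailAddress"])


container = EmployeeContainer()
container.create_item({"companyId": "c1", "emailAddress": "ann@example.com"})
# Same address in a different company is allowed.
container.create_item({"companyId": "c2", "emailAddress": "ann@example.com"})

try:
    container.create_item({"companyId": "c1", "emailAddress": "ann@example.com"})
    duplicate_rejected = False
except UniqueKeyViolation:
    duplicate_rejected = True
```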

HOTSPOT

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account. The container1 container has 120 GB of data.

The following is a sample of a document in container1.

The orderId property is used as the partition key.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Yes

Records with different OrderIDs will match.

Box 2: Yes

Records with different OrderIDs will match.

Box 3: No

Only records with one specific OrderId will match

You are designing an Azure Cosmos DB Core (SQL) API solution to store data from IoT devices. Writes from the devices will occur every second.

The following is a sample of the data.

You need to select a partition key that meets the following requirements for writes:

– Minimizes the partition skew

– Avoids capacity limits

– Avoids hot partitions

What should you do?

- A . Use timestamp as the partition key.

- B . Create a new synthetic key that contains deviceId and sensor1Value.

- C . Create a new synthetic key that contains deviceId and deviceManufacturer.

- D . Create a new synthetic key that contains deviceId and a random number.

D

Explanation:

Use a partition key with a random suffix. One way to distribute the workload more evenly is to append a random number at the end of the partition key value. When you distribute items in this way, you can perform parallel write operations across partitions.

Incorrect Answers:

A: Partitioning the data on "DateTime" creates a hot partition. If the data is partitioned on time, then for a given minute, all the calls hit one partition. Retrieving the data for a single customer then becomes a fan-out query, because the data may be distributed across all the partitions.

B: sensor1Value has only two values.

C: All the devices could have the same manufacturer.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/synthetic-partition-keys
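
A minimal sketch of the random-suffix technique follows, in plain Python with no SDK calls. The helper synthetic_key, the suffix count of 10, and the device id "device-001" are illustrative assumptions, not values from the question.

```python
import random
from collections import Counter


def synthetic_key(device_id, suffix_count, rng):
    """Build a partition key value such as 'device-001-7'."""
    return f"{device_id}-{rng.randrange(suffix_count)}"


# A seeded generator keeps the demonstration repeatable.
rng = random.Random(42)
keys = [synthetic_key("device-001", 10, rng) for _ in range(1000)]

# Count how many writes landed on each synthetic key value.
spread = Counter(keys)
```

The trade-off is on the read side: fetching all items for one device means querying every suffix value for that device.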

You maintain a relational database for a book publisher.

The database contains the following tables.

The most common query lists the books for a given authorId.

You need to develop a non-relational data model for Azure Cosmos DB Core (SQL) API that will replace the relational database. The solution must minimize latency and read operation costs.

What should you include in the solution?

- A . Create a container for Author and a container for Book. In each Author document, embed bookId for each book by the author. In each Book document, embed authorId of each author.

- B . Create Author, Book, and Bookauthorlnk documents in the same container.

- C . Create a container that contains a document for each Author and a document for each Book. In each Book document, embed authorId.

- D . Create a container for Author and a container for Book. In each Author document and Book document embed the data from Bookauthorlnk.

A

Explanation:

Store multiple entity types in the same container.

You have an Azure Cosmos DB Core (SQL) API account.

You run the following query against a container in the account.

SELECT

IS_NUMBER("1234") AS A,

IS_NUMBER(1234) AS B,

IS_NUMBER({prop: 1234}) AS C

What is the output of the query?

- A . [{"A": false, "B": true, "C": false}]

- B . [{"A": true, "B": false, "C": true}]

- C . [{"A": true, "B": true, "C": false}]

- D . [{"A": true, "B": true, "C": true}]

A

Explanation:

IS_NUMBER returns a Boolean value indicating if the type of the specified expression is a number.

"1234" is a string, not a number.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-is-number
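
The same type check can be mimicked in Python to see why only B is true. This is an analogy and not the query engine itself; is_number is a hypothetical helper.

```python
def is_number(value):
    """Approximate IS_NUMBER: true only for numeric values."""
    # bool is a subclass of int in Python, so exclude it explicitly.
    return isinstance(value, (int, float)) and not isinstance(value, bool)


result = [{
    "A": is_number("1234"),          # a string that looks like a number
    "B": is_number(1234),            # an actual number
    "C": is_number({"prop": 1234}),  # an object that contains a number
}]
```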

You need to implement a trigger in Azure Cosmos DB Core (SQL) API that will run before an item is inserted into a container.

Which two actions should you perform to ensure that the trigger runs? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Append pre to the name of the JavaScript function trigger.

- B . For each create request, set the access condition in RequestOptions.

- C . Register the trigger as a pre-trigger.

- D . For each create request, set the consistency level to session in RequestOptions.

- E . For each create request, set the trigger name in RequestOptions.

C, E

Explanation:

C: When triggers are registered, you can specify the operations that it can run with.

E: When executing, pre-triggers are passed in the RequestOptions object by specifying PreTriggerInclude and then passing the name of the trigger in a List object.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-use-stored-procedures-triggers-udfs

HOTSPOT

You have a container in an Azure Cosmos DB Core (SQL) API account.

You need to use the Azure Cosmos DB SDK to replace a document by using optimistic concurrency.

What should you include in the code? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: ConsistencyLevel

The ItemRequestOptions Class ConsistencyLevel property gets or sets the consistency level required for the request in the Azure Cosmos DB service.

Azure Cosmos DB offers 5 different consistency levels. Strong, Bounded Staleness, Session, Consistent Prefix and Eventual – in order of strongest to weakest consistency.

Box 2: _etag

The ItemRequestOptions class helped us implement optimistic concurrency by specifying that we wanted the SDK to use the If-Match header to allow the server to decide whether a resource should be updated. The If-Match value is the ETag value to be checked against. If the ETag value matches the server ETag value, the resource is updated.

Reference:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.cosmos.itemrequestoptions

https://cosmosdb.github.io/labs/dotnet/labs/10-concurrency-control.html
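
The If-Match check described for Box 2 can be modeled without the SDK. The sketch below is a plain-Python simulation. InMemoryContainer, PreconditionFailed, and the if_match parameter are hypothetical stand-ins for the service, an HTTP 412 response, and the ETag condition set on the request.

```python
import uuid


class PreconditionFailed(Exception):
    """Plays the role of an HTTP 412 response."""


class InMemoryContainer:
    """Toy container that assigns a fresh _etag on every write."""

    def __init__(self):
        self._items = {}

    def upsert(self, item):
        stored = dict(item, _etag=uuid.uuid4().hex)
        self._items[item["id"]] = stored
        return dict(stored)

    def read(self, item_id):
        return dict(self._items[item_id])

    def replace(self, item, if_match):
        # Reject the write if the item changed since the caller read it.
        if self._items[item["id"]]["_etag"] != if_match:
            raise PreconditionFailed(item["id"])
        return self.upsert(item)


container = InMemoryContainer()
container.upsert({"id": "1", "price": 10})

reader_a = container.read("1")
reader_b = container.read("1")

reader_a["price"] = 11
container.replace(reader_a, if_match=reader_a["_etag"])  # succeeds

reader_b["price"] = 12
try:
    container.replace(reader_b, if_match=reader_b["_etag"])  # stale ETag
    conflict_detected = False
except PreconditionFailed:
    conflict_detected = True
```

After a rejected write, the usual pattern is to read the item again, reapply the change, and retry with the new ETag.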

HOTSPOT

You are creating a database in an Azure Cosmos DB Core (SQL) API account. The database will be used by an application that will provide users with the ability to share online posts. Users will also be able to submit comments on other users’ posts.

You need to store the data shown in the following table.

The application has the following characteristics:

– Users can submit an unlimited number of posts.

– The average number of posts submitted by a user will be more than 1,000.

– Posts can have an unlimited number of comments from different users.

– The average number of comments per post will be 100, but many posts will exceed 1,000 comments.

– Users will be limited to having a maximum of 20 interests.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Yes

Non-relational data increases write costs, but can decrease read costs.

Box 2: Yes

Non-relational data increases write costs, but can decrease read costs.

Box 3: No

Non-relational data increases write costs, but can decrease read costs.

DRAG DROP

You have an app that stores data in an Azure Cosmos DB Core (SQL) API account. The app performs queries that return large result sets.

You need to return a complete result set to the app by using pagination. Each page of results must return 80 items.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

Step 1: Configure the MaxItemCount in QueryRequestOptions

You can specify the maximum number of items returned by a query by setting the MaxItemCount. The MaxItemCount is specified per request and tells the query engine to return that number of items or fewer.

Step 2: Run the query and provide a continuation token

In the .NET SDK and Java SDK you can optionally use continuation tokens as a bookmark for your query’s progress. Azure Cosmos DB query executions are stateless at the server side and can be resumed at any time using the continuation token.

If the query returns a continuation token, then there are additional query results.

Step 3: Append the results to a variable

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/sql-query-pagination
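
The three steps can be sketched as a loop. The code below is a plain-Python simulation and not SDK code: run_query_page is a hypothetical stand-in for a query call, and the continuation token is modeled as a simple offset string, whereas real tokens are opaque.

```python
def run_query_page(results, max_item_count, continuation=None):
    """Return one page of at most max_item_count items plus the next token."""
    start = int(continuation) if continuation else 0
    page = results[start:start + max_item_count]
    next_start = start + len(page)
    # No token means there are no further results.
    token = str(next_start) if next_start < len(results) else None
    return page, token


def fetch_all(results, max_item_count=80):
    """Step 1: set the page size. Step 2: query with the token. Step 3: append."""
    collected, page_sizes, token = [], [], None
    while True:
        page, token = run_query_page(results, max_item_count, token)
        collected.extend(page)
        page_sizes.append(len(page))
        if token is None:
            break
    return collected, page_sizes


items = list(range(200))
collected, page_sizes = fetch_all(items)
```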

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Cosmos DB Core (SQL) API account named account1 that uses autoscale throughput.

You need to run an Azure function when the normalized request units per second for a container in account1 exceeds a specific value.

Solution: You configure an Azure Monitor alert to trigger the function.

Does this meet the goal?

- A . Yes

- B . No

A

Explanation:

You can set up alerts from the Azure Cosmos DB pane or the Azure Monitor service in the Azure portal.

Note: Alerts are used to set up recurring tests to monitor the availability and responsiveness of your Azure Cosmos DB resources. Alerts can send you a notification in the form of an email, or execute an Azure Function when one of your metrics reaches the threshold or if a specific event is logged in the activity log.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/create-alerts

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Cosmos DB Core (SQL) API account named account1 that uses autoscale throughput.

You need to run an Azure function when the normalized request units per second for a container in account1 exceeds a specific value.

Solution: You configure the function to have an Azure CosmosDB trigger.

Does this meet the goal?

- A . Yes

- B . No

B

Explanation:

Instead configure an Azure Monitor alert to trigger the function.

You can set up alerts from the Azure Cosmos DB pane or the Azure Monitor service in the Azure portal.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/create-alerts

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Cosmos DB Core (SQL) API account named account1 that uses autoscale throughput.

You need to run an Azure function when the normalized request units per second for a container in account1 exceeds a specific value.

Solution: You configure an application to use the change feed processor to read the change feed and you configure the application to trigger the function.

Does this meet the goal?

- A . Yes

- B . No

B

Explanation:

Instead configure an Azure Monitor alert to trigger the function.

You can set up alerts from the Azure Cosmos DB pane or the Azure Monitor service in the Azure portal.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/create-alerts

730610 and longitude: -73.935242.

Administrative effort must be minimized to implement the solution.

What should you configure for each container? To answer, drag the appropriate configurations to the correct containers. Each configuration may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Last Write Wins (LWW) (default) mode

Last Write Wins (LWW): This resolution policy, by default, uses a system-defined timestamp property.

It’s based on the time-synchronization clock protocol.

Box 2: Merge Procedures (custom) mode

Custom: This resolution policy is designed for application-defined semantics for reconciliation of conflicts. When you set this policy on your Azure Cosmos container, you also need to register a merge stored procedure. This procedure is automatically invoked when conflicts are detected under a database transaction on the server. The system provides exactly once guarantee for the execution of a merge procedure as part of the commitment protocol.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/conflict-resolution-policies

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/how-to-manage-conflicts

You have a container in an Azure Cosmos DB Core (SQL) API account. The container stores telemetry data from IoT devices. The container uses telemetryId as the partition key and has a throughput of 1,000 request units per second (RU/s). Approximately 5,000 IoT devices submit data every five minutes by using the same telemetryId value.

You have an application that performs analytics on the data and frequently reads telemetry data for a single IoT device to perform trend analysis.

The following is a sample of a document in the container.

You need to reduce the amount of request units (RUs) consumed by the analytics application.

What should you do?

- A . Decrease the offerThroughput value for the container.

- B . Increase the offerThroughput value for the container.

- C . Move the data to a new container that has a partition key of deviceId.

- D . Move the data to a new container that uses a partition key of date.

C

Explanation:

The partition key is what will determine how data is routed in the various partitions by Cosmos DB and needs to make sense in the context of your specific scenario. The IoT Device ID is generally the "natural" partition key for IoT applications.

Reference: https://docs.microsoft.com/en-us/azure/architecture/solution-ideas/articles/iot-using-cosmos-db
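To see why the partition key matters here, the following Python sketch groups documents into logical partitions by a chosen key. It is only an illustration under assumed data: the property names `deviceId`, `telemetryId`, and `reading`, and the helper `group_by_partition_key`, are hypothetical and not taken from the exam exhibit.

```python
from collections import defaultdict
from typing import Dict, Iterable, List


def group_by_partition_key(docs: Iterable[dict], key: str) -> Dict[str, List[dict]]:
    """Group documents into logical partitions by the value of `key`."""
    partitions: Dict[str, List[dict]] = defaultdict(list)
    for doc in docs:
        partitions[doc[key]].append(doc)
    return dict(partitions)


# Hypothetical sample: 5 devices, 3 readings each, all sharing one telemetryId.
docs = [
    {"deviceId": f"dev{d}", "telemetryId": "t1", "reading": r}
    for d in range(5)
    for r in range(3)
]

by_telemetry = group_by_partition_key(docs, "telemetryId")
by_device = group_by_partition_key(docs, "deviceId")

# With telemetryId, every document lands in one logical partition.
print(len(by_telemetry), len(by_telemetry["t1"]))
# With deviceId, a single-device read is scoped to that device's partition.
print(len(by_device), len(by_device["dev0"]))
```

In this toy data set, a read for one device under the deviceId key touches 3 documents in one partition instead of sifting through all 15 in a single shared partition.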

HOTSPOT

You have an Azure Cosmos DB Core (SQL) API account named storage1 that uses provisioned throughput capacity mode.

The storage1 account contains the databases shown in the following table.

The databases contain the containers shown in the following table.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: No

Four containers with 1000 RU/s each.

Box 2: No

Max 8000 RU/s for db2. 8 containers, so 1000 RU/s for each container.

Box 3: Yes

Max 8000 RU/s for db2. 8 containers, so 1000 RU/s for each container. Can very well add an additional container.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/plan-manage-costs

https://azure.microsoft.com/en-us/pricing/details/cosmos-db/

HOTSPOT

You have a database named telemetry in an Azure Cosmos DB Core (SQL) API account that stores IoT data. The database contains two containers named readings and devices.

Documents in readings have the following structure.

id

deviceid

timestamp

ownerid

measures (array)

– type

– value

– metricid

Documents in devices have the following structure.

id

deviceid

owner

– ownerid

– emailaddress

– name

brand

model

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Yes

Need to join readings and devices.

Box 2: No

Only readings is required. All required fields are in readings.

Box 3: No

Only devices is required. All required fields are in devices.

The settings for a container in an Azure Cosmos DB Core (SQL) API account are configured as shown in the following exhibit.

Which statement describes the configuration of the container?

- A . All items will be deleted after one year.

- B . Items stored in the collection will be retained always, regardless of the item's time to live value.

- C . Items stored in the collection will expire only if the item has a time to live value.

- D . All items will be deleted after one hour.

C

Explanation:

When DefaultTimeToLive is -1, the Time to Live setting is On (no default).

If Time to Live on a container is present and set to "-1", it is equal to infinity, and items don’t expire by default.

Time to Live on an item:

This property is applicable only if DefaultTimeToLive is present and is not set to null for the parent container.

If present, it overrides the DefaultTimeToLive value of the parent container.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/time-to-live
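The interaction between the container-level and item-level settings described above can be sketched as a small Python function. This is an illustrative model of the rules, not SDK code; the function name `effective_ttl` is hypothetical, and `None` stands for a setting that is absent.

```python
from typing import Optional


def effective_ttl(container_default: Optional[int], item_ttl: Optional[int]) -> Optional[int]:
    """Return the effective time to live in seconds, or None if the item never expires."""
    if container_default is None:
        # TTL is off for the container, so item-level ttl values are ignored.
        return None
    # An item-level ttl, when present, overrides the container default.
    ttl = item_ttl if item_ttl is not None else container_default
    # -1 means "infinity": the item does not expire.
    return None if ttl == -1 else ttl


# Container set to -1 (On, no default): only items with their own ttl expire.
print(effective_ttl(-1, None))
print(effective_ttl(-1, 3600))
```

With the container in this question (DefaultTimeToLive of -1), the first call yields no expiry and the second yields 3600 seconds, which matches answer C.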

You have an Azure Cosmos DB Core (SQL) API account that uses a custom conflict resolution policy.

The account has a registered merge procedure that throws a runtime exception.

The runtime exception prevents conflicts from being resolved.

You need to use an Azure function to resolve the conflicts.

What should you use?

- A . a function that pulls items from the conflicts feed and is triggered by a timer trigger

- B . a function that receives items pushed from the change feed and is triggered by an Azure Cosmos DB trigger

- C . a function that pulls items from the change feed and is triggered by a timer trigger

- D . a function that receives items pushed from the conflicts feed and is triggered by an Azure Cosmos DB trigger

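A

Explanation:

The Azure Cosmos DB trigger uses the Azure Cosmos DB change feed to listen for inserts and updates across partitions. The change feed publishes inserts and updates, not deletions or conflicts, so the trigger cannot receive items from the conflicts feed. Conflicts that a merge procedure fails to resolve are written to the conflicts feed, which must be read (pulled) through the SDK. The function therefore runs on a timer trigger and queries the conflicts feed.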

Reference: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb

The following is a sample of a document in orders.

The orders container uses customer as the partition key.

You need to provide a report of the total items ordered per month by item type.

The solution must meet the following requirements:

Ensure that the report can run as quickly as possible.

Minimize the consumption of request units (RUs).

What should you do?

- A . Configure the report to query orders by using a SQL query.

- B . Configure the report to query a new aggregate container. Populate the aggregates by using the change feed.

- C . Configure the report to query orders by using a SQL query through a dedicated gateway.

- D . Configure the report to query a new aggregate container. Populate the aggregates by using SQL queries that run daily.

B

Explanation:

You can facilitate aggregate data by using Change Feed and Azure Functions, and then use it for reporting.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/change-feed
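The aggregation step can be sketched in Python as a function that folds each batch of changed documents into running totals. This is a simplified illustration: the sample document is not shown in this question, so the property names `orderDate`, `items`, `type`, and `quantity` are assumptions, and the sketch assumes each order arrives once and is never updated afterward.

```python
from collections import Counter
from typing import Iterable, Tuple

MonthTypeKey = Tuple[str, str]  # (YYYY-MM, item type)


def apply_changes(totals: Counter, changed_orders: Iterable[dict]) -> Counter:
    """Fold a batch of newly created orders into running (month, type) totals."""
    for order in changed_orders:
        month = order["orderDate"][:7]  # "2021-04-23" -> "2021-04"
        for item in order["items"]:
            totals[(month, item["type"])] += item["quantity"]
    return totals


# Hypothetical batch, as a change feed handler might receive it.
totals: Counter = Counter()
batch = [
    {"id": "o1", "orderDate": "2021-04-23", "items": [{"type": "fruit", "quantity": 2}]},
    {
        "id": "o2",
        "orderDate": "2021-04-25",
        "items": [{"type": "fruit", "quantity": 1}, {"type": "dairy", "quantity": 4}],
    },
]
apply_changes(totals, batch)
print(totals[("2021-04", "fruit")])
```

The report then reads a handful of precomputed totals from the aggregate container instead of scanning orders across all customer partitions.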

HOTSPOT

You have three containers in an Azure Cosmos DB Core (SQL) API account as shown in the following table.

You have the following Azure functions:

– A function named Fn1 that reads the change feed of cn1

– A function named Fn2 that reads the change feed of cn2

– A function named Fn3 that reads the change feed of cn3

You perform the following actions:

– Delete an item named item1 from cn1.

– Update an item named item2 in cn2.

– For an item named item3 in cn3, update the item time to live to 3,600 seconds.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: No

Azure Cosmos DB’s change feed is a great choice as a central data store in event sourcing architectures where all data ingestion is modeled as writes (no updates or deletes).

Note: The change feed does not capture deletes. If you delete an item from your container, it is also removed from the change feed. The most common method of handling this is adding a soft marker on the items that are being deleted. You can add a property called "deleted" and set it to "true" at the time of deletion. This document update will show up in the change feed. You can set a TTL on this item so that it can be automatically deleted later.

Box 2: No

The _etag format is internal and you should not take dependency on it, because it can change anytime.

Box 3: Yes

Change feed support in Azure Cosmos DB works by listening to an Azure Cosmos container for any changes.

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/change-feed-design-patterns

https://docs.microsoft.com/en-us/azure/cosmos-db/change-feed
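The soft-delete pattern mentioned in the note for Box 1 can be sketched as follows. This is a minimal Python illustration of how a change feed consumer might separate soft-deleted items from ordinary upserts; the helper name `split_soft_deletes` and the sample documents are hypothetical, and the marker property is the "deleted" flag described above.

```python
from typing import Iterable, List, Tuple


def split_soft_deletes(changes: Iterable[dict]) -> Tuple[List[dict], List[dict]]:
    """Separate change feed items into (upserts, soft_deletes)."""
    upserts: List[dict] = []
    deletes: List[dict] = []
    for doc in changes:
        # A soft delete is an ordinary update that sets the marker property.
        (deletes if doc.get("deleted") is True else upserts).append(doc)
    return upserts, deletes


# Hypothetical batch: item1 is soft-deleted with a TTL so it is purged later.
changes = [
    {"id": "item1", "deleted": True, "ttl": 3600},
    {"id": "item2", "price": 10},
]
upserts, deletes = split_soft_deletes(changes)
print([d["id"] for d in deletes])
```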

HOTSPOT

You configure Azure Cognitive Search to index a container in an Azure Cosmos DB Core (SQL) API account as shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: country

The country field is filterable.

Note: filterable: Indicates whether to enable the field to be referenced in $filter queries.

Filterable differs from searchable in how strings are handled. Fields of type Edm.String or Collection (Edm.String) that are filterable do not undergo lexical analysis, so comparisons are for exact matches only.

Box 2: name

The name field is not Retrievable.

Retrievable: Indicates whether the field can be returned in a search result. Set this attribute to false if you want to use a field (for example, margin) as a filter, sorting, or scoring mechanism but do not want the field to be visible to the end user.

Note: searchable: Indicates whether the field is full-text searchable and can be referenced in search queries.

Reference: https://docs.microsoft.com/en-us/rest/api/searchservice/create-index

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to make the contents of container1 available as reference data for an Azure Stream Analytics job.

Solution: You create an Azure Synapse pipeline that uses Azure Cosmos DB Core (SQL) API as the input and Azure Blob Storage as the output.

Does this meet the goal?

- A . Yes

- B . No

B

Explanation:

Instead create an Azure function that uses Azure Cosmos DB Core (SQL) API change feed as a trigger and Azure event hub as the output.

The Azure Cosmos DB change feed is a mechanism to get a continuous and incremental feed of records from an Azure Cosmos container as those records are being created or modified. Change feed support works by listening to container for any changes. It then outputs the sorted list of documents that were changed in the order in which they were modified.

The following diagram represents the data flow and components involved in the solution:

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/changefeed-ecommerce-solution

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to make the contents of container1 available as reference data for an Azure Stream Analytics job.

Solution: You create an Azure Data Factory pipeline that uses Azure Cosmos DB Core (SQL) API as the input and Azure Blob Storage as the output.

Does this meet the goal?

- A . Yes

- B . No

B

Explanation:

Instead create an Azure function that uses Azure Cosmos DB Core (SQL) API change feed as a trigger and Azure event hub as the output.

The Azure Cosmos DB change feed is a mechanism to get a continuous and incremental feed of records from an Azure Cosmos container as those records are being created or modified. Change feed support works by listening to container for any changes. It then outputs the sorted list of documents that were changed in the order in which they were modified.

The following diagram represents the data flow and components involved in the solution:

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/changefeed-ecommerce-solution

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to make the contents of container1 available as reference data for an Azure Stream Analytics job.

Solution: You create an Azure function that uses Azure Cosmos DB Core (SQL) API change feed as a trigger and Azure event hub as the output.

Does this meet the goal?

- A . Yes

- B . No

A

Explanation:

The Azure Cosmos DB change feed is a mechanism to get a continuous and incremental feed of records from an Azure Cosmos container as those records are being created or modified. Change feed support works by listening to container for any changes. It then outputs the sorted list of documents that were changed in the order in which they were modified.

The following diagram represents the data flow and components involved in the solution:

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/sql/changefeed-ecommerce-solution

HOTSPOT

You have the indexing policy shown in the following exhibit.

Use the drop-down menus to select the answer choice that answers each question based on the information presented in the graphic. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: ORDER BY c.name DESC, c.age DESC

Queries that have an ORDER BY clause with two or more properties require a composite index.

The following considerations are used when using composite indexes for queries with an ORDER BY clause with two or more properties:

If the composite index paths do not match the sequence of the properties in the ORDER BY clause, then the composite index can’t support the query.

The order of composite index paths (ascending or descending) should also match the order in the ORDER BY clause.

The composite index also supports an ORDER BY clause with the opposite order on all paths.

Box 2: At the same time as the item creation

Azure Cosmos DB supports two indexing modes:

Consistent: The index is updated synchronously as you create, update or delete items. This means that the consistency of your read queries will be the consistency configured for the account.

None: Indexing is disabled on the container.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/index-policy
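The three ORDER BY considerations listed for Box 1 can be expressed as a small check. The following Python sketch encodes only those stated rules (same path sequence, and either the same order or the opposite order on all paths). The exhibit is not reproduced here, so the sample index on name and age is an assumption, and `supports_order_by` is a hypothetical helper, not part of any SDK.

```python
from typing import List, Tuple

Path = Tuple[str, str]  # (property path, "ascending" | "descending")


def _flip(order: str) -> str:
    return "descending" if order == "ascending" else "ascending"


def supports_order_by(composite: List[Path], order_by: List[Path]) -> bool:
    """Check an ORDER BY clause against one composite index using the rules above."""
    if [p for p, _ in composite] != [p for p, _ in order_by]:
        return False  # the path sequence must match
    same = all(c == o for (_, c), (_, o) in zip(composite, order_by))
    opposite = all(_flip(c) == o for (_, c), (_, o) in zip(composite, order_by))
    return same or opposite


# Assumed composite index: (name ASC, age ASC).
index = [("/name", "ascending"), ("/age", "ascending")]

print(supports_order_by(index, [("/name", "descending"), ("/age", "descending")]))
print(supports_order_by(index, [("/name", "ascending"), ("/age", "descending")]))
```

Under this assumed index, the all-descending clause is supported because it is the opposite order on every path, while the mixed clause is not.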

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account. Upserts of items in container1 occur every three seconds.

You have an Azure Functions app named function1 that is supposed to run whenever items are inserted or replaced in container1.

You discover that function1 runs, but not on every upsert.

You need to ensure that function1 processes each upsert within one second of the upsert.

Which property should you change in the function.json file of function1?

- A . checkpointInterval

- B . leaseCollectionsThroughput

- C . maxItemsPerInvocation

- D . feedPollDelay

D

Explanation:

An upsert operation either inserts a new record or updates an existing one.

feedPollDelay: The time (in milliseconds) for the delay between polling a partition for new changes on the feed, after all current changes are drained. Default is 5,000 milliseconds, or 5 seconds. Because each upsert must be processed within one second, the default delay is too long and must be lowered to less than 1,000 milliseconds.

Incorrect Answers:

A: checkpointInterval: When set, it defines, in milliseconds, the interval between lease checkpoints. Default is always after each Function call.

C: maxItemsPerInvocation: When set, this property sets the maximum number of items received per Function call. If operations in the monitored collection are performed through stored procedures, transaction scope is preserved when reading items from the change feed. As a result, the number of items received could be higher than the specified value so that the items changed by the same transaction are returned as part of one atomic batch.

Reference: https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-cosmosdb-v2-trigger
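As a rough illustration, the following Python snippet builds a function.json trigger binding with a lowered feedPollDelay and prints it as JSON. It is a sketch under assumptions: the property names follow the trigger version that the answer options refer to (the one that uses names such as leaseCollectionsThroughput), while the connection setting, database, and lease container names are placeholders, and 500 milliseconds is only an example value below the one-second target.

```python
import json

# Hypothetical binding for function1; the values are placeholders.
binding = {
    "type": "cosmosDBTrigger",
    "name": "documents",
    "direction": "in",
    "connectionStringSetting": "CosmosDBConnection",
    "databaseName": "db1",
    "collectionName": "container1",
    "leaseCollectionName": "leases",
    "createLeaseCollectionIfNotExists": True,
    # Poll for new changes every 500 ms instead of the default 5,000 ms.
    "feedPollDelay": 500,
}

function_json = json.dumps({"bindings": [binding]}, indent=2)
print(function_json)
```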

HOTSPOT

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

The following is a sample of a document in container1.

    {
      "studentId": "631282",
      "firstName": "James",
      "lastName": "Smith",
      "enrollmentYear": 1990,
      "isActivelyEnrolled": true,
      "address": {
        "street": "",
        "city": "",
        "stateProvince": "",
        "postal": ""
      }
    }

The container1 container has the following indexing policy.

    {
      "indexingMode": "consistent",
      "includePaths": [
        {
          "path": "/*"
        },
        {
          "path": "/address/city/?"
        }
      ],
      "excludePaths": [
        {
          "path": "/address/*"
        },
        {
          "path": "/firstName/?"
        }
      ]
    }

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Yes

"path": "/*" is in includePaths.

Include the root path to selectively exclude paths that don’t need to be indexed. This is the recommended approach as it lets Azure Cosmos DB proactively index any new property that may be added to your model.

Box 2: No

"path": "/firstName/?" is in excludePaths.

Box 3: Yes

"path": "/address/city/?" is in includePaths

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/index-policy
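The three answers follow from the rule that, when an included path and an excluded path conflict, the more precise path takes precedence. The Python sketch below is a simplified model of that rule for the policy in this question. It is not the service's matching algorithm: it handles only the `/*` and `/?` suffixes, measures precision by path depth, and the helper names are hypothetical.

```python
from typing import List, Tuple


def _matches(pattern: str, path: str) -> bool:
    """Return True if a policy path such as '/address/*' covers the property path."""
    if pattern.endswith("/*"):
        prefix = pattern[:-2]
        return path == prefix or path.startswith(prefix + "/")
    if pattern.endswith("/?"):
        return path == pattern[:-2]
    return path == pattern


def _precision(pattern: str) -> Tuple[int, int]:
    """Deeper paths are more precise; at equal depth, '/?' beats '/*'."""
    segments = [s for s in pattern.split("/") if s not in ("", "*", "?")]
    return (len(segments), 1 if pattern.endswith("/?") else 0)


def is_indexed(path: str, included: List[str], excluded: List[str]) -> bool:
    """Decide whether a property path is indexed; the most precise matching rule wins."""
    candidates = [(_precision(p), True) for p in included if _matches(p, path)]
    candidates += [(_precision(p), False) for p in excluded if _matches(p, path)]
    return max(candidates)[1] if candidates else False


included = ["/*", "/address/city/?"]
excluded = ["/address/*", "/firstName/?"]

for prop in ("/lastName", "/firstName", "/address/city", "/address/street"):
    print(prop, is_indexed(prop, included, excluded))
```

In this model, /address/city is indexed because its include rule is deeper than the /address/* exclusion, while /address/street falls under the exclusion.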

You have the following query.

    SELECT * FROM c
    WHERE c.sensor = "TEMP1"
    AND c.value < 22
    AND c.timestamp >= 1619146031231

You need to recommend a composite index strategy that will minimize the request units (RUs) consumed by the query.

What should you recommend?

- A . a composite index for (sensor ASC, value ASC) and a composite index for (sensor ASC, timestamp ASC)

- B . a composite index for (sensor ASC, value ASC, timestamp ASC) and a composite index for (sensor DESC, value DESC, timestamp DESC)

- C . a composite index for (value ASC, sensor ASC) and a composite index for (timestamp ASC, sensor ASC)

- D . a composite index for (sensor ASC, value ASC, timestamp ASC)

A

Explanation:

If a query has a filter with two or more properties, adding a composite index will improve performance.

Consider the following query:

SELECT * FROM c WHERE c.name = "Tim" AND c.age > 18

In the absence of a composite index on (name ASC, age ASC), the query uses a range index. Creating a composite index on name and age makes the query more efficient. Queries with multiple equality filters and a maximum of one range filter (such as >, <, <=, >=, !=) will utilize the composite index. The query in this question has one equality filter (sensor) and two range filters (value and timestamp), so it needs two composite indexes, each starting with sensor and ending with one of the range properties.

Reference: https://azure.microsoft.com/en-us/blog/three-ways-to-leverage-composite-indexes-in-azure-cosmos-db/
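The explanation states that a composite index serves multiple equality filters and at most one range filter. One way to apply that rule to a query with several range filters is to build one composite index per range property, each led by the equality properties. The Python sketch below generates such definitions; the helper name `composite_indexes_for_filters` is hypothetical and the output is a plain data structure, not an SDK call.

```python
from typing import List, Tuple

IndexPath = Tuple[str, str]  # (property path, sort order)


def composite_indexes_for_filters(equality: List[str], ranges: List[str]) -> List[List[IndexPath]]:
    """Build one composite index per range property, each led by the equality properties."""
    lead = [(f"/{name}", "ascending") for name in equality]
    candidates = [lead + [(f"/{r}", "ascending")] for r in ranges] or [lead]
    # A composite index needs at least two paths.
    return [c for c in candidates if len(c) >= 2]


# The query in this question: sensor is an equality filter; value and timestamp are range filters.
for index in composite_indexes_for_filters(["sensor"], ["value", "timestamp"]):
    print(index)
```

For this query, the sketch produces (sensor, value) and (sensor, timestamp), which corresponds to option A.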

HOTSPOT

You have a database in an Azure Cosmos DB Core (SQL) API account that is used for development.

The database is modified once per day in a batch process.

You need to ensure that you can restore the database if the last batch process fails. The solution must minimize costs.

How should you configure the backup settings? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

HOTSPOT

You have an Azure Cosmos DB Core (SQL) API account named account1.

You have the Azure virtual networks and subnets shown in the following table.

The vnet1 and vnet2 networks are connected by using a virtual network peer.

The Firewall and virtual network settings for account1 are configured as shown in the exhibit.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Yes

VM1 is on vnet1.subnet1 which has the Endpoint Status enabled.

Box 2: No

Only virtual networks and their subnets that are added to the Azure Cosmos account have access. Peered VNets cannot access the account until the subnets within the peered virtual networks are added to the account.

Box 3: No

Only virtual networks and their subnets that are added to the Azure Cosmos account have access.

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-configure-vnet-service-endpoint

You plan to create an Azure Cosmos DB Core (SQL) API account that will use customer-managed keys stored in Azure Key Vault.

You need to configure an access policy in Key Vault to allow Azure Cosmos DB access to the keys.

Which three permissions should you enable in the access policy? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Wrap Key

- B . Get

- C . List

- D . Update

- E . Sign

- F . Verify

- G . Unwrap Key

A, B, G

Explanation:

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/how-to-setup-cmk

You need to configure an Apache Kafka instance to ingest data from an Azure Cosmos DB Core (SQL) API account. The data from a container named telemetry must be added to a Kafka topic named iot.

The solution must store the data in a compact binary format.

Which three configuration items should you include in the solution? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSourceConnector"

- B . "key.converter": "org.apache.kafka.connect.json.JsonConverter"

- C . "key.converter": "io.confluent.connect.avro.AvroConverter"

- D . "connect.cosmos.containers.topicmap": "iot#telemetry"

- E . "connect.cosmos.containers.topicmap": "iot"

- F . "connector.class": "com.azure.cosmos.kafka.connect.source.CosmosDBSinkConnector"

A, C, D

Explanation:

A: Kafka Connect for Azure Cosmos DB is a connector to read from and write data to Azure Cosmos DB. The Azure Cosmos DB source connector reads changes from Azure Cosmos DB containers and publishes them to Kafka topics, which is the direction that this scenario requires.

C: Avro is a compact binary format, while JSON is text.

D: The topic map uses the format topic#container, so "iot#telemetry" maps the iot topic to the telemetry container.

Incorrect Answers:

B: JSON is plain text.

E: The value does not map the topic to a container.

F: The Azure Cosmos DB sink connector exports data from Apache Kafka topics to an Azure Cosmos DB database, which is the opposite direction. The connector polls data from Kafka to write to containers in the database based on the topics subscription.

Note: For comparison, the following is a full example of a sink connector configuration, which uses the same topicmap format:

    {
      "name": "cosmosdb-sink-connector",
      "config": {
        "connector.class": "com.azure.cosmos.kafka.connect.sink.CosmosDBSinkConnector",
        "tasks.max": "1",
        "topics": [ "hotels" ],
        "value.converter": "io.confluent.connect.avro.AvroConverter",
        "value.converter.schemas.enable": "false",
        "key.converter": "io.confluent.connect.avro.AvroConverter",
        "key.converter.schemas.enable": "false",
        "connect.cosmos.connection.endpoint": "https://<cosmosinstance-name>.documents.azure.com:443/",
        "connect.cosmos.master.key": "<cosmosdbprimarykey>",
        "connect.cosmos.databasename": "kafkaconnect",
        "connect.cosmos.containers.topicmap": "hotels#kafka"
      }
    }

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/sql/kafka-connector-sink

https://www.confluent.io/blog/kafka-connect-deep-dive-converters-serialization-explained/
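The `connect.cosmos.containers.topicmap` value pairs each Kafka topic with a container by using a `topic#container` format, as in "hotels#kafka" above. The following Python sketch parses such a value to make the format explicit. It assumes that multiple pairs are separated by commas; `parse_topicmap` is a hypothetical helper, not part of the connector.

```python
from typing import Dict


def parse_topicmap(value: str) -> Dict[str, str]:
    """Parse 'topic1#container1,topic2#container2' into {topic: container}."""
    mapping: Dict[str, str] = {}
    for pair in value.split(","):
        pair = pair.strip()
        if not pair:
            continue
        topic, sep, container = pair.partition("#")
        if not sep or not topic or not container:
            raise ValueError(f"expected 'topic#container', got {pair!r}")
        mapping[topic] = container
    return mapping


print(parse_topicmap("iot#telemetry"))
```

A value such as "iot" on its own names a topic but no container, which is why the sketch rejects it.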

You are implementing an Azure Data Factory data flow that will use an Azure Cosmos DB (SQL API) sink to write a dataset. The data flow will use 2,000 Apache Spark partitions.

You need to ensure that the ingestion from each Spark partition is balanced to optimize throughput.

Which sink setting should you configure?

- A . Throughput

- B . Write throughput budget

- C . Batch size

- D . Collection action

C

Explanation:

Batch size: An integer that represents how many objects are being written to Cosmos DB collection in each batch. Usually, starting with the default batch size is sufficient. To further tune this value, note: Cosmos DB limits single request’s size to 2MB. The formula is "Request Size = Single Document Size * Batch Size". If you hit error saying "Request size is too large", reduce the batch size value.

The larger the batch size, the better the throughput the service can achieve, provided that you allocate enough RUs for your workload.

Incorrect Answers:

A: Throughput: Set an optional value for the number of RUs you’d like to apply to your CosmosDB collection for each execution of this data flow. Minimum is 400.

B: Write throughput budget: An integer that represents the RUs you want to allocate for this Data Flow write operation, out of the total throughput allocated to the collection.

D: Collection action: Determines whether to recreate the destination collection prior to writing.

None: No action will be done to the collection.

Recreate: The collection will get dropped and recreated.

Reference: https://docs.microsoft.com/en-us/azure/data-factory/connector-azure-cosmos-db
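The formula "Request Size = Single Document Size * Batch Size" can be turned around to estimate the largest batch size that stays within the request limit. The Python sketch below does that arithmetic. It assumes that "2MB" means 2 × 1024 × 1024 bytes and that all documents are the same size; `max_batch_size` is a hypothetical helper.

```python
MAX_REQUEST_BYTES = 2 * 1024 * 1024  # assumption: "2MB" read as 2 MiB


def max_batch_size(document_bytes: int, limit_bytes: int = MAX_REQUEST_BYTES) -> int:
    """Largest batch size for which document_bytes * batch_size stays within the limit."""
    if document_bytes <= 0:
        raise ValueError("document_bytes must be positive")
    return limit_bytes // document_bytes


# Example: 4 KiB documents.
print(max_batch_size(4096))
```

For 4 KiB documents this gives 512, so a larger batch size would risk the "Request size is too large" error.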

You have a container named container1 in an Azure Cosmos DB Core (SQL) API account.

You need to provide a user named User1 with the ability to insert items into container1 by using role-based access control (RBAC). The solution must use the principle of least privilege.

Which roles should you assign to User1?

- A . CosmosDB Operator only

- B . DocumentDB Account Contributor and Cosmos DB Built-in Data Contributor

- C . DocumentDB Account Contributor only

- D . Cosmos DB Built-in Data Contributor only

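D

Explanation:

Cosmos DB Built-in Data Contributor is a data plane role that grants permission to read and write items in containers. Assigning only this role is the least privilege that lets User1 insert items.

Incorrect Answers:

A: Cosmos DB Operator: Can provision Azure Cosmos accounts, databases, and containers. Cannot access any data or use Data Explorer.

B: DocumentDB Account Contributor can manage Azure Cosmos DB accounts, which is more than is required. Azure Cosmos DB is formerly known as DocumentDB.

C: DocumentDB Account Contributor: Can manage Azure Cosmos DB accounts.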

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/role-based-access-control

You have an Azure Cosmos DB Core (SQL) API account.

You configure the diagnostic settings to send all log information to a Log Analytics workspace.

You need to identify when the provisioned request units per second (RU/s) for resources within the account were modified.

You write the following query.

AzureDiagnostics

| where Category == "ControlPlaneRequests"

What should you include in the query?

- A . | where OperationName startswith "AccountUpdateStart"

- B . | where OperationName startswith "SqlContainersDelete"

- C . | where OperationName startswith "MongoCollectionsThroughputUpdate"

- D . | where OperationName startswith "SqlContainersThroughputUpdate"

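D

Explanation:

For API-specific operations, the operation name has the format ApiKind + ApiKindResourceType + OperationType. A throughput change on a Core (SQL) API container is therefore logged as SqlContainersThroughputUpdate.

The following are the operation names in diagnostic logs for account-level operations, none of which record a throughput change:

RegionAddStart, RegionAddComplete

RegionRemoveStart, RegionRemoveComplete

AccountDeleteStart, AccountDeleteComplete

RegionFailoverStart, RegionFailoverComplete

AccountCreateStart, AccountCreateComplete

AccountUpdateStart, AccountUpdateComplete

VirtualNetworkDeleteStart, VirtualNetworkDeleteComplete

DiagnosticLogUpdateStart, DiagnosticLogUpdateComplete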

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/audit-control-plane-logs

You have a database in an Azure Cosmos DB Core (SQL) API account. The database is backed up every two hours.

You need to implement a solution that supports point-in-time restore.

What should you do first?

- A . Enable Continuous Backup for the account.

- B . Configure the Backup & Restore settings for the account.

- C . Create a new account that has a periodic backup policy.

- D . Configure the Point in Time Restore settings for the account.

A

Explanation:

Reference: https://docs.microsoft.com/en-us/azure/cosmos-db/provision-account-continuous-backup

HOTSPOT

You have an Azure Cosmos DB Core (SQL) API account used by an application named App1.

You open the Insights pane for the account and see the following chart.

Use the drop-down menus to select the answer choice that answers each question based on the information presented in the graphic. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: incorrect connection URLs

400 Bad Request: Returned when there is an error in the request URI, headers, or body. The response body will contain an error message explaining what the specific problem is.

The HyperText Transfer Protocol (HTTP) 400 Bad Request response status code indicates that the server cannot or will not process the request due to something that is perceived to be a client error (for example, malformed request syntax, invalid request message framing, or deceptive request routing).

Box 2: 6 thousand

201 Created: Success on PUT or POST. Object created or updated successfully.

Note:

200 OK: Success on GET, PUT, or POST. Returned for a successful response.

404 Not Found: Returned when a resource does not exist on the server. If you are managing or querying an index, check the syntax and verify the index name is specified correctly.

Reference: https://docs.microsoft.com/en-us/rest/api/searchservice/http-status-codes