Microsoft DP-300 Administering Relational Databases on Microsoft Azure Online Training


The questions for DP-300 were last updated on Feb 22, 2026.

- Exam Code: DP-300

- Exam Name: Administering Relational Databases on Microsoft Azure

- Certification Provider: Microsoft

- Latest update: Feb 22, 2026

You need to provide an implementation plan to configure data retention for ResearchDB1. The solution must meet the security and compliance requirements.

What should you include in the plan?

- A . Configure the Deleted databases settings for ResearchSrv01.

- B . Deploy and configure an Azure Backup server.

- C . Configure the Advanced Data Security settings for ResearchDB1.

- D . Configure the Manage Backups settings for ResearchSrv01.

Topic 2, Contoso Ltd

Case study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview

Existing Environment

Contoso, Ltd. is a financial data company that has 100 employees. The company delivers financial data to customers.

Active Directory

Contoso has a hybrid Azure Active Directory (Azure AD) deployment that syncs to on-premises Active Directory.

Database Environment

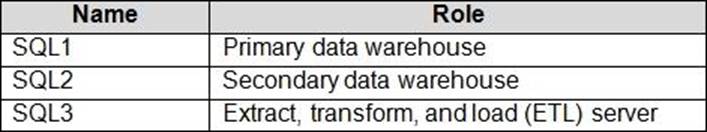

Contoso has SQL Server 2017 on Azure virtual machines shown in the following table.

SQL1 and SQL2 are in an Always On availability group and are actively queried. SQL3 runs jobs, provides historical data, and handles the delivery of data to customers.

The on-premises datacenter contains a PostgreSQL server that has a 50-TB database.

Current Business Model

Contoso uses Microsoft SQL Server Integration Services (SSIS) to create flat files for customers. The customers receive the files by using FTP.

Requirements

Planned Changes

Contoso plans to move to a model in which it delivers data to customer databases that run as platform as a service (PaaS) offerings. When a customer establishes a service agreement with Contoso, a separate resource group that contains an Azure SQL database will be provisioned for the customer. The database will have a complete copy of the financial data. The data that each customer can access will depend on the service agreement tier. Customers can change tiers by changing their service agreement.

The estimated size of each PaaS database is 1 TB.

Contoso plans to implement the following changes:

Move the PostgreSQL database to Azure Database for PostgreSQL during the next six months.

Upgrade SQL1, SQL2, and SQL3 to SQL Server 2019 during the next few months.

Start onboarding customers to the new PaaS solution within six months.

Business Goals

Contoso identifies the following business requirements:

Use built-in Azure features whenever possible.

Minimize development effort whenever possible.

Minimize the compute costs of the PaaS solutions.

Provide all the customers with their own copy of the database by using the PaaS solution. Provide the customers with different table and row access based on the customer’s service agreement.

In the event of an Azure regional outage, ensure that the customers can access the PaaS solution with minimal downtime. The solution must provide automatic failover.

Ensure that users of the PaaS solution can create their own database objects but are prevented from modifying any of the existing database objects supplied by Contoso.

Technical Requirements

Contoso identifies the following technical requirements:

Users of the PaaS solution must be able to sign in by using their own corporate Azure AD credentials or have Azure AD credentials supplied to them by Contoso. The solution must avoid using the internal Azure AD of Contoso to minimize guest users.

All customers must have their own resource group, Azure SQL server, and Azure SQL database. The deployment of resources for each customer must be done in a consistent fashion.

Users must be able to review the queries issued against the PaaS databases and identify any new objects created.

Downtime during the PostgreSQL database migration must be minimized.

Monitoring Requirements

Contoso identifies the following monitoring requirements:

Notify administrators when a PaaS database has higher-than-average CPU usage.

Use a single dashboard to review security and audit data for all the PaaS databases.

Use a single dashboard to monitor query performance and bottlenecks across all the PaaS databases.

Monitor the PaaS databases to identify poorly performing queries and resolve query performance issues automatically whenever possible.
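The CPU notification requirement compares each reading against a baseline learned from the database's own history ("higher than average") rather than against one fixed number. The Python sketch below illustrates that idea with a rolling mean plus a multiple of the standard deviation. It is a simplified assumption, not how Azure Monitor computes dynamic thresholds (which use their own model), and the function name, window size, sensitivity factor, and sample data are hypothetical.

```python
from statistics import mean, stdev


def flag_high_cpu(samples, window=12, sensitivity=2.0):
    """Return the indices of CPU samples that exceed a rolling baseline.

    Hypothetical helper: the threshold for sample i is the mean of the
    previous `window` samples plus `sensitivity` standard deviations.
    `window` must be at least 2 so that stdev() is defined.
    """
    alerts = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        threshold = mean(history) + sensitivity * stdev(history)
        if samples[i] > threshold:
            alerts.append(i)
    return alerts


if __name__ == "__main__":
    # Hypothetical CPU percentages, one reading per 5 minutes.
    cpu = [20, 22, 19, 21, 23, 20, 22, 21, 19, 20, 22, 21, 85, 24, 21]
    print(flag_high_cpu(cpu))  # → [12]
```

Because the threshold moves with recent history, a database that normally runs hot does not alert constantly, while a quiet database still alerts on a spike; a single static threshold cannot do both.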

PaaS Prototype

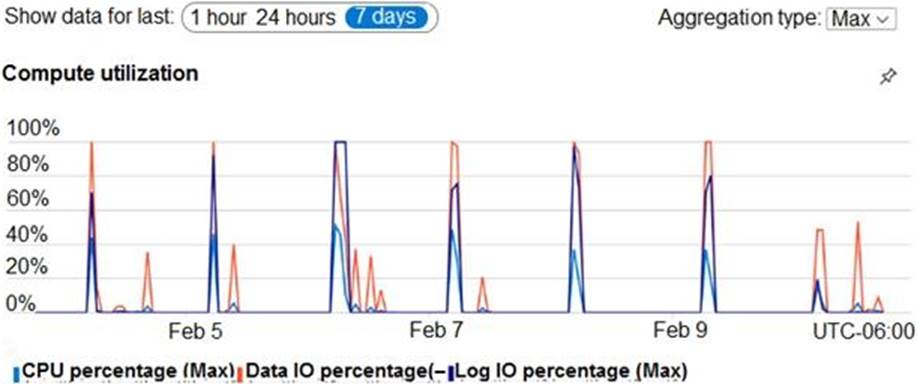

During prototyping of the PaaS solution in Azure, you record the compute utilization of a customer’s Azure SQL database as shown in the following exhibit.

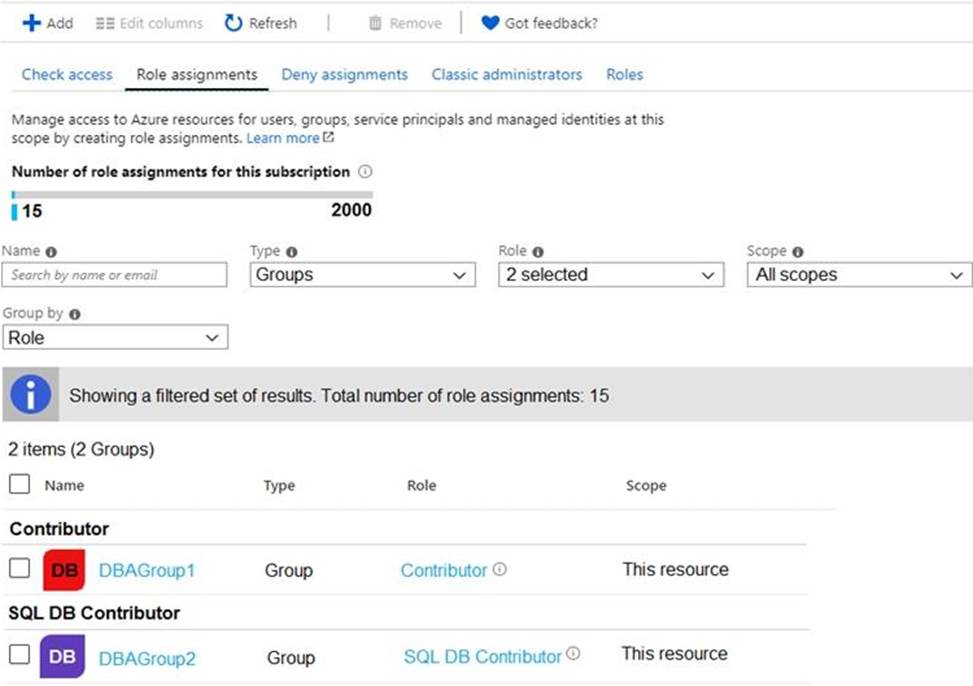

Role Assignments

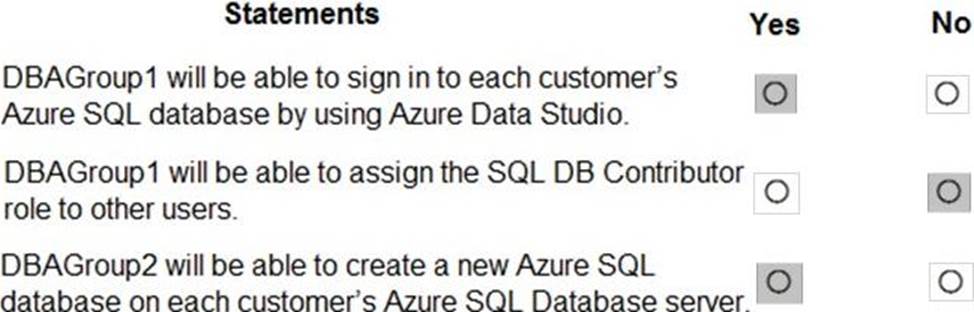

For each customer’s Azure SQL Database server, you plan to assign the roles shown in the following exhibit.

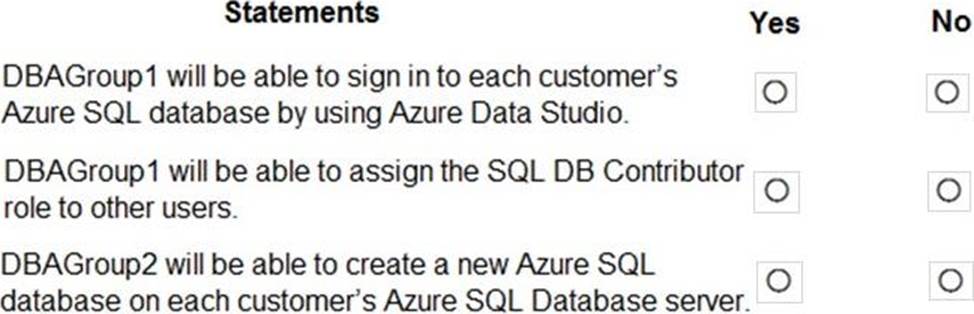

HOTSPOT

You are evaluating the role assignments.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Based on the PaaS prototype, which Azure SQL Database compute tier should you use?

- A . Business Critical 4-vCore

- B . Hyperscale

- C . General Purpose 4-vCore

- D . Serverless

Which audit log destination should you use to meet the monitoring requirements?

- A . Azure Storage

- B . Azure Event Hubs

- C . Azure Log Analytics

What should you implement to meet the disaster recovery requirements for the PaaS solution?

- A . Availability Zones

- B . failover groups

- C . Always On availability groups

- D . geo-replication

What should you use to migrate the PostgreSQL database?

- A . Azure Data Box

- B . AzCopy

- C . Azure Database Migration Service

- D . Azure Site Recovery

You need to implement a solution to notify the administrators. The solution must meet the monitoring requirements.

What should you do?

- A . Create an Azure Monitor alert rule that has a static threshold and assign the alert rule to an action group.

- B . Add a diagnostic setting that logs QueryStoreRuntimeStatistics and streams to an Azure event hub.

- C . Add a diagnostic setting that logs Timeouts and streams to an Azure event hub.

- D . Create an Azure Monitor alert rule that has a dynamic threshold and assign the alert rule to an action group.

Topic 3, ADatum Corporation


Overview

ADatum Corporation is a retailer that sells products through two sales channels: retail stores and a website.

Existing Environment

ADatum has one database server that has Microsoft SQL Server 2016 installed. The server hosts three mission-critical databases named SALESDB, DOCDB, and REPORTINGDB.

SALESDB collects data from the stores and the website.

DOCDB stores documents that connect to the sales data in SALESDB. The documents are stored in two different JSON formats based on the sales channel.

REPORTINGDB stores reporting data and contains several columnstore indexes. A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Requirements

Planned Changes

ADatum plans to move the current data infrastructure to Azure.

The new infrastructure has the following requirements:

✑ Migrate SALESDB and REPORTINGDB to an Azure SQL database.

✑ Migrate DOCDB to Azure Cosmos DB.

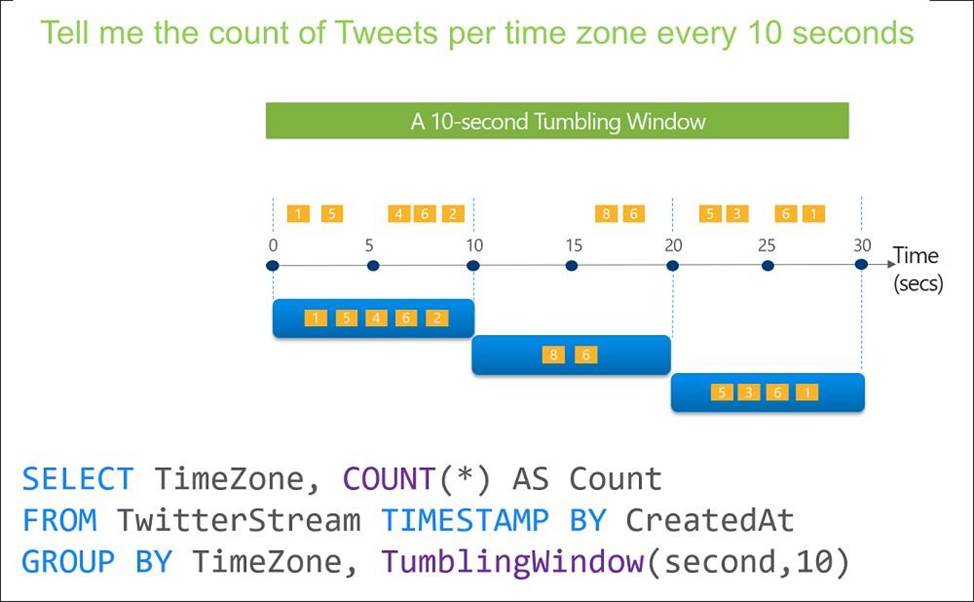

✑ The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytics process will perform aggregations that must be done continuously, without gaps, and without overlapping.

✑ As they arrive, all the sales documents in JSON format must be transformed into one consistent format.

✑ Azure Data Factory will replace the SSIS process of copying the data from SALESDB to REPORTINGDB.
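The aggregation requirement (continuous, without gaps, and without overlapping) describes fixed-size, contiguous windows in which every event belongs to exactly one window; Azure Stream Analytics calls these tumbling windows. The Python sketch below illustrates only that bucketing logic outside Stream Analytics. The (timestamp, amount) event shape, the 60-second window size, and the function name are hypothetical.

```python
from collections import defaultdict


def tumbling_sum(events, window_seconds=60):
    """Sum sale amounts per tumbling window.

    events: iterable of (timestamp_seconds, amount) pairs (hypothetical shape).
    Each event maps to exactly one window [start, start + window_seconds),
    so consecutive windows neither overlap nor leave gaps between them.
    Windows that receive no events produce no entry.
    """
    totals = defaultdict(float)
    for ts, amount in events:
        window_start = (ts // window_seconds) * window_seconds
        totals[window_start] += amount
    return dict(sorted(totals.items()))


if __name__ == "__main__":
    sales = [(5, 10.0), (42, 5.5), (61, 7.0), (119, 3.0), (120, 1.0)]
    print(tumbling_sum(sales))  # → {0: 15.5, 60: 10.0, 120: 1.0}
```

By contrast, a hopping window whose hop is shorter than its size would count the same event in several windows, which is the overlap the requirement rules out.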

Technical Requirements

The new Azure data infrastructure must meet the following technical requirements:

✑ Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

✑ SALESDB must be restorable to any given minute within the past three weeks.

✑ Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

✑ Missing indexes must be created automatically for REPORTINGDB.

✑ Disk IO, CPU, and memory usage must be monitored for SALESDB.
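The requirement that SALESDB be restorable to any given minute within the past three weeks translates into a point-in-time restore retention of at least 21 days (Azure SQL Database allows short-term backup retention to be configured up to 35 days). The Python sketch below checks whether a requested restore point falls inside such a window. The function name and constant are illustrative assumptions, and the sketch ignores that the earliest real restore point also depends on when the database was created.

```python
from datetime import datetime, timedelta, timezone

# Three weeks, per the SALESDB requirement (illustrative constant).
RETENTION_DAYS = 21


def is_restorable(restore_point, now, retention_days=RETENTION_DAYS):
    """Return True if restore_point lies inside the retention window.

    Both arguments must be timezone-aware datetimes so they can be compared.
    """
    earliest = now - timedelta(days=retention_days)
    return earliest <= restore_point <= now


if __name__ == "__main__":
    now = datetime(2026, 2, 22, 12, 0, tzinfo=timezone.utc)
    print(is_restorable(now - timedelta(days=20, minutes=30), now))  # True
    print(is_restorable(now - timedelta(days=22), now))              # False
```

A retention setting shorter than 21 days (for example, the 7-day default) would make restore points in the third week fail this check.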

Which windowing function should you use to perform the streaming aggregation of the sales data?

- A . Sliding

- B . Hopping

- C . Session

- D . Tumbling

Which counter should you monitor for real-time processing to meet the technical requirements?

- A . SU% Utilization

- B . CPU% utilization

- C . Concurrent users

- D . Data Conversion Errors

Topic 4, Contoso Ltd Clothing Store

You need to design a data retention solution for the Twitter feed data records. The solution must meet the customer sentiment analytics requirements.

Which Azure Storage functionality should you include in the solution?

- A . time-based retention

- B . change feed

- C . lifecycle management

- D . soft delete
