Microsoft DP-203 Data Engineering on Microsoft Azure Online Training

Microsoft DP-203 Online Training

The questions for DP-203 were last updated at Feb 19,2026.

- Exam Code: DP-203

- Exam Name: Data Engineering on Microsoft Azure

- Certification Provider: Microsoft

- Latest update: Feb 19,2026

You need to design an Azure Synapse Analytics dedicated SQL pool that meets the following requirements:

✑ Can return an employee record from a given point in time.

✑ Maintains the latest employee information.

✑ Minimizes query complexity.

How should you model the employee data?

- A . as a temporal table

- B . as a SQL graph table

- C . as a degenerate dimension table

- D . as a Type 2 slowly changing dimension (SCD) table

You have an enterprise-wide Azure Data Lake Storage Gen2 account. The data lake is accessible only through an Azure virtual network named VNET1.

You are building a SQL pool in Azure Synapse that will use data from the data lake.

Your company has a sales team. All the members of the sales team are in an Azure Active Directory group named Sales. POSIX controls are used to assign the Sales group access to the files in the data lake.

You plan to load data to the SQL pool every hour.

You need to ensure that the SQL pool can load the sales data from the data lake.

Which three actions should you perform? Each correct answer presents part of the solution. NOTE: Each area selection is worth one point.

- A . Add the managed identity to the Sales group.

- B . Use the managed identity as the credentials for the data load process.

- C . Create a shared access signature (SAS).

- D . Add your Azure Active Directory (Azure AD) account to the Sales group.

- E . Use the snared access signature (SAS) as the credentials for the data load process.

- F . Create a managed identity.

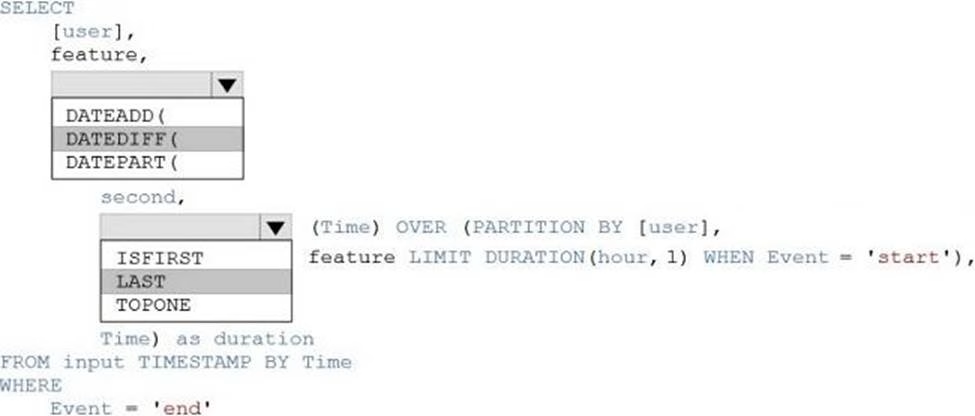

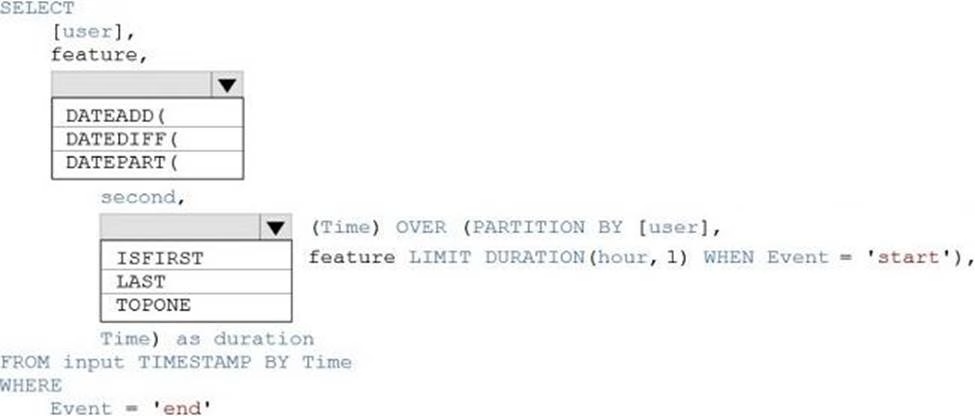

HOTSPOT

You are building an Azure Stream Analytics job to identify how much time a user spends interacting with a feature on a webpage.

The job receives events based on user actions on the webpage. Each row of data represents an event. Each event has a type of either ‘start’ or ‘end’.

You need to calculate the duration between start and end events.

How should you complete the query? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You are creating an Azure Data Factory data flow that will ingest data from a CSV file, cast columns to specified types of data, and insert the data into a table in an Azure Synapse Analytic dedicated SQL pool. The CSV file contains three columns named username, comment, and date.

The data flow already contains the following:

✑ A source transformation.

✑ A Derived Column transformation to set the appropriate types of data.

✑ A sink transformation to land the data in the pool.

You need to ensure that the data flow meets the following requirements:

✑ All valid rows must be written to the destination table.

✑ Truncation errors in the comment column must be avoided proactively.

✑ Any rows containing comment values that will cause truncation errors upon insert must be written to a file in blob storage.

Which two actions should you perform? Each correct answer presents part of the solution . NOTE: Each correct selection is worth one point.

- A . To the data flow, add a sink transformation to write the rows to a file in blob storage.

- B . To the data flow, add a Conditional Split transformation to separate the rows that will cause truncation errors.

- C . To the data flow, add a filter transformation to filter out rows that will cause truncation errors.

- D . Add a select transformation to select only the rows that will cause truncation errors.

You are creating an Azure Data Factory data flow that will ingest data from a CSV file, cast columns to specified types of data, and insert the data into a table in an Azure Synapse Analytic dedicated SQL pool. The CSV file contains three columns named username, comment, and date.

The data flow already contains the following:

✑ A source transformation.

✑ A Derived Column transformation to set the appropriate types of data.

✑ A sink transformation to land the data in the pool.

You need to ensure that the data flow meets the following requirements:

✑ All valid rows must be written to the destination table.

✑ Truncation errors in the comment column must be avoided proactively.

✑ Any rows containing comment values that will cause truncation errors upon insert must be written to a file in blob storage.

Which two actions should you perform? Each correct answer presents part of the solution . NOTE: Each correct selection is worth one point.

- A . To the data flow, add a sink transformation to write the rows to a file in blob storage.

- B . To the data flow, add a Conditional Split transformation to separate the rows that will cause truncation errors.

- C . To the data flow, add a filter transformation to filter out rows that will cause truncation errors.

- D . Add a select transformation to select only the rows that will cause truncation errors.

You are creating an Azure Data Factory data flow that will ingest data from a CSV file, cast columns to specified types of data, and insert the data into a table in an Azure Synapse Analytic dedicated SQL pool. The CSV file contains three columns named username, comment, and date.

The data flow already contains the following:

✑ A source transformation.

✑ A Derived Column transformation to set the appropriate types of data.

✑ A sink transformation to land the data in the pool.

You need to ensure that the data flow meets the following requirements:

✑ All valid rows must be written to the destination table.

✑ Truncation errors in the comment column must be avoided proactively.

✑ Any rows containing comment values that will cause truncation errors upon insert must be written to a file in blob storage.

Which two actions should you perform? Each correct answer presents part of the solution . NOTE: Each correct selection is worth one point.

- A . To the data flow, add a sink transformation to write the rows to a file in blob storage.

- B . To the data flow, add a Conditional Split transformation to separate the rows that will cause truncation errors.

- C . To the data flow, add a filter transformation to filter out rows that will cause truncation errors.

- D . Add a select transformation to select only the rows that will cause truncation errors.

You are creating an Azure Data Factory data flow that will ingest data from a CSV file, cast columns to specified types of data, and insert the data into a table in an Azure Synapse Analytic dedicated SQL pool. The CSV file contains three columns named username, comment, and date.

The data flow already contains the following:

✑ A source transformation.

✑ A Derived Column transformation to set the appropriate types of data.

✑ A sink transformation to land the data in the pool.

You need to ensure that the data flow meets the following requirements:

✑ All valid rows must be written to the destination table.

✑ Truncation errors in the comment column must be avoided proactively.

✑ Any rows containing comment values that will cause truncation errors upon insert must be written to a file in blob storage.

Which two actions should you perform? Each correct answer presents part of the solution . NOTE: Each correct selection is worth one point.

- A . To the data flow, add a sink transformation to write the rows to a file in blob storage.

- B . To the data flow, add a Conditional Split transformation to separate the rows that will cause truncation errors.

- C . To the data flow, add a filter transformation to filter out rows that will cause truncation errors.

- D . Add a select transformation to select only the rows that will cause truncation errors.

You are creating an Azure Data Factory data flow that will ingest data from a CSV file, cast columns to specified types of data, and insert the data into a table in an Azure Synapse Analytic dedicated SQL pool. The CSV file contains three columns named username, comment, and date.

The data flow already contains the following:

✑ A source transformation.

✑ A Derived Column transformation to set the appropriate types of data.

✑ A sink transformation to land the data in the pool.

You need to ensure that the data flow meets the following requirements:

✑ All valid rows must be written to the destination table.

✑ Truncation errors in the comment column must be avoided proactively.

✑ Any rows containing comment values that will cause truncation errors upon insert must be written to a file in blob storage.

Which two actions should you perform? Each correct answer presents part of the solution . NOTE: Each correct selection is worth one point.

- A . To the data flow, add a sink transformation to write the rows to a file in blob storage.

- B . To the data flow, add a Conditional Split transformation to separate the rows that will cause truncation errors.

- C . To the data flow, add a filter transformation to filter out rows that will cause truncation errors.

- D . Add a select transformation to select only the rows that will cause truncation errors.

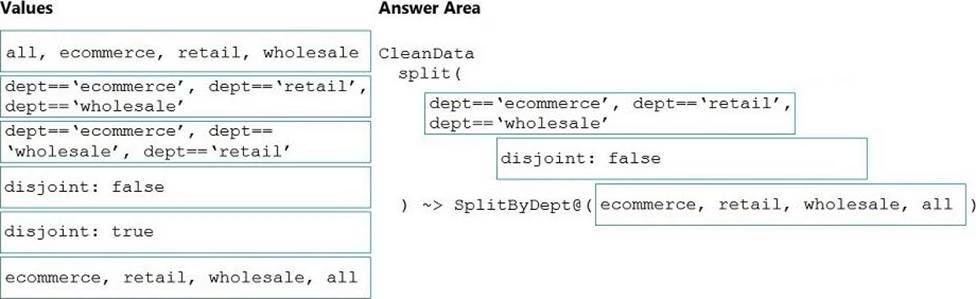

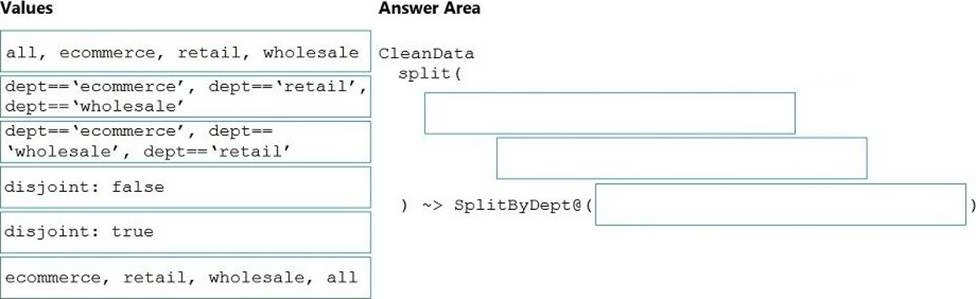

DRAG DROP

You need to create an Azure Data Factory pipeline to process data for the following three departments at your company: Ecommerce, retail, and wholesale. The solution must ensure that data can also be processed for the entire company.

How should you complete the Data Factory data flow script? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

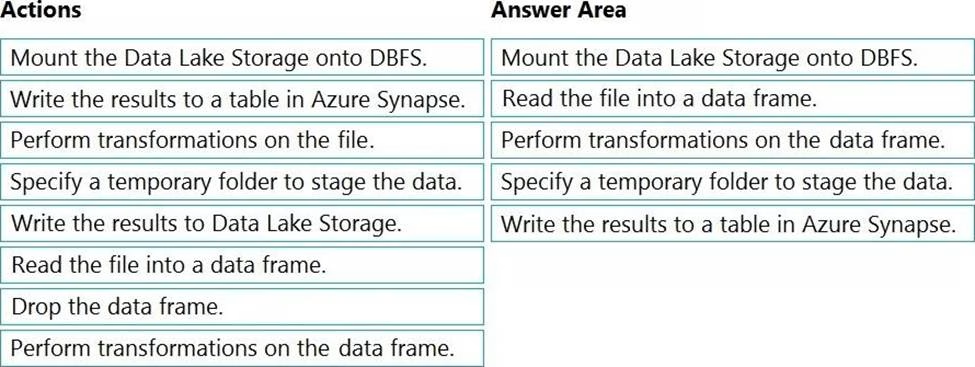

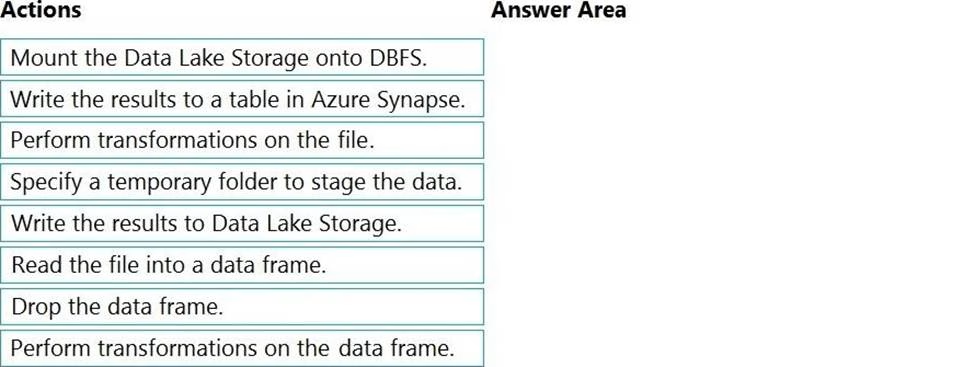

DRAG DROP

You have an Azure Data Lake Storage Gen2 account that contains a JSON file for customers. The file contains two attributes named FirstName and LastName.

You need to copy the data from the JSON file to an Azure Synapse Analytics table by using Azure Databricks. A new column must be created that concatenates the FirstName and LastName values.

You create the following components:

✑ A destination table in Azure Synapse

✑ An Azure Blob storage container

✑ A service principal

Which five actions should you perform in sequence next in is Databricks notebook? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Latest DP-203 Dumps Valid Version with 116 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund