Microsoft DP-203 Data Engineering on Microsoft Azure Online Training

The questions for DP-203 were last updated on Feb 20, 2026.

- Exam Code: DP-203

- Exam Name: Data Engineering on Microsoft Azure

- Certification Provider: Microsoft

- Latest update: Feb 20, 2026

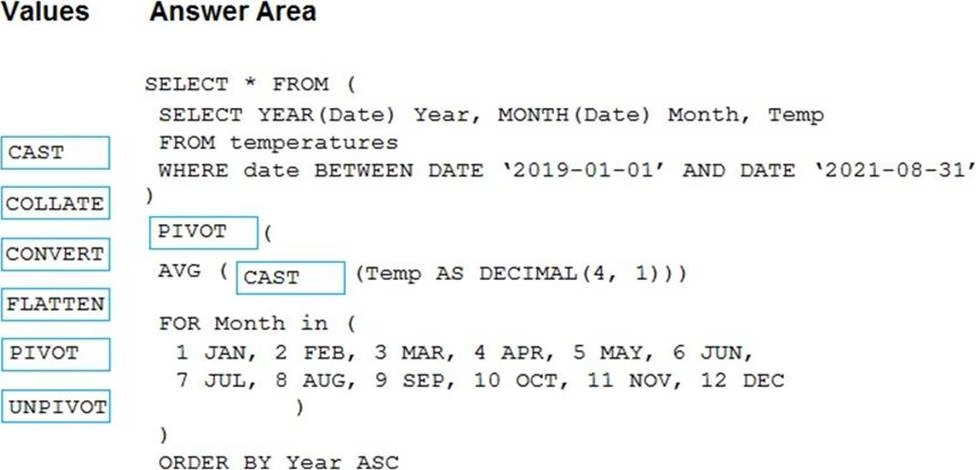

DRAG DROP

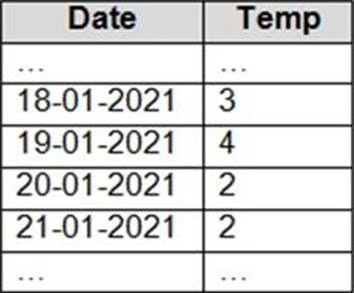

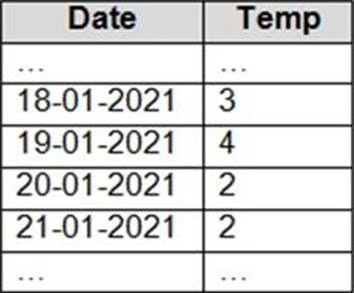

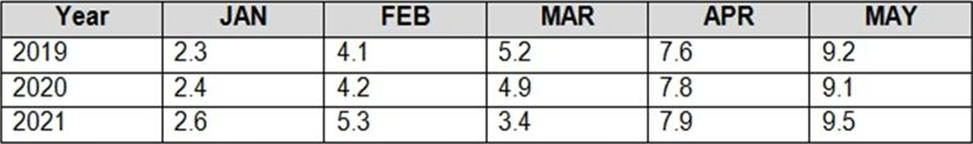

You have an Apache Spark DataFrame named temperatures.

A sample of the data is shown in the following table.

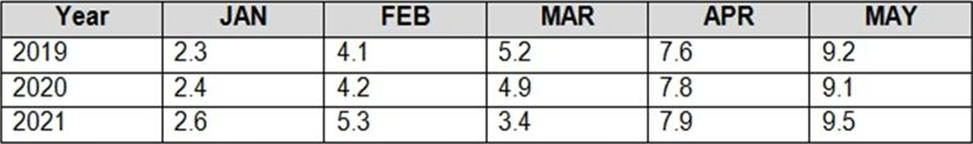

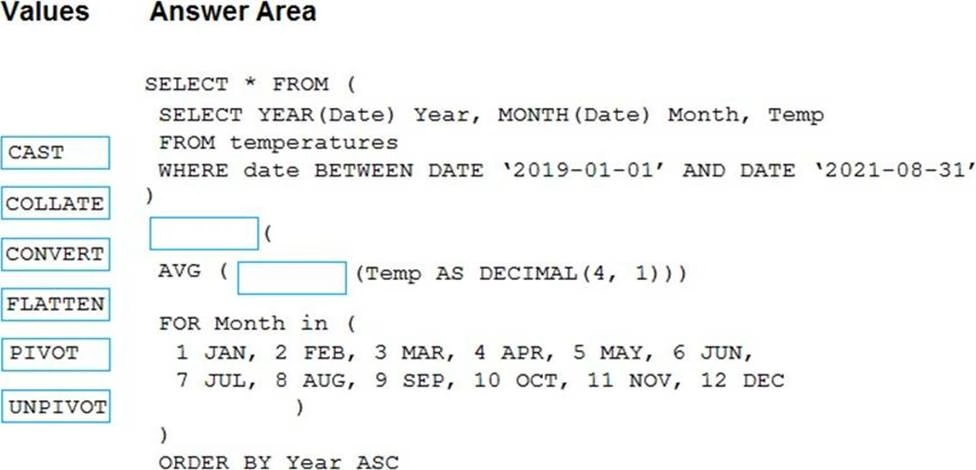

You need to produce the following table by using a Spark SQL query.

How should you complete the query? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

You have a C# application that processes data from an Azure IoT hub and performs complex transformations.

You need to replace the application with a real-time solution. The solution must reuse as much code as possible from the existing application.

- A . Azure Databricks

- B . Azure Event Grid

- C . Azure Stream Analytics

- D . Azure Data Factory

You have several Azure Data Factory pipelines that contain a mix of the following types of activities.

* Wrangling data flow

* Notebook

* Copy

* Jar

Which two Azure services should you use to debug the activities? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Azure HDInsight

- B . Azure Databricks

- C . Azure Machine Learning

- D . Azure Data Factory

- E . Azure Synapse Analytics

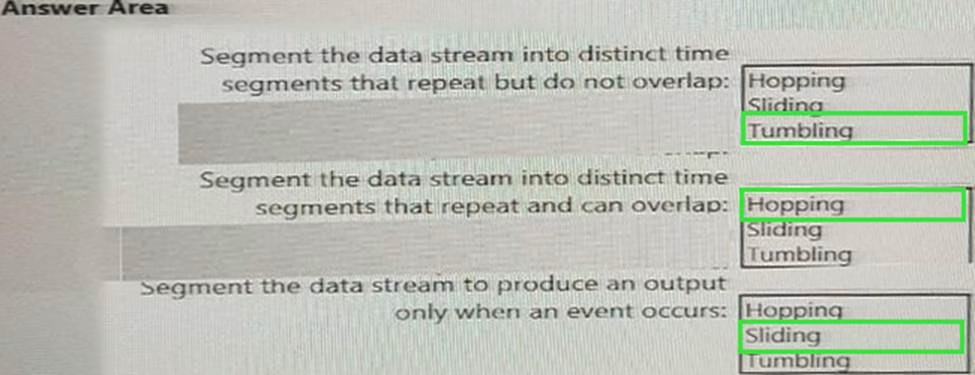

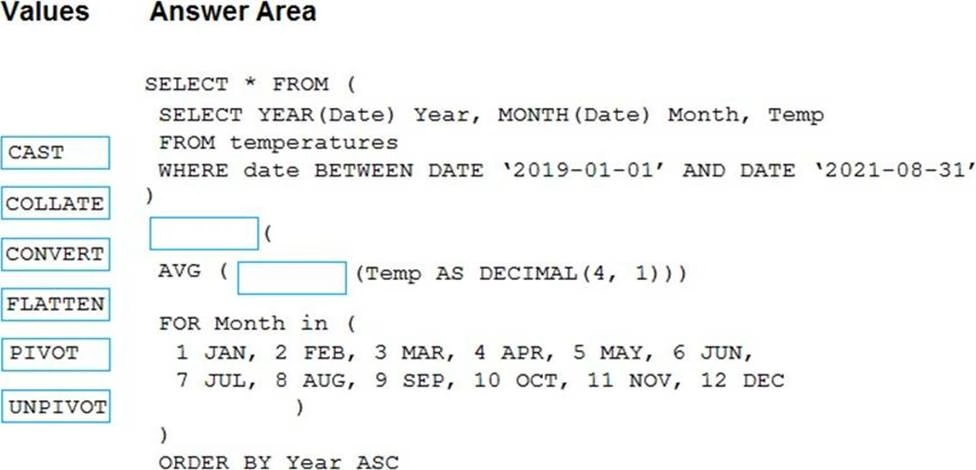

HOTSPOT

You are implementing Azure Stream Analytics windowing functions.

Which windowing function should you use for each requirement? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You use Azure Stream Analytics to receive Twitter data from Azure Event Hubs and to output the data to an Azure Blob storage account.

You need to output the count of tweets during the last five minutes every five minutes. Each tweet must only be counted once.

Which windowing function should you use?

- A . a five-minute Session window

- B . a five-minute Sliding window

- C . a five-minute Tumbling window

- D . a five-minute Hopping window that has one-minute hop
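The difference between the window types in these options can be illustrated with a small sketch. It is plain Python, not the Stream Analytics query language, and the function names are hypothetical. It assumes windows are aligned to the Unix epoch and include their start time; boundary handling in the actual service may differ.

```python
from collections import Counter
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=5)
EPOCH = datetime(1970, 1, 1)


def window_start(ts: datetime, size: timedelta = WINDOW) -> datetime:
    """Return the start of the epoch-aligned window of length `size` containing ts."""
    index = (ts - EPOCH) // size  # timedelta // timedelta yields an int
    return EPOCH + index * size


def count_per_tumbling_window(timestamps):
    """Count events in fixed, non-overlapping windows: each event lands in exactly one."""
    return Counter(window_start(ts) for ts in timestamps)


def hopping_window_starts(ts: datetime,
                          size: timedelta = WINDOW,
                          hop: timedelta = timedelta(minutes=1)):
    """List the starts of all overlapping windows (length `size`, spaced `hop`) containing ts."""
    latest = window_start(ts, hop)
    return [latest - k * hop for k in range(size // hop)]


if __name__ == "__main__":
    events = [
        datetime(2026, 1, 1, 12, 0, 30),
        datetime(2026, 1, 1, 12, 4, 59),
        datetime(2026, 1, 1, 12, 5, 0),
    ]
    for start, count in sorted(count_per_tumbling_window(events).items()):
        print(start, count)
    # With a one-minute hop, a single event belongs to several windows.
    print(len(hopping_window_starts(events[0])))
```

In this sketch, non-overlapping windows assign every event to a single bucket, while overlapping windows report the same event more than once.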

You have an Azure Stream Analytics query. The query returns a result set that contains 10,000 distinct values for a column named clusterID.

You monitor the Stream Analytics job and discover high latency.

You need to reduce the latency.

Which two actions should you perform? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

- A . Add a pass-through query.

- B . Add a temporal analytic function.

- C . Scale out the query by using PARTITION BY.

- D . Convert the query to a reference query.

- E . Increase the number of streaming units.

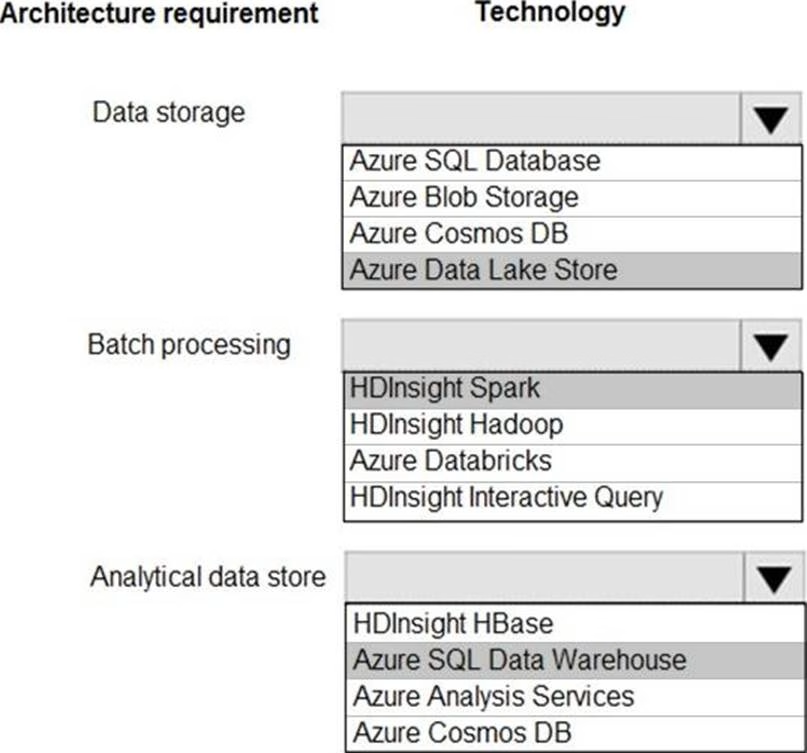

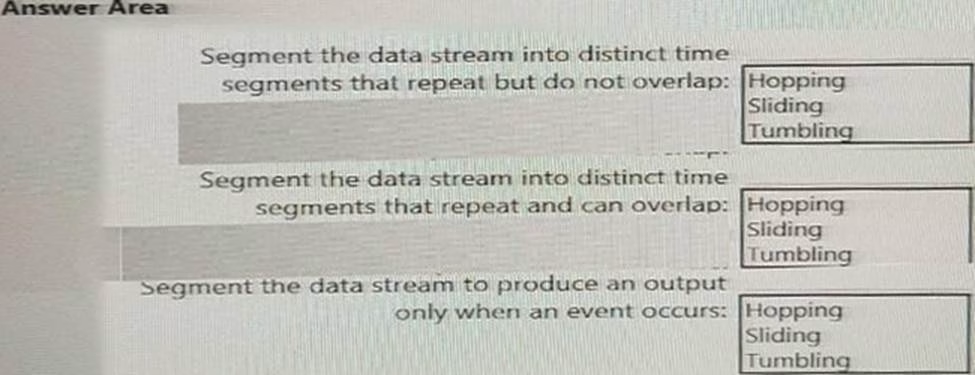

HOTSPOT

You are developing a solution using a Lambda architecture on Microsoft Azure.

The data at rest layer must meet the following requirements:

Data storage:

• Serve as a repository for high volumes of large files in various formats.

• Implement optimized storage for big data analytics workloads.

• Ensure that data can be organized using a hierarchical structure.

Batch processing:

• Use a managed solution for in-memory computation processing.

• Natively support Scala, Python, and R programming languages.

• Provide the ability to resize and terminate the cluster automatically.

Analytical data store:

• Support parallel processing.

• Use columnar storage.

• Support SQL-based languages.

You need to identify the correct technologies to build the Lambda architecture.

Which technologies should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You are designing a solution that will copy Parquet files stored in an Azure Blob storage account to an Azure Data Lake Storage Gen2 account.

The data will be loaded daily to the data lake and will use a folder structure of {Year}/{Month}/{Day}/.

You need to design a daily Azure Data Factory data load to minimize the data transfer between the two accounts.

Which two configurations should you include in the design? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Delete the files in the destination before loading new data.

- B . Filter by the last modified date of the source files.

- C . Delete the source files after they are copied.

- D . Specify a file naming pattern for the destination.
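To make the scenario concrete, the {Year}/{Month}/{Day}/ folder layout and a last-modified filter can be sketched in plain Python. This is an illustration only, not Azure Data Factory configuration: the function names are hypothetical, and zero-padded month and day values and UTC timestamps are assumptions that the question does not state.

```python
from datetime import date, datetime, timedelta, timezone


def daily_folder(day: date) -> str:
    """Build the {Year}/{Month}/{Day}/ folder path (zero-padding is an assumption)."""
    return f"{day.year:04d}/{day.month:02d}/{day.day:02d}/"


def modified_on(files, day: date):
    """Keep only the file names whose last-modified time falls on the given UTC day.

    `files` is an iterable of (name, last_modified) pairs, where last_modified
    is a timezone-aware datetime.
    """
    start = datetime(day.year, day.month, day.day, tzinfo=timezone.utc)
    end = start + timedelta(days=1)
    return [name for name, modified in files if start <= modified < end]


if __name__ == "__main__":
    load_day = date(2026, 2, 20)
    source_files = [
        ("a.parquet", datetime(2026, 2, 20, 3, 0, tzinfo=timezone.utc)),
        ("b.parquet", datetime(2026, 2, 19, 23, 59, tzinfo=timezone.utc)),
    ]
    print(daily_folder(load_day))
    print(modified_on(source_files, load_day))
```

Selecting only the files modified on the load day means that files already copied on earlier days are not transferred again.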

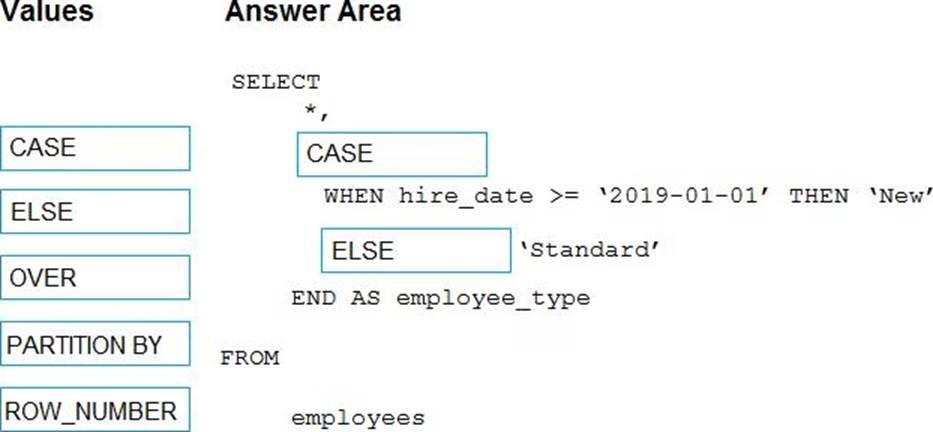

DRAG DROP

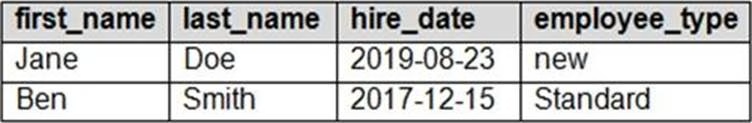

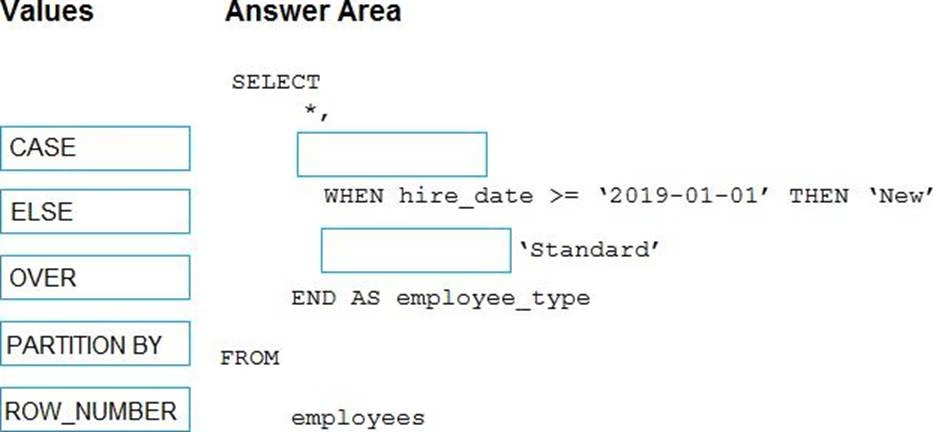

You have the following table named Employees.

You need to calculate the employee_type value based on the hire_date value.

How should you complete the Transact-SQL statement? To answer, drag the appropriate values to the correct targets. Each value may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.
