Microsoft DP-203 Data Engineering on Microsoft Azure Online Training

Microsoft DP-203 Online Training

The questions for DP-203 were last updated on Feb 20, 2026.

- Exam Code: DP-203

- Exam Name: Data Engineering on Microsoft Azure

- Certification Provider: Microsoft

- Latest update: Feb 20, 2026

HOTSPOT

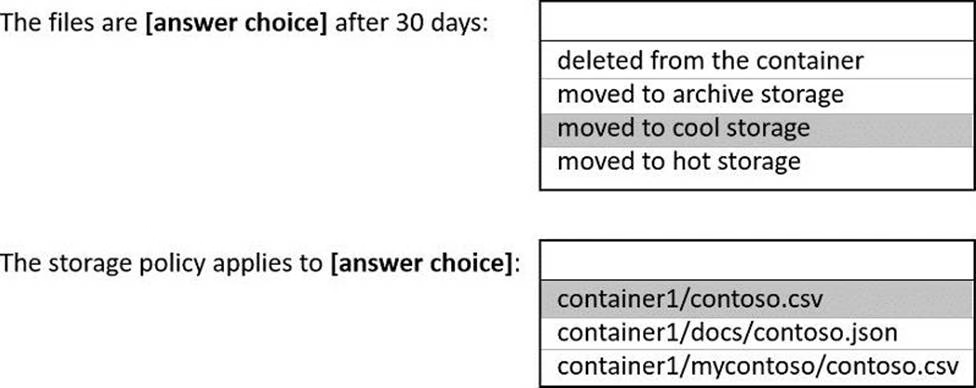

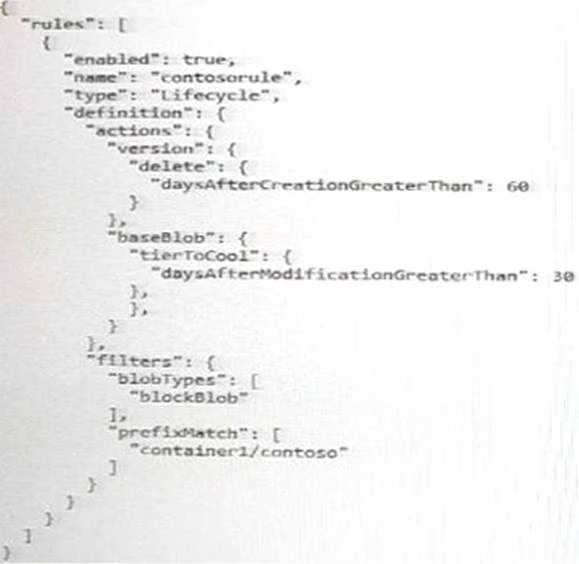

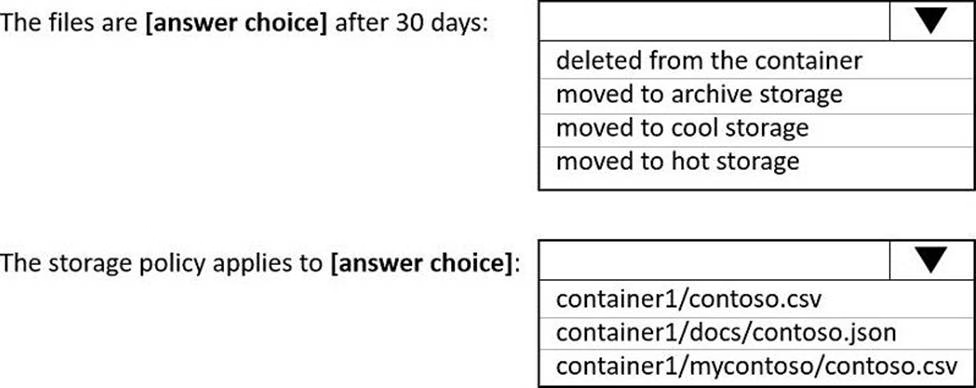

You store files in an Azure Data Lake Storage Gen2 container.

The container has the storage policy shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic. NOTE: Each correct selection is worth one point.

You plan to ingest streaming social media data by using Azure Stream Analytics. The data will be stored in files in Azure Data Lake Storage, and then consumed by using Azure Databricks and PolyBase in Azure Synapse Analytics.

You need to recommend a Stream Analytics data output format to ensure that the queries from Databricks and PolyBase against the files encounter the fewest possible errors. The solution must ensure that the files can be queried quickly and that the data type information is retained.

What should you recommend?

- A . Parquet

- B . Avro

- C . CSV

- D . JSON

You have an Azure Data Lake Storage Gen2 container that contains 100 TB of data.

You need to ensure that the data in the container is available for read workloads in a secondary region if an outage occurs in the primary region. The solution must minimize costs.

Which type of data redundancy should you use?

- A . zone-redundant storage (ZRS)

- B . read-access geo-redundant storage (RA-GRS)

- C . locally-redundant storage (LRS)

- D . geo-redundant storage (GRS)

You have an Azure Synapse Analytics dedicated SQL pool named Pool1. Pool1 contains a partitioned fact table named dbo.Sales and a staging table named stg.Sales that has matching table and partition definitions.

You need to overwrite the content of the first partition in dbo.Sales with the content of the same partition in stg.Sales. The solution must minimize load times.

What should you do?

- A . Switch the first partition from dbo.Sales to stg.Sales.

- B . Switch the first partition from stg.Sales to dbo.Sales.

- C . Update dbo.Sales from stg.Sales.

- D . Insert the data from stg.Sales into dbo.Sales.

You are designing a partition strategy for a fact table in an Azure Synapse Analytics dedicated SQL pool.

The table has the following specifications:

• Contain sales data for 20,000 products.

• Use hash distribution on a column named ProductID.

• Contain 2.4 billion records for the years 2019 and 2020.

Which number of partition ranges provides optimal compression and performance of the clustered columnstore index?

- A . 40

- B . 240

- C . 400

- D . 2,400
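The arithmetic behind this kind of sizing question can be sketched as follows. This is a minimal illustration, not an official formula: it assumes the documented behavior that a dedicated SQL pool spreads every table across 60 distributions, and the Microsoft guidance that a clustered columnstore index compresses best with roughly 1 million rows per distribution per partition. The function name `max_partition_count` is hypothetical.

```python
# Sketch: how many partitions keep about 1 million rows in every
# distribution/partition combination of a clustered columnstore table.

DISTRIBUTIONS = 60  # a dedicated SQL pool always uses 60 distributions
TARGET_ROWS = 1_000_000  # assumed guidance: ~1M rows per distribution per partition


def max_partition_count(total_rows: int) -> int:
    """Return the largest partition count that still leaves at least
    TARGET_ROWS rows per distribution in each partition (hypothetical helper)."""
    return total_rows // (DISTRIBUTIONS * TARGET_ROWS)


if __name__ == "__main__":
    # 2.4 billion rows, as in the scenario above.
    print(max_partition_count(2_400_000_000))
```

Under these assumptions, any partition count above the computed value leaves fewer than 1 million rows per distribution in each partition, which tends to produce smaller, less efficient rowgroups.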

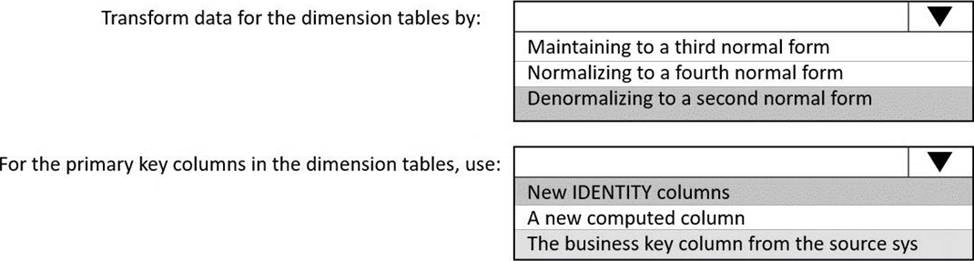

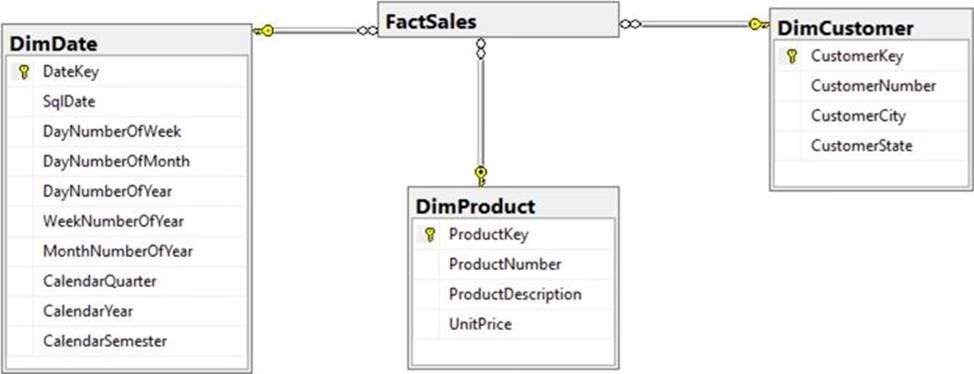

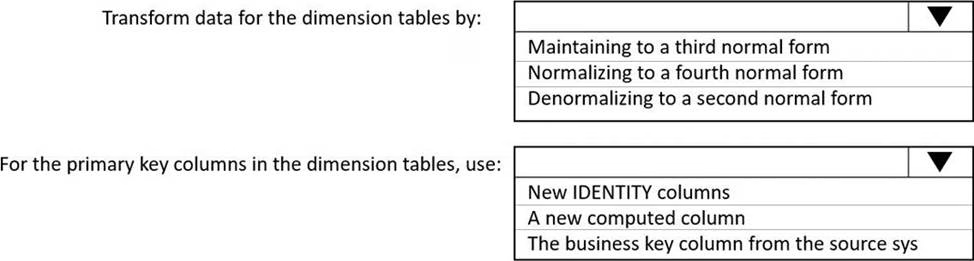

HOTSPOT

You have a Microsoft SQL Server database that uses a third normal form schema.

You plan to migrate the data in the database to a star schema in an Azure Synapse Analytics dedicated SQL pool.

You need to design the dimension tables. The solution must optimize read operations.

What should you include in the solution? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You have an Azure Synapse Analytics serverless SQL pool named Pool1 and an Azure Data Lake Storage Gen2 account named storage1. The AllowBlobPublicAccess property is disabled for storage1.

You need to create an external data source that can be used by Azure Active Directory (Azure AD) users to access storage1 from Pool1.

What should you create first?

- A . an external resource pool

- B . a remote service binding

- C . database scoped credentials

- D . an external library

You plan to implement an Azure Data Lake Storage Gen2 container that will contain CSV files. The size of the files will vary based on the number of events that occur per hour. File sizes range from 4 KB to 5 GB.

You need to ensure that the files stored in the container are optimized for batch processing.

What should you do?

- A . Compress the files.

- B . Merge the files.

- C . Convert the files to JSON.

- D . Convert the files to Avro.
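For readers weighing the options, the following sketch shows what merging many small CSV files into one larger file might look like. It is an illustration only: the helper name `merge_csv` and the sample columns are hypothetical, it assumes every input shares the same header row, and it works on in-memory streams rather than on Azure Data Lake Storage.

```python
import csv
import io
from typing import Iterable, TextIO


def merge_csv(sources: Iterable[TextIO], target: TextIO) -> int:
    """Concatenate CSV streams that share one header (hypothetical helper).

    The header is written once; the return value is the number of data rows.
    """
    writer = csv.writer(target, lineterminator="\n")
    expected_header = None
    rows_written = 0
    for source in sources:
        reader = csv.reader(source)
        header = next(reader, None)
        if header is None:
            continue  # skip empty inputs
        if expected_header is None:
            expected_header = header
            writer.writerow(header)
        elif header != expected_header:
            raise ValueError("CSV headers differ; refusing to merge")
        for row in reader:
            writer.writerow(row)
            rows_written += 1
    return rows_written


# Two small hourly files (made-up sample data) merged into one stream.
hour_1 = io.StringIO("event_id,value\n1,a\n")
hour_2 = io.StringIO("event_id,value\n2,b\n3,c\n")
merged = io.StringIO()
count = merge_csv([hour_1, hour_2], merged)
print(count)
print(merged.getvalue())
```

Fewer, larger files generally reduce the per-file overhead that batch engines pay for listing and opening each file.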

You have an Azure Data Factory instance named DF1 that contains a pipeline named PL1. PL1 includes a tumbling window trigger.

You create five clones of PL1. You configure each cloned pipeline to use a different data source.

You need to ensure that the execution schedules of the cloned pipelines match the execution schedule of PL1.

What should you do?

- A . Add a new trigger to each cloned pipeline.

- B . Associate each cloned pipeline to an existing trigger.

- C . Create a tumbling window trigger dependency for the trigger of PL1.

- D . Modify the Concurrency setting of each pipeline.

You are planning a streaming data solution that will use Azure Databricks. The solution will stream sales transaction data from an online store.

The solution has the following specifications:

* The output data will contain items purchased, quantity, line total sales amount, and line total tax amount.

* Line total sales amount and line total tax amount will be aggregated in Databricks.

* Sales transactions will never be updated. Instead, new rows will be added to adjust a sale.

You need to recommend an output mode for the dataset that will be processed by using Structured Streaming. The solution must minimize duplicate data.

What should you recommend?

- A . Append

- B . Update

- C . Complete
