Microsoft DP-203 Data Engineering on Microsoft Azure Online Training


The questions for DP-203 were last updated on Feb 20, 2026.

- Exam Code: DP-203

- Exam Name: Data Engineering on Microsoft Azure

- Certification Provider: Microsoft

- Latest update: Feb 20, 2026

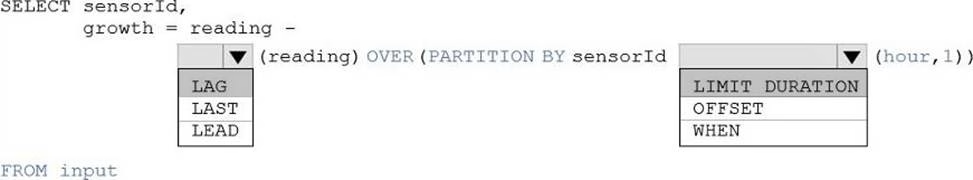

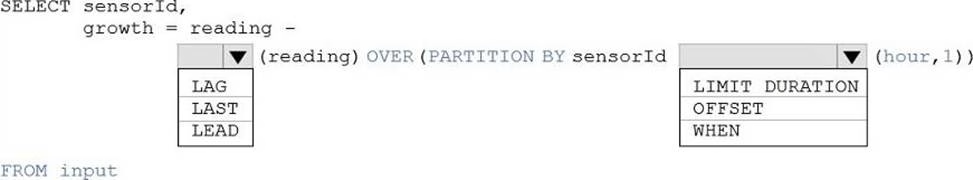

HOTSPOT

You are building an Azure Stream Analytics query that will receive input data from Azure IoT Hub and write the results to Azure Blob storage.

You need to calculate the difference in readings per sensor per hour.

How should you complete the query? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
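To make the requirement concrete, the following is a minimal Python sketch of one possible reading of "difference in readings per sensor per hour": each reading is compared with the previous reading from the same sensor, provided that earlier reading arrived within the last hour. It is not the Stream Analytics query itself. The event layout `(sensor_id, timestamp, reading)` and the function name `reading_deltas` are assumptions made for illustration.

```python
from datetime import datetime, timedelta


def reading_deltas(events, lookback=timedelta(hours=1)):
    """Return (sensor_id, timestamp, delta) for time-ordered events.

    delta is the current reading minus the previous reading from the same
    sensor, or None when no earlier reading falls inside the lookback.
    """
    last_seen = {}  # sensor_id -> (timestamp, reading)
    results = []
    for sensor_id, ts, reading in events:
        previous = last_seen.get(sensor_id)
        if previous is not None and ts - previous[0] <= lookback:
            delta = reading - previous[1]
        else:
            delta = None
        results.append((sensor_id, ts, delta))
        last_seen[sensor_id] = (ts, reading)
    return results


# Hypothetical sample data (not from the source).
sample_events = [
    ("s1", datetime(2026, 1, 1, 10, 0), 20.0),
    ("s2", datetime(2026, 1, 1, 10, 5), 5.0),
    ("s1", datetime(2026, 1, 1, 10, 30), 23.5),
    ("s1", datetime(2026, 1, 1, 12, 0), 25.0),  # previous s1 reading is older than one hour
]
sample_deltas = [delta for _, _, delta in reading_deltas(sample_events)]
```

The per-sensor dictionary plays the role of partitioning by sensor, and the `lookback` check limits how far back a previous reading may be.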

You need to schedule an Azure Data Factory pipeline to execute when a new file arrives in an Azure Data Lake Storage Gen2 container.

Which type of trigger should you use?

- A . on-demand

- B . tumbling window

- C . schedule

- D . storage event

You have two Azure Data Factory instances named ADFdev and ADFprod. ADFdev connects to an Azure DevOps Git repository.

You publish changes from the main branch of the Git repository to ADFdev.

You need to deploy the artifacts from ADFdev to ADFprod.

What should you do first?

- A . From ADFdev, modify the Git configuration.

- B . From ADFdev, create a linked service.

- C . From Azure DevOps, create a release pipeline.

- D . From Azure DevOps, update the main branch.

You are developing a solution that will stream to Azure Stream Analytics. The solution will have both streaming data and reference data.

Which input type should you use for the reference data?

- A . Azure Cosmos DB

- B . Azure Blob storage

- C . Azure IoT Hub

- D . Azure Event Hubs

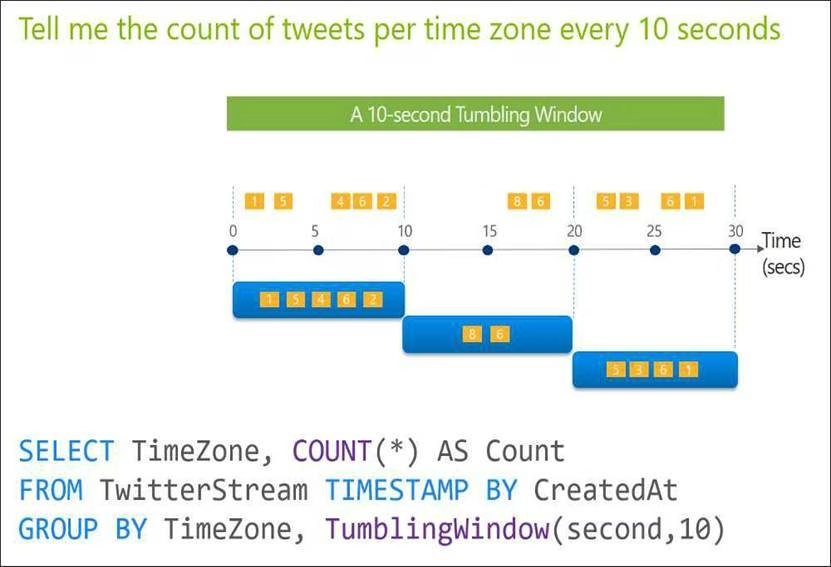

You are designing an Azure Stream Analytics job to process incoming events from sensors in retail environments.

You need to process the events to produce a running average of shopper counts during the previous 15 minutes, calculated at five-minute intervals.

Which type of window should you use?

- A . snapshot

- B . tumbling

- C . hopping

- D . sliding
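The requirement above, a 15-minute average that is re-evaluated every five minutes, can be sketched in plain Python to show how consecutive windows overlap. This is an illustration under assumptions, not a Stream Analytics job: the event layout `(timestamp, shopper_count)` and the function name `windowed_averages` are hypothetical.

```python
from datetime import datetime, timedelta


def windowed_averages(events, start, end,
                      size=timedelta(minutes=15),
                      step=timedelta(minutes=5)):
    """Return [(window_end, average_or_None)] for each step from start to end.

    Each window covers the half-open interval (window_end - size, window_end].
    """
    results = []
    window_end = start
    while window_end <= end:
        window_start = window_end - size
        counts = [count for ts, count in events
                  if window_start < ts <= window_end]
        average = sum(counts) / len(counts) if counts else None
        results.append((window_end, average))
        window_end += step
    return results


# Hypothetical sample data (not from the source).
shopper_events = [
    (datetime(2026, 1, 1, 10, 1), 10),
    (datetime(2026, 1, 1, 10, 7), 20),
    (datetime(2026, 1, 1, 10, 13), 30),
    (datetime(2026, 1, 1, 10, 18), 40),
]
averages = windowed_averages(
    shopper_events,
    start=datetime(2026, 1, 1, 10, 15),
    end=datetime(2026, 1, 1, 10, 20),
)
```

In this sample, the events at 10:07 and 10:13 contribute to both results, because the window length (15 minutes) is longer than the interval between evaluations (5 minutes).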

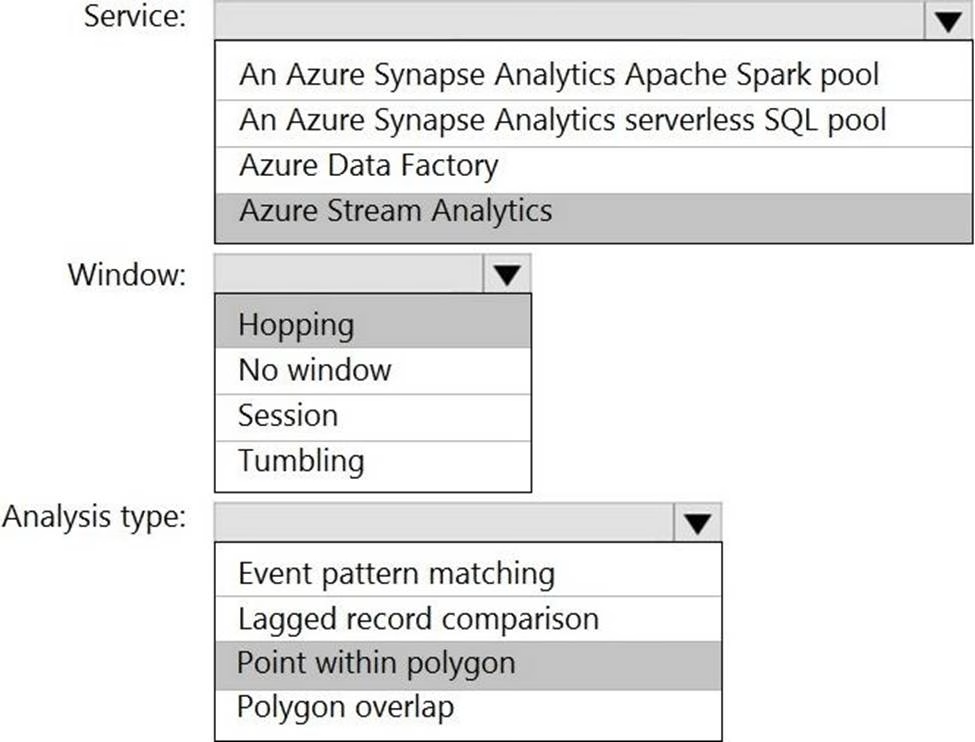

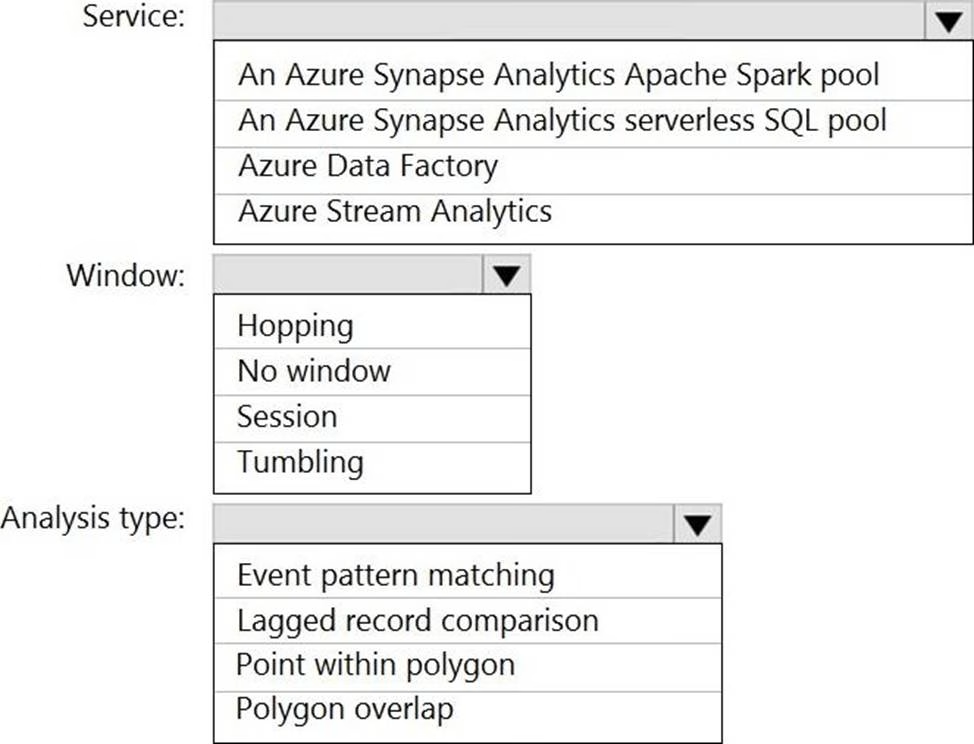

HOTSPOT

You are designing a monitoring solution for a fleet of 500 vehicles. Each vehicle has a GPS tracking device that sends data to an Azure event hub once per minute.

You have a CSV file in an Azure Data Lake Storage Gen2 container. The file maintains the expected geographical area in which each vehicle should be.

You need to ensure that when a GPS position is outside the expected area, a message is added to another event hub for processing within 30 seconds. The solution must minimize cost.

What should you include in the solution? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
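The core check in this scenario, comparing each GPS position against a per-vehicle expected area loaded from a reference file, can be sketched in Python as follows. The source does not describe the CSV columns, so the bounding-box layout (`min_lat`, `max_lat`, `min_lon`, `max_lon`) and the function names are assumptions for illustration only. The sketch does not model event hubs or the 30-second latency target.

```python
import csv
import io

# Hypothetical reference-file layout; the real CSV columns are not given in the source.
REFERENCE_CSV = """vehicle_id,min_lat,max_lat,min_lon,max_lon
v1,47.0,48.0,-123.0,-122.0
v2,40.0,41.0,-75.0,-74.0
"""


def load_expected_areas(text):
    """Parse the reference CSV into {vehicle_id: (min_lat, max_lat, min_lon, max_lon)}."""
    areas = {}
    for row in csv.DictReader(io.StringIO(text)):
        areas[row["vehicle_id"]] = (
            float(row["min_lat"]), float(row["max_lat"]),
            float(row["min_lon"]), float(row["max_lon"]),
        )
    return areas


def out_of_area(positions, areas):
    """Return the (vehicle_id, lat, lon) positions that fall outside the expected area."""
    alerts = []
    for vehicle_id, lat, lon in positions:
        box = areas.get(vehicle_id)
        if box is None:
            continue  # no reference row for this vehicle; skipped in this sketch
        min_lat, max_lat, min_lon, max_lon = box
        inside = min_lat <= lat <= max_lat and min_lon <= lon <= max_lon
        if not inside:
            alerts.append((vehicle_id, lat, lon))
    return alerts


# Hypothetical sample positions (not from the source).
expected_areas = load_expected_areas(REFERENCE_CSV)
alerts = out_of_area(
    [("v1", 47.6, -122.3), ("v2", 42.0, -74.5)],
    expected_areas,
)
```

Loading the reference file once into a dictionary mirrors the idea of joining a fast-moving stream against slowly changing reference data: each incoming position needs only a lookup, not a file read.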

You are designing an Azure Databricks table. The table will ingest an average of 20 million streaming events per day.

You need to persist the events in the table for use in incremental load pipeline jobs in Azure Databricks. The solution must minimize storage costs and incremental load times.

What should you include in the solution?

- A . Partition by DateTime fields.

- B . Sink to Azure Queue storage.

- C . Include a watermark column.

- D . Use a JSON format for physical data storage.

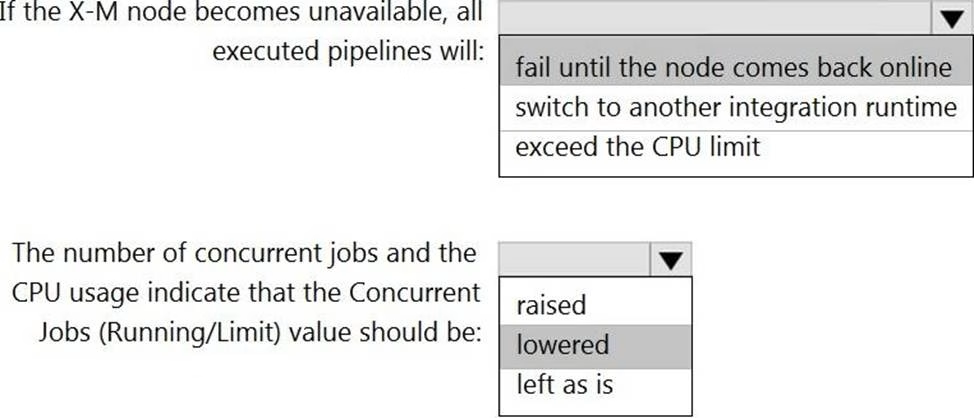

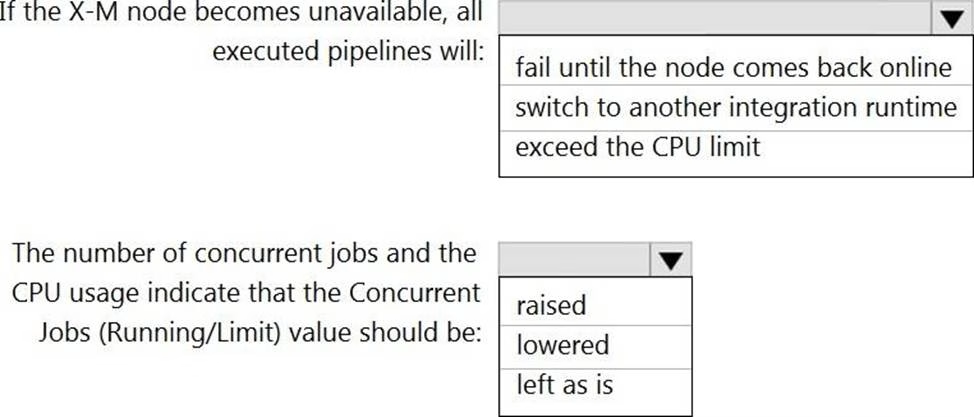

HOTSPOT

You have a self-hosted integration runtime in Azure Data Factory.

The current status of the integration runtime has the following configurations:

✑ Status: Running

✑ Type: Self-Hosted

✑ Version: 4.4.7292.1

✑ Running / Registered Node(s): 1/1

✑ High Availability Enabled: False

✑ Linked Count: 0

✑ Queue Length: 0

✑ Average Queue Duration: 0.00s

The integration runtime has the following node details:

✑ Name: X-M

✑ Status: Running

✑ Version: 4.4.7292.1

✑ Available Memory: 7697MB

✑ CPU Utilization: 6%

✑ Network (In/Out): 1.21KBps/0.83KBps

✑ Concurrent Jobs (Running/Limit): 2/14

✑ Role: Dispatcher/Worker

✑ Credential Status: In Sync

Use the drop-down menus to select the answer choice that completes each statement based on the information presented. NOTE: Each correct selection is worth one point.

You have an Azure Databricks workspace named workspace1 in the Standard pricing tier.

You need to configure workspace1 to support autoscaling all-purpose clusters.

The solution must meet the following requirements:

✑ Automatically scale down workers when the cluster is underutilized for three minutes.

✑ Minimize the time it takes to scale to the maximum number of workers.

✑ Minimize costs.

What should you do first?

- A . Enable container services for workspace1.

- B . Upgrade workspace1 to the Premium pricing tier.

- C . Set Cluster Mode to High Concurrency.

- D . Create a cluster policy in workspace1.
