Microsoft DP-203 Data Engineering on Microsoft Azure Online Training

The questions for DP-203 were last updated on Feb 21, 2026.

- Exam Code: DP-203

- Exam Name: Data Engineering on Microsoft Azure

- Certification Provider: Microsoft

- Latest update: Feb 21, 2026

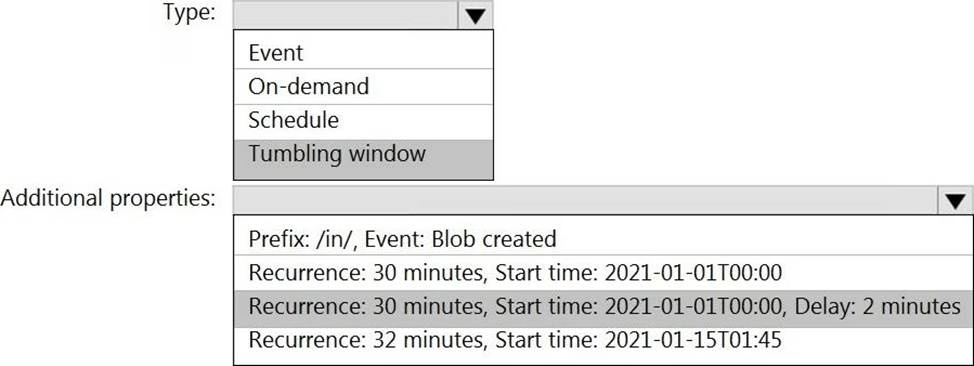

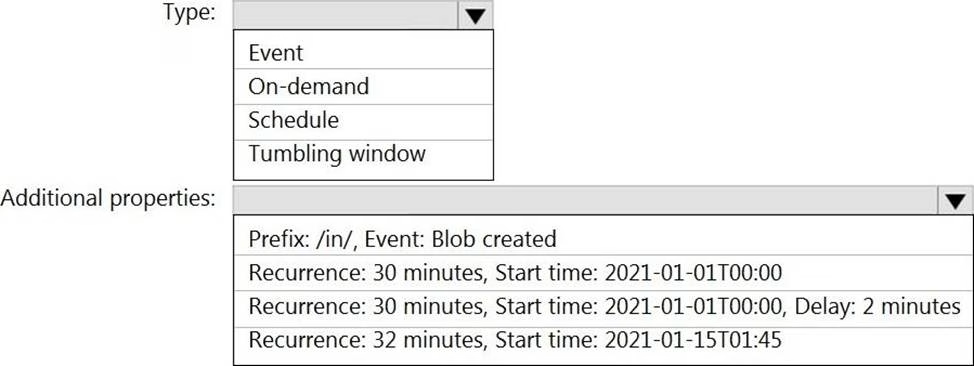

HOTSPOT

You build an Azure Data Factory pipeline to move data from an Azure Data Lake Storage Gen2 container to a database in an Azure Synapse Analytics dedicated SQL pool.

Data in the container is stored in the following folder structure.

/in/{YYYY}/{MM}/{DD}/{HH}/{mm}

The earliest folder is /in/2021/01/01/00/00. The latest folder is /in/2021/01/15/01/45.

You need to configure a pipeline trigger to meet the following requirements:

✑ Existing data must be loaded.

✑ Data must be loaded every 30 minutes.

✑ Late-arriving data of up to two minutes must be included in the load for the time at which the data should have arrived.

How should you configure the pipeline trigger? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

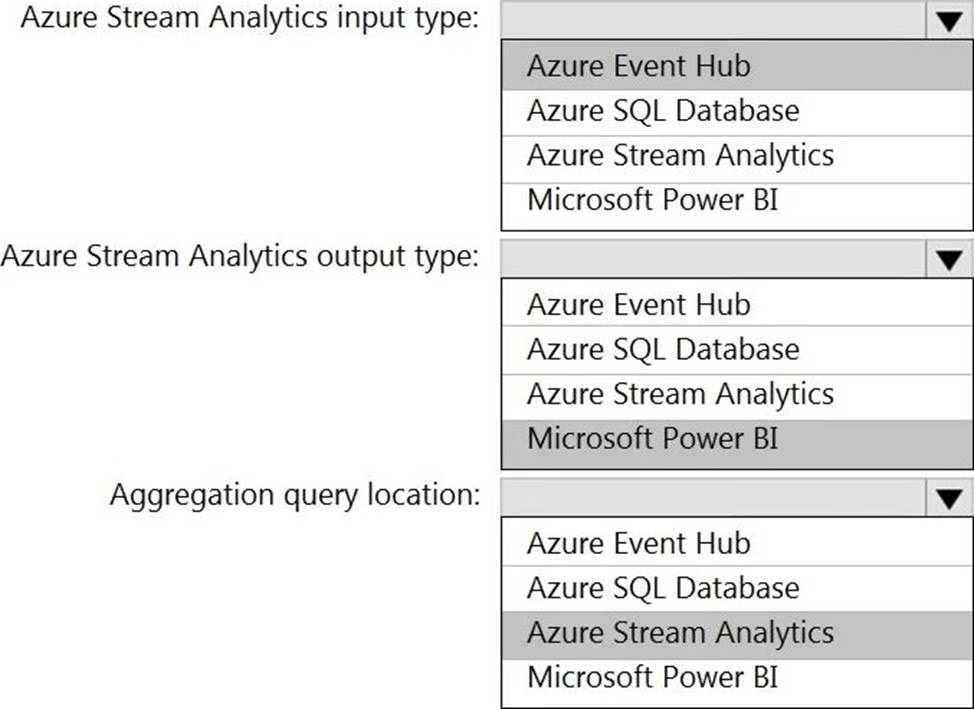

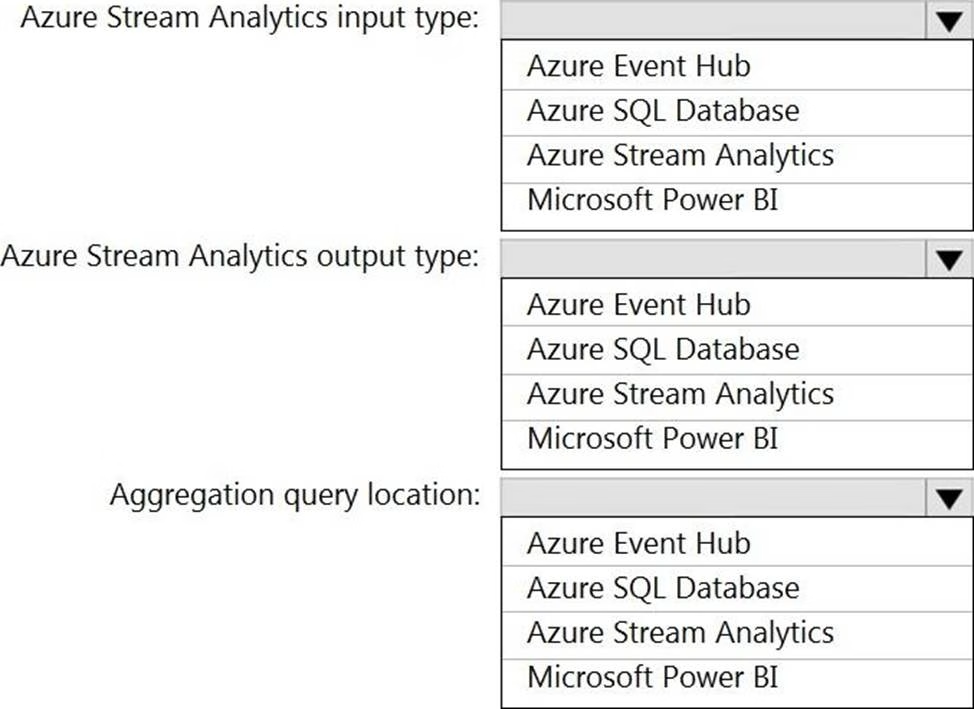
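One way to reason about the requirements above is to sketch a tumbling window trigger definition and enumerate the windows it would backfill. The snippet below is a minimal sketch, not a confirmed answer: the trigger name and the `maxConcurrency` value are hypothetical, and the property names (`frequency`, `interval`, `startTime`, `delay`) are assumed to follow the Data Factory tumbling window trigger schema. The `windows` helper is illustrative only and is not part of any Azure SDK.

```python
from datetime import datetime, timedelta, timezone

# Illustrative trigger definition expressed as a Python dict.
# Assumption: property names mirror the tumbling window trigger JSON schema.
trigger = {
    "name": "LoadEvery30Minutes",  # hypothetical name
    "properties": {
        "type": "TumblingWindowTrigger",
        "typeProperties": {
            "frequency": "Minute",
            "interval": 30,                       # one window every 30 minutes
            "startTime": "2021-01-01T00:00:00Z",  # earliest folder, so existing data is loaded
            "delay": "00:02:00",                  # wait two minutes for late-arriving data
            "maxConcurrency": 10,                 # arbitrary value chosen for the sketch
        },
    },
}


def windows(start, end, minutes):
    """Yield (window_start, window_end) pairs for complete, non-overlapping windows."""
    step = timedelta(minutes=minutes)
    current = start
    while current + step <= end:
        yield current, current + step
        current += step


start = datetime(2021, 1, 1, 0, 0, tzinfo=timezone.utc)
latest = datetime(2021, 1, 15, 1, 45, tzinfo=timezone.utc)
backfill = list(windows(start, latest, trigger["properties"]["typeProperties"]["interval"]))

print(len(backfill), "complete windows between the earliest and latest folders")
print("first window:", backfill[0])
print("last window:", backfill[-1])
```

Because each window is fixed and non-overlapping, every 30-minute slice of the folder structure maps to exactly one pipeline run, and the delay postpones each run without changing the window boundaries.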

HOTSPOT

You are designing a real-time dashboard solution that will visualize streaming data from remote sensors that connect to the internet. The streaming data must be aggregated to show the average value of each 10-second interval. The data will be discarded after being displayed in the dashboard.

The solution will use Azure Stream Analytics and must meet the following requirements:

✑ Minimize latency from an Azure event hub to the dashboard.

✑ Minimize the required storage.

✑ Minimize development effort.

What should you include in the solution? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

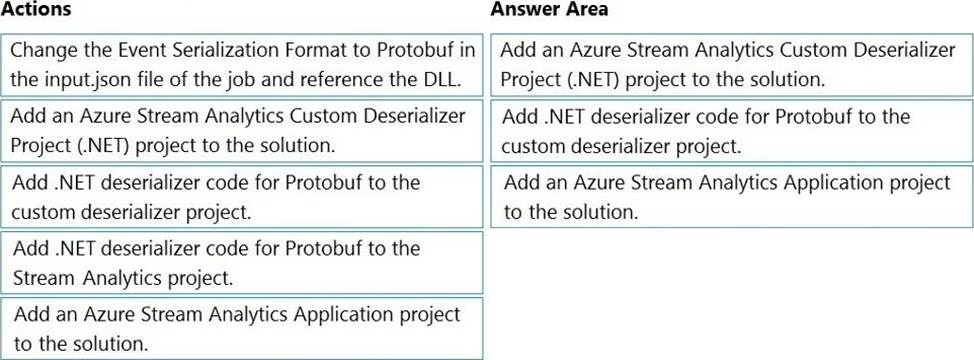

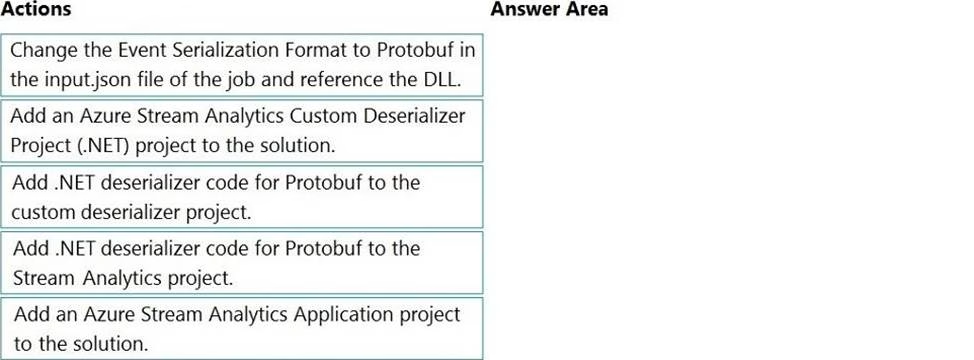

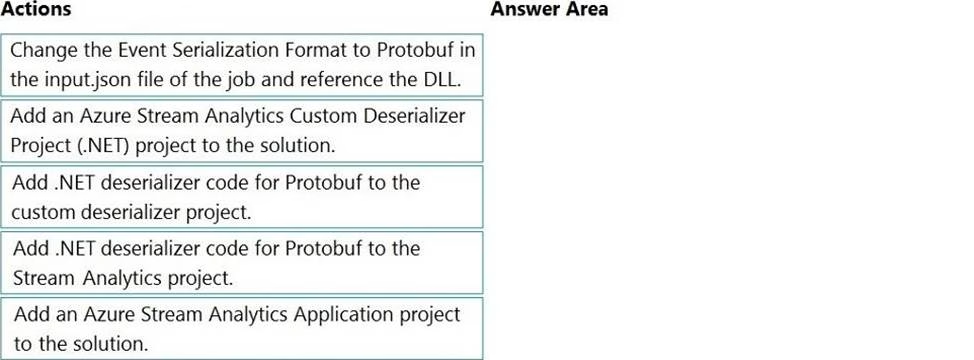

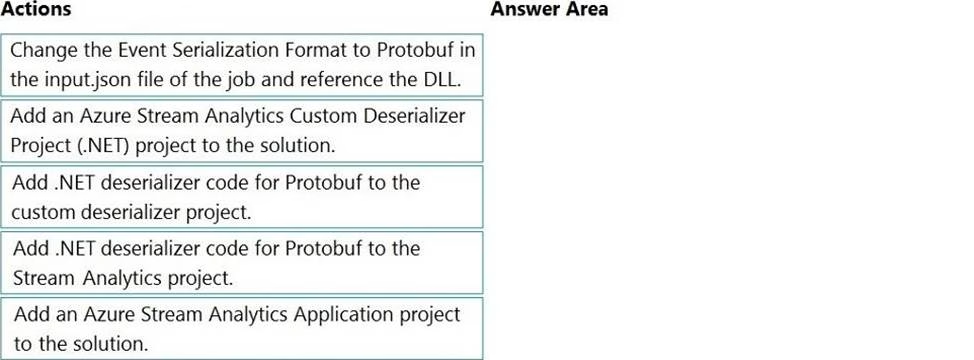

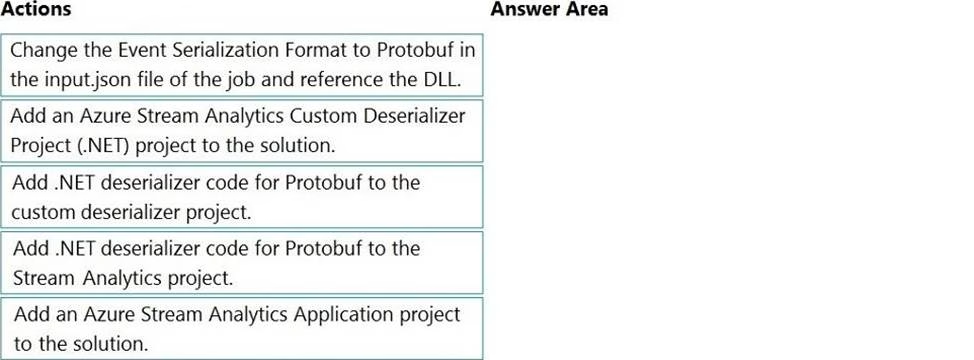
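The aggregation described in this scenario, an average per 10-second interval, can be illustrated with a small simulation. This is a sketch in plain Python rather than Stream Analytics query language; the function name `tumbling_average` and the sample readings are made up for illustration. As a simplification, each window here covers `[start, start + 10)` and is keyed by its start time, which may differ from how Stream Analytics labels its windows.

```python
from collections import defaultdict


def tumbling_average(readings, window_seconds=10):
    """Average (timestamp_seconds, value) readings per fixed, non-overlapping window."""
    sums = defaultdict(lambda: [0.0, 0])  # window_start -> [running total, count]
    for ts, value in readings:
        window_start = (ts // window_seconds) * window_seconds
        bucket = sums[window_start]
        bucket[0] += value
        bucket[1] += 1
    return {start: total / count for start, (total, count) in sorted(sums.items())}


# Made-up sensor readings: (seconds since start, measured value).
readings = [(0, 10.0), (4, 20.0), (9, 30.0), (10, 40.0), (19, 60.0)]
averages = tumbling_average(readings)

for start, avg in averages.items():
    print(f"window starting at {start}s: average {avg}")
```

Only a running total and a count are kept per window, which fits the requirement to minimize storage when the data is discarded after display.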

DRAG DROP

You have an Azure Stream Analytics job that is a Stream Analytics project solution in Microsoft Visual Studio. The job accepts data generated by IoT devices in the JSON format.

You need to modify the job to accept data generated by the IoT devices in the Protobuf format.

Which three actions should you perform from Visual Studio in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

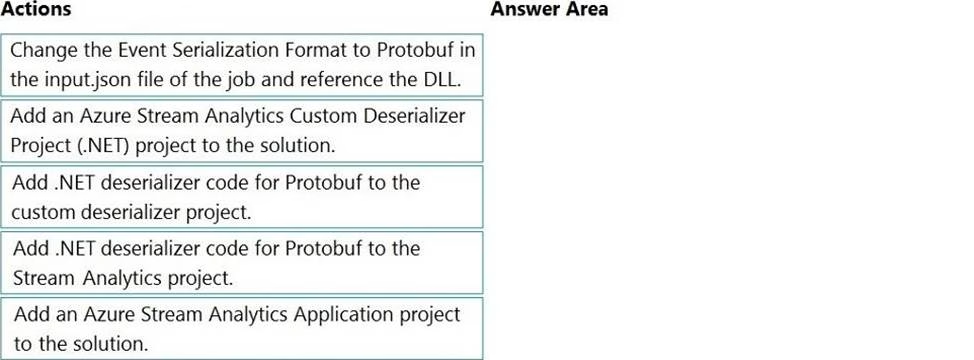

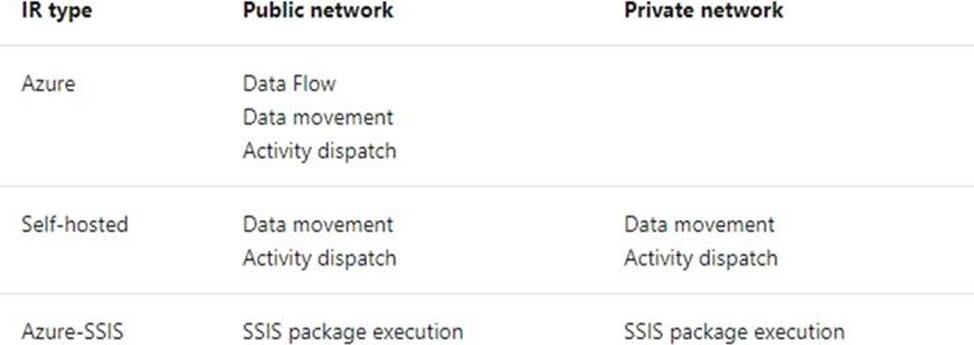

You have an Azure Storage account and a data warehouse in Azure Synapse Analytics in the UK South region.

You need to copy blob data from the storage account to the data warehouse by using Azure Data Factory.

The solution must meet the following requirements:

✑ Ensure that the data remains in the UK South region at all times.

✑ Minimize administrative effort.

Which type of integration runtime should you use?

- A . Azure integration runtime

- B . Azure-SSIS integration runtime

- C . Self-hosted integration runtime

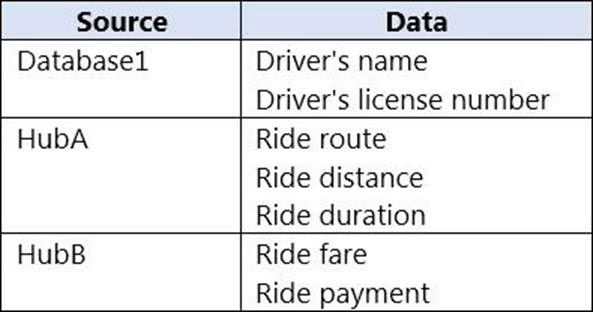

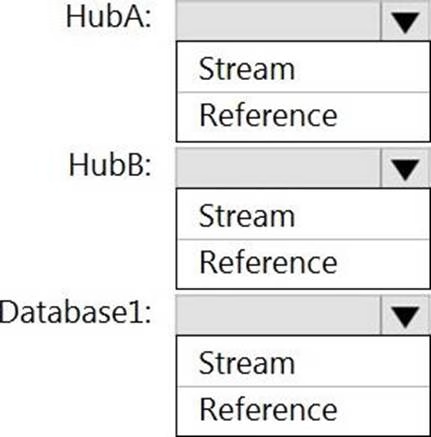

HOTSPOT

You have an Azure SQL database named Database1 and two Azure event hubs named HubA and HubB.

The data consumed from each source is shown in the following table.

You need to implement Azure Stream Analytics to calculate the average fare per mile by driver.

How should you configure the Stream Analytics input for each source? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

You have an Azure Stream Analytics job that receives clickstream data from an Azure event hub. You need to define a query in the Stream Analytics job.

The query must meet the following requirements:

✑ Count the number of clicks within each 10-second window based on the country of a visitor.

✑ Ensure that each click is NOT counted more than once.

How should you define the query?

- A . SELECT Country, Avg(*) AS Average
  FROM ClickStream TIMESTAMP BY CreatedAt
  GROUP BY Country, SlidingWindow(second, 10)

- B . SELECT Country, Count(*) AS Count
  FROM ClickStream TIMESTAMP BY CreatedAt
  GROUP BY Country, TumblingWindow(second, 10)

- C . SELECT Country, Avg(*) AS Average
  FROM ClickStream TIMESTAMP BY CreatedAt
  GROUP BY Country, HoppingWindow(second, 10, 2)

- D . SELECT Country, Count(*) AS Count
  FROM ClickStream TIMESTAMP BY CreatedAt
  GROUP BY Country, SessionWindow(second, 5, 10)
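The requirement that each click is counted only once comes down to whether the windows overlap. The following sketch simulates two of the window types from the options in plain Python; it is not Stream Analytics code, the function names and sample clicks are hypothetical, and windows are modeled as `[start, start + size)` for simplicity.

```python
from collections import Counter


def tumbling_counts(clicks, size=10):
    """Count clicks per (window_start, country) using non-overlapping windows."""
    counts = Counter()
    for ts, country in clicks:
        counts[(ts // size * size, country)] += 1
    return counts


def hopping_counts(clicks, size=10, hop=2):
    """Count clicks per (window_start, country) using windows that start every `hop` seconds."""
    counts = Counter()
    for ts, country in clicks:
        # Every window start that is a multiple of `hop` and satisfies
        # start <= ts < start + size contains this click.
        first = ((ts - size) // hop + 1) * hop
        for start in range(first, ts + 1, hop):
            counts[(start, country)] += 1
    return counts


# Made-up clickstream: (seconds since start, visitor country).
clicks = [(1, "US"), (5, "US"), (12, "US"), (3, "DE")]

tumbling = tumbling_counts(clicks)
hopping = hopping_counts(clicks)

print("tumbling total:", sum(tumbling.values()))
print("hopping total:", sum(hopping.values()))
```

In this simulation the tumbling totals match the number of clicks, while the 10-second window with a 2-second hop places every click in five overlapping windows.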
