Microsoft DP-200 Implementing an Azure Data Solution Online Training


The questions for DP-200 were last updated on Feb 15, 2026.

- Exam Code: DP-200

- Exam Name: Implementing an Azure Data Solution

- Certification Provider: Microsoft

- Latest update: Feb 15, 2026

HOTSPOT

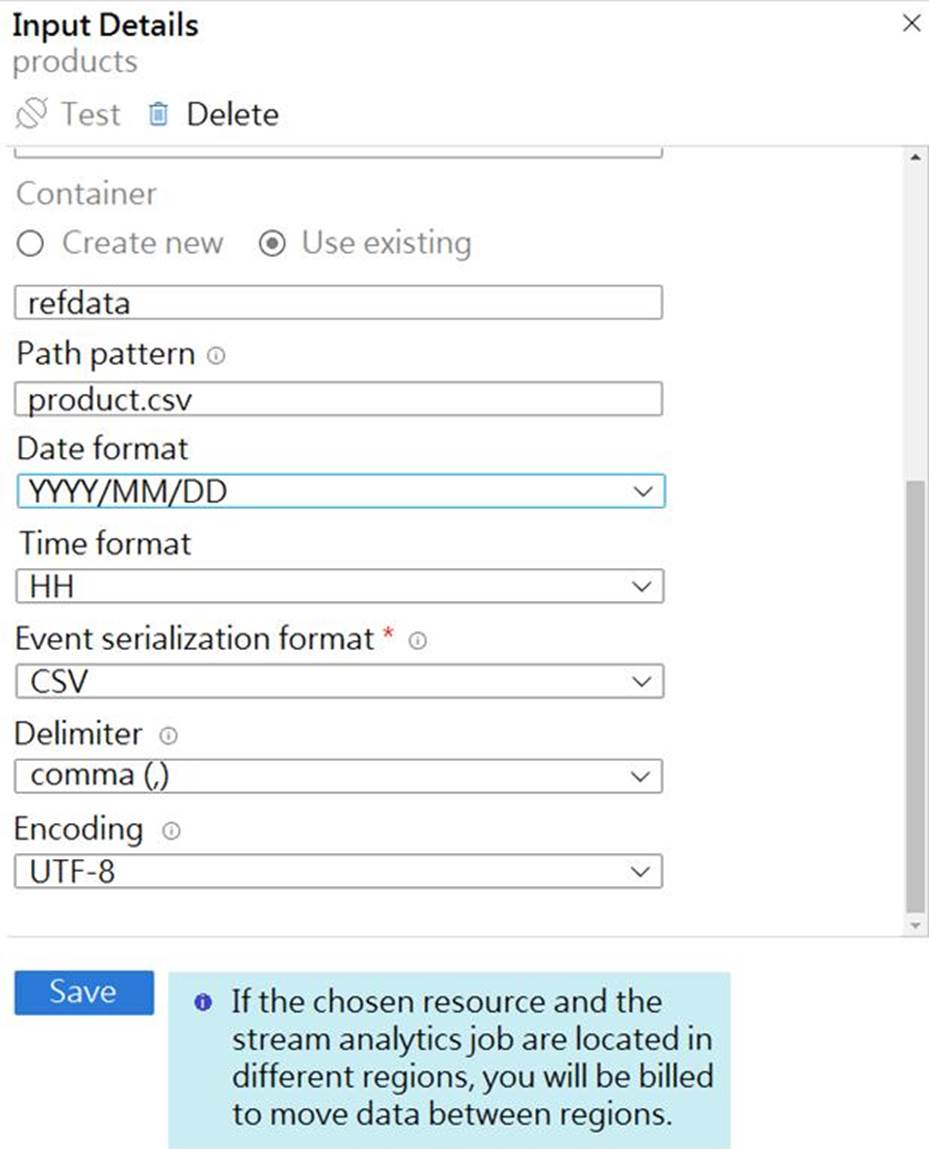

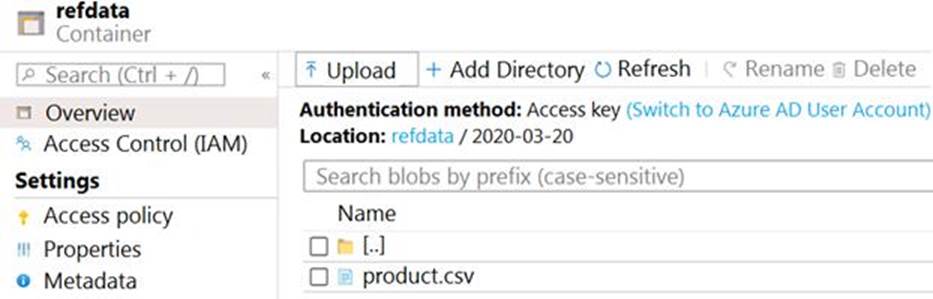

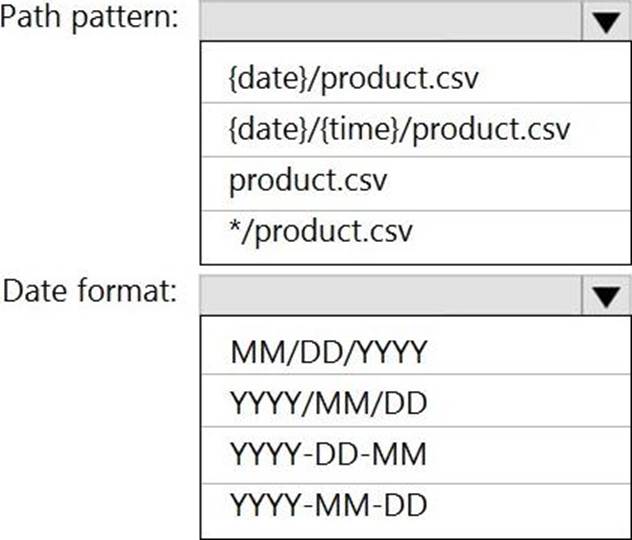

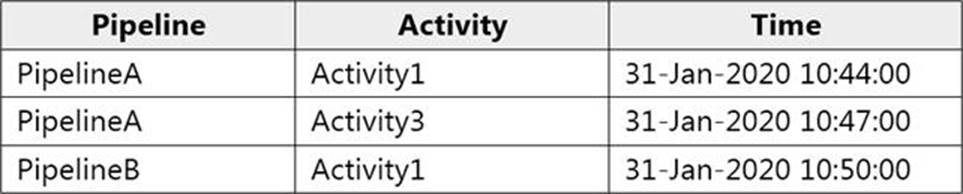

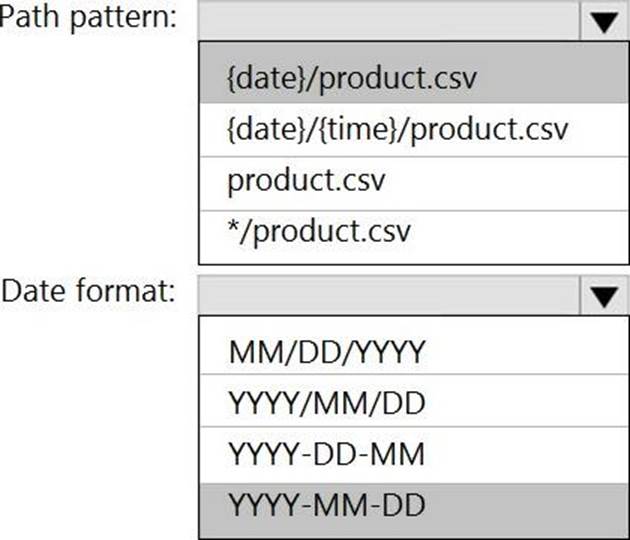

You are building an Azure Stream Analytics job that queries reference data from a product catalog file. The file is updated daily.

The reference data input details for the file are shown in the Input exhibit.

The storage account container view is shown in the Refdata exhibit.

You need to configure the Stream Analytics job to pick up the new reference data.

What should you configure? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.
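Stream Analytics reference data inputs in Blob storage accept a path pattern containing `{date}` and `{time}` tokens, which the job expands to locate the current snapshot. The following is a minimal sketch of that token expansion only, not the job's actual implementation. The pattern `catalog/{date}/products.csv`, the function name, and the chosen date and time formats are hypothetical, because the Input and Refdata exhibits are not reproduced here.

```python
from datetime import datetime, timezone


def resolve_reference_path(pattern: str, when: datetime,
                           date_format: str = "%Y-%m-%d",
                           time_format: str = "%H") -> str:
    """Expand {date} and {time} tokens in a reference data path pattern.

    The formats are assumptions chosen for illustration; the real job
    uses whatever date and time formats are configured on the input.
    """
    return (pattern
            .replace("{date}", when.strftime(date_format))
            .replace("{time}", when.strftime(time_format)))


# Hypothetical daily snapshot of the product catalog.
snapshot = datetime(2026, 2, 15, 0, 0, tzinfo=timezone.utc)
path = resolve_reference_path("catalog/{date}/products.csv", snapshot)
print(path)  # catalog/2026-02-15/products.csv
```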

Your company uses several Azure HDInsight clusters.

The data engineering team reports several errors with some applications that use these clusters.

You need to recommend a solution to review the health of the clusters.

What should you include in your recommendation?

- A . Azure Automation

- B . Log Analytics

- C . Application Insights

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure subscription that contains an Azure Storage account.

You plan to implement changes to a data storage solution to meet regulatory and compliance standards.

Every day, Azure needs to identify and delete blobs that were NOT modified during the last 100 days.

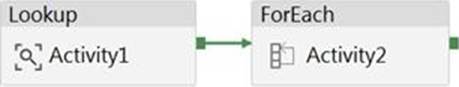

Solution: You schedule an Azure Data Factory pipeline.

Does this meet the goal?

- A . Yes

- B . No
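The requirement in this scenario comes down to a date comparison: a blob qualifies for deletion when its last-modified time is more than 100 days old. The sketch below shows only that selection rule in plain Python, independent of whichever Azure service ends up enforcing it. The blob names, the `stale_blobs` helper, and the treatment of a blob modified exactly 100 days ago are assumptions made for illustration.

```python
from datetime import datetime, timedelta, timezone

RETENTION_DAYS = 100  # from the scenario


def stale_blobs(blobs, now, retention_days=RETENTION_DAYS):
    """Return names of blobs whose last-modified time is before the cutoff.

    `blobs` is an iterable of (name, last_modified) pairs. A blob modified
    exactly `retention_days` ago is kept (an assumption about the boundary).
    """
    cutoff = now - timedelta(days=retention_days)
    return [name for name, last_modified in blobs if last_modified < cutoff]


now = datetime(2026, 2, 15, tzinfo=timezone.utc)
blobs = [  # hypothetical inventory
    ("reports/old.csv", now - timedelta(days=101)),
    ("reports/edge.csv", now - timedelta(days=100)),
    ("reports/new.csv", now - timedelta(days=3)),
]
print(stale_blobs(blobs, now))  # ['reports/old.csv']
```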

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You plan to create an Azure Databricks workspace that has a tiered structure.

The workspace will contain the following three workloads:

✑ A workload for data engineers who will use Python and SQL

✑ A workload for jobs that will run notebooks that use Python, Spark, Scala, and SQL

✑ A workload that data scientists will use to perform ad hoc analysis in Scala and R

The enterprise architecture team at your company identifies the following standards for Databricks environments:

✑ The data engineers must share a cluster.

✑ The job cluster will be managed by using a request process whereby data scientists and data engineers provide packaged notebooks for deployment to the cluster.

✑ All the data scientists must be assigned their own cluster that terminates automatically after 120 minutes of inactivity. Currently, there are three data scientists.

You need to create the Databricks clusters for the workloads.

Solution: You create a Standard cluster for each data scientist, a High Concurrency cluster for the data engineers, and a Standard cluster for the jobs.

Does this meet the goal?

- A . Yes

- B . No
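The per-scientist requirement (one cluster each, terminating after 120 minutes of inactivity) could be expressed as cluster definitions like the ones sketched below. The field names follow the Databricks Clusters API request body; the user names, worker count, and the `<runtime-version>` and `<node-type>` placeholders are hypothetical and would need real values before use.

```python
def scientist_cluster_spec(user: str) -> dict:
    """Build a cluster definition for one data scientist.

    Placeholder values are assumptions; only autotermination_minutes
    comes from the stated standard.
    """
    return {
        "cluster_name": f"ds-{user}",
        "spark_version": "<runtime-version>",  # placeholder
        "node_type_id": "<node-type>",         # placeholder
        "num_workers": 2,                      # assumed size
        "autotermination_minutes": 120,        # from the scenario
    }


# The scenario states there are currently three data scientists.
scientists = ["scientist1", "scientist2", "scientist3"]
specs = [scientist_cluster_spec(user) for user in scientists]
for spec in specs:
    print(spec["cluster_name"], spec["autotermination_minutes"])
```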

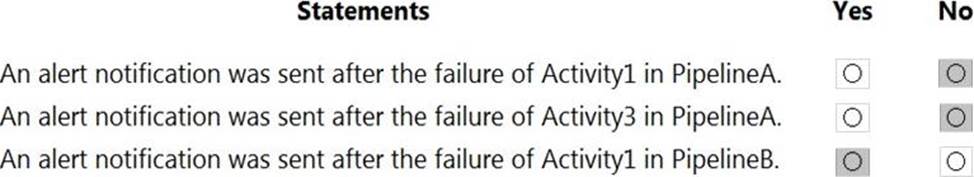

HOTSPOT

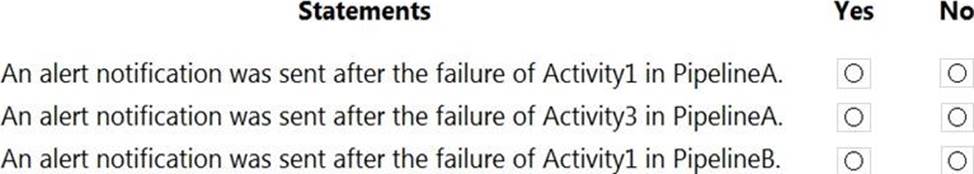

You have an Azure data factory that has two pipelines named PipelineA and PipelineB.

PipelineA has four activities as shown in the following exhibit.

PipelineB has two activities as shown in the following exhibit.

You create an alert for the data factory that uses Failed pipeline runs metrics for both pipelines and all failure types.

The metric has the following settings:

✑ Operator: Greater than

✑ Aggregation type: Total

✑ Threshold value: 2

✑ Aggregation granularity (Period): 5 minutes

✑ Frequency of evaluation: Every 5 minutes

Data Factory monitoring records the failures shown in the following table.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct answer selection is worth one point.
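The alert settings listed above can be read as: sum the failed pipeline runs in each 5-minute period, and fire when that total is greater than 2. The sketch below is a simplified model of that rule using fixed, clock-aligned 5-minute buckets; Azure Monitor's real window alignment may differ. The failure timestamps are hypothetical, since the table of recorded failures is not reproduced here.

```python
from collections import Counter
from datetime import datetime

THRESHOLD = 2        # "Greater than 2"
PERIOD_MINUTES = 5   # aggregation granularity


def window_start(ts: datetime) -> datetime:
    """Round a timestamp down to the start of its 5-minute bucket."""
    minute = ts.minute - ts.minute % PERIOD_MINUTES
    return ts.replace(minute=minute, second=0, microsecond=0)


def firing_windows(failure_times):
    """Return the buckets whose total failure count exceeds the threshold."""
    totals = Counter(window_start(ts) for ts in failure_times)
    return sorted(w for w, total in totals.items() if total > THRESHOLD)


failures = [  # hypothetical failures across both pipelines
    datetime(2026, 2, 15, 9, 1),
    datetime(2026, 2, 15, 9, 2),
    datetime(2026, 2, 15, 9, 4),  # third failure in the 09:00 bucket
    datetime(2026, 2, 15, 9, 6),
    datetime(2026, 2, 15, 9, 8),  # only two failures in the 09:05 bucket
]
print(firing_windows(failures))  # [datetime.datetime(2026, 2, 15, 9, 0)]
```

Note that exactly two failures in a period do not fire the alert, because the operator is "Greater than" and not "Greater than or equal to".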

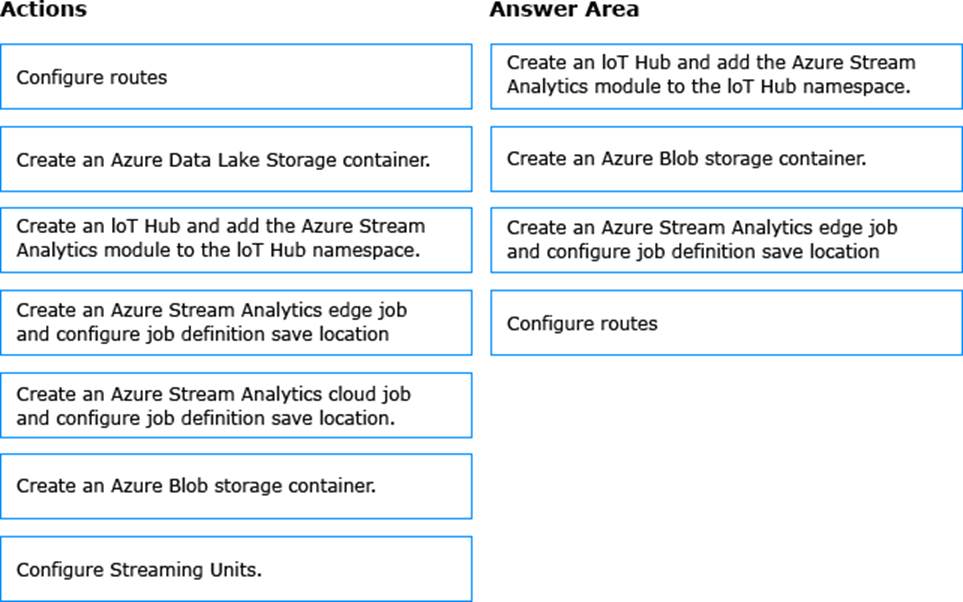

DRAG DROP

You develop data engineering solutions for a company.

You need to deploy a Microsoft Azure Stream Analytics job for an IoT solution.

The solution must:

• Minimize latency.

• Minimize bandwidth usage between the job and the IoT device.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

You are designing an enterprise data warehouse in Azure Synapse Analytics. You plan to load millions of rows of data into the data warehouse each day.

You must ensure that staging tables are optimized for data loading.

You need to design the staging tables.

What type of tables should you recommend?

- A . Round-robin distributed table

- B . Hash-distributed table

- C . Replicated table

- D . External table
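To make the difference between options A and B concrete, the sketch below places rows into the 60 distributions of a dedicated SQL pool in two ways: in turn (round-robin) and by hashing a column value. It is an illustration only. `zlib.crc32` stands in for the service's internal hash function, and the row data and column names are hypothetical.

```python
import zlib
from collections import Counter

DISTRIBUTIONS = 60  # a dedicated SQL pool spreads each table over 60 distributions


def round_robin(rows):
    """Assign rows to distributions in turn, ignoring their contents."""
    return Counter(i % DISTRIBUTIONS for i, _ in enumerate(rows))


def hash_distributed(rows, key):
    """Assign each row by hashing one column (crc32 is a stand-in hash)."""
    return Counter(
        zlib.crc32(str(row[key]).encode()) % DISTRIBUTIONS for row in rows
    )


# Hypothetical staging rows with a heavily skewed "region" column.
rows = [{"order_id": i, "region": "EU" if i % 10 else "US"} for i in range(6000)]

rr = round_robin(rows)
hd = hash_distributed(rows, "region")
print(len(rr), set(rr.values()))  # 60 {100}: every distribution gets 100 rows
print(len(hd))                    # at most 2 distributions hold all the rows
```

Round-robin placement needs no per-row computation and stays even regardless of the data, while hash placement depends on how well the chosen column spreads its values.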

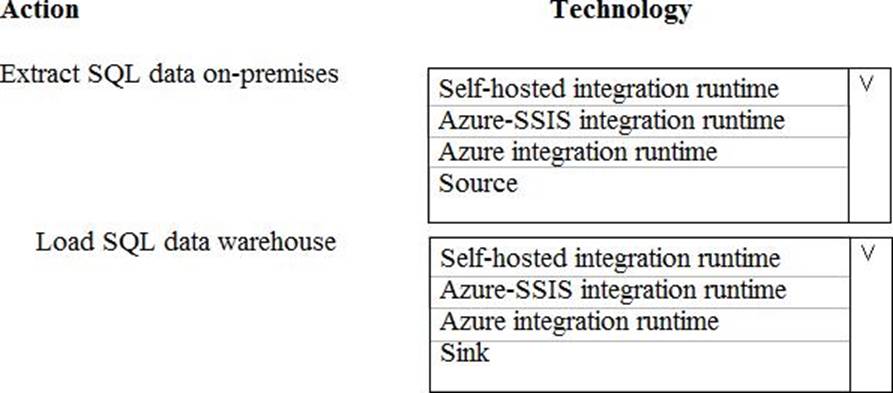

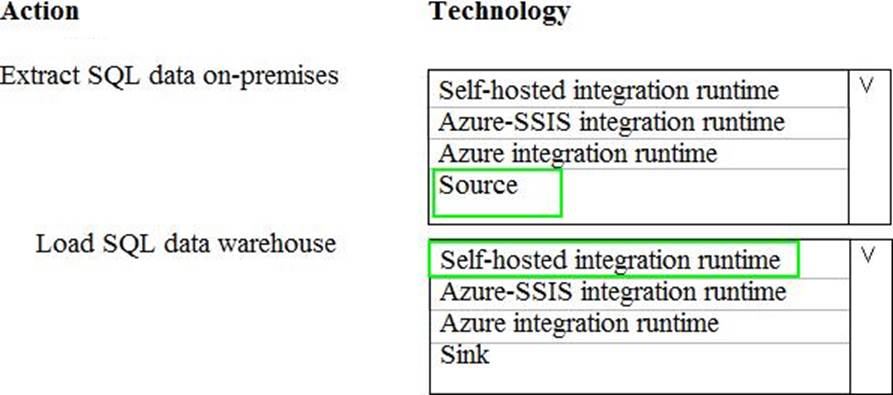

HOTSPOT

A company runs Microsoft Dynamics CRM with Microsoft SQL Server on-premises. SQL Server Integration Services (SSIS) packages extract data from Dynamics CRM APIs, and load the data into a SQL Server data warehouse.

The datacenter is running out of capacity. Because of the network configuration, you must extract on-premises data to the cloud over HTTPS. You cannot open any additional ports. The solution must require the least amount of effort.

You need to create the pipeline system.

Which component should you use? To answer, select the appropriate technology in the dialog box in the answer area. NOTE: Each correct selection is worth one point.

A company is designing a hybrid solution to synchronize data from an on-premises Microsoft SQL Server database to Azure SQL Database.

You must perform an assessment of databases to determine whether data will move without compatibility issues.

You need to perform the assessment.

Which tool should you use?

- A . Azure SQL Data Sync

- B . SQL Vulnerability Assessment (VA)

- C . SQL Server Migration Assistant (SSMA)

- D . Microsoft Assessment and Planning Toolkit

- E . Data Migration Assistant (DMA)

CORRECT TEXT

Use the following login credentials as needed:

Azure Username: xxxxx

Azure Password: xxxxx

The following information is for technical support purposes only:

Lab Instance: 10543936

You plan to enable Azure Multi-Factor Authentication (MFA).

You need to ensure that [email protected] can manage any databases hosted on an Azure SQL server named SQL10543936 by signing in using his Azure Active Directory (Azure AD) user account.

To complete this task, sign in to the Azure portal.


I don’t understand how to answer the drag-and-drop questions, and some of the answers are confusing.