Microsoft DP-200 Implementing an Azure Data Solution Online Training


The questions for DP-200 were last updated on Feb 14, 2026.

- Exam Code: DP-200

- Exam Name: Implementing an Azure Data Solution

- Certification Provider: Microsoft

- Latest update: Feb 14, 2026

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this scenario, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

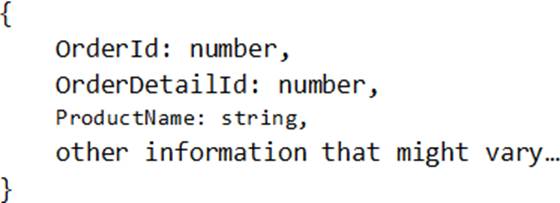

You have a container named Sales in an Azure Cosmos DB database. Sales has 120 GB of data. Each entry in Sales has the following structure.

The partition key is set to the OrderId attribute.

Users report that when they perform queries that retrieve data by ProductName, the queries take longer than expected to complete.

You need to reduce the amount of time it takes to execute the problematic queries.

Solution: You create a lookup collection that uses ProductName as a partition key.

Does this meet the goal?

- A . Yes

- B . No
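The scenario depends on how Azure Cosmos DB routes queries by partition key. The following toy model is plain Python and **not** the Azure Cosmos DB SDK. The class `ToyContainer`, the `partition_for` hash, the partition count, and the sample items are all hypothetical. It only illustrates one idea: a filter on the partition key is routed to a single partition, while a filter on any other attribute must fan out to every partition. In this sketch, a separate collection partitioned by ProductName maps each ProductName to OrderId values, and each of those can then be read with a single-partition query against Sales.

```python
from collections import defaultdict
from zlib import crc32

NUM_PARTITIONS = 8  # hypothetical number of physical partitions


def partition_for(key_value: str) -> int:
    """Map a partition-key value to a physical partition with a toy hash."""
    return crc32(key_value.encode("utf-8")) % NUM_PARTITIONS


class ToyContainer:
    """Groups items by the hash of a single partition-key field (toy model)."""

    def __init__(self, partition_key: str) -> None:
        self.partition_key = partition_key
        self.partitions = defaultdict(list)

    def add(self, item: dict) -> None:
        """Store an item in the partition chosen by its partition-key value."""
        self.partitions[partition_for(item[self.partition_key])].append(item)

    def query(self, field: str, value: str):
        """Return (matching items, number of partitions scanned)."""
        if field == self.partition_key:
            targets = [partition_for(value)]  # routed to one partition
        else:
            targets = range(NUM_PARTITIONS)  # fan out to every partition
        hits = [item for p in targets for item in self.partitions[p]
                if item.get(field) == value]
        return hits, len(targets)


# Hypothetical sample data: Sales is partitioned by OrderId.
sales = ToyContainer("OrderId")
# Hypothetical lookup collection partitioned by ProductName.
lookup = ToyContainer("ProductName")
for i, product in enumerate(["Bike", "Helmet", "Bike", "Lock"]):
    order = {"OrderId": f"order-{i}", "ProductName": product}
    sales.add(order)
    lookup.add({"ProductName": product, "OrderId": order["OrderId"]})
```

In this model, querying `sales` by ProductName scans all eight toy partitions, while querying `lookup` by ProductName scans one. The trade-off is that every write to Sales also needs a matching write to the lookup collection to keep the two in sync.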

You have an Azure Stream Analytics job that receives clickstream data from an Azure event hub.

You need to define a query in the Stream Analytics job.

The query must meet the following requirements:

✑ Count the number of clicks within each 10-second window based on the country of a visitor.

✑ Ensure that each click is NOT counted more than once.

How should you define the query?

- A . SELECT Country, Count(*) AS Count FROM ClickStream TIMESTAMP BY CreatedAt GROUP BY Country, TumblingWindow(second, 10)

- B . SELECT Country, Count(*) AS Count FROM ClickStream TIMESTAMP BY CreatedAt GROUP BY Country, SessionWindow(second, 5, 10)

- C . SELECT Country, Avg(*) AS Average FROM ClickStream TIMESTAMP BY CreatedAt GROUP BY Country, SlidingWindow(second, 10)

- D . SELECT Country, Avg(*) AS Average FROM ClickStream TIMESTAMP BY CreatedAt GROUP BY Country, HoppingWindow(second, 10, 2)
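The "counted once" requirement depends on whether windows overlap. The small simulation below is plain Python, not Stream Analytics query syntax, and the sample clicks are made up. A tumbling window of 10 seconds assigns each event to exactly one window. A hopping window of 10 seconds that hops every 2 seconds, as in `HoppingWindow(second, 10, 2)`, places each event in five overlapping windows. The sketch treats every window as the half-open interval [end - size, end). The service's exact rules for events that fall on a window boundary are not modeled here.

```python
from collections import Counter

# Hypothetical sample clicks: (seconds since start, visitor country).
CLICKS = [(1, "US"), (4, "US"), (9, "FR"), (12, "US"), (15, "FR"), (27, "US")]


def tumbling_counts(clicks, size):
    """Count clicks per (window_end, country) for non-overlapping windows."""
    counts = Counter()
    for ts, country in clicks:
        window_end = (ts // size + 1) * size  # exactly one window per event
        counts[(window_end, country)] += 1
    return counts


def hopping_counts(clicks, size, hop):
    """Count clicks per (window_end, country) for overlapping windows."""
    counts = Counter()
    for ts, country in clicks:
        # Window ends lie on the hop grid; a window contains ts
        # when ts < window_end <= ts + size.
        first_end = (ts // hop + 1) * hop
        for window_end in range(first_end, ts + size + 1, hop):
            counts[(window_end, country)] += 1
    return counts
```

With the sample data, the tumbling totals add up to the six input clicks. The hopping totals add up to 30, because each click is counted in five windows.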

You have an Azure Cosmos DB database that uses the SQL API.

You need to delete stale data from the database automatically.

What should you use?

- A . soft delete

- B . Low Latency Analytical Processing (LLAP)

- C . schema on read

- D . Time to Live (TTL)
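In Azure Cosmos DB, TTL is set on the container as a default. An item can override it with its own `ttl` property, and expiry is measured from the item's last-modified timestamp `_ts`. Expired items are then removed by a background process. The function below is a hypothetical Python sketch of those rules, not an SDK call. It assumes that a container without TTL ignores item values, that `-1` means "never expire", and that a positive value is a lifetime in seconds. The `>=` at the expiry instant is a modeling choice.

```python
from typing import Optional


def is_expired(container_default_ttl: Optional[int], item: dict, now: int) -> bool:
    """Sketch of Cosmos DB TTL rules (hypothetical helper, not an SDK API).

    container_default_ttl: None means TTL is off for the container,
        -1 means TTL is on but items never expire unless they set ttl,
        n > 0 means items expire n seconds after their last write.
    item["_ts"]: last-modified time in epoch seconds.
    item.get("ttl"): optional per-item override of the container default.
    """
    if container_default_ttl is None:
        return False  # per-item ttl values are ignored when TTL is off
    ttl = item.get("ttl", container_default_ttl)
    if ttl == -1:
        return False  # this item never expires
    return now >= item["_ts"] + ttl
```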

You need to develop a pipeline for processing data.

The pipeline must meet the following requirements:

• Scale resources up and down to reduce costs.

• Use an in-memory data processing engine to speed up ETL and machine learning operations.

• Use streaming capabilities.

• Provide the ability to code in SQL, Python, Scala, and R.

• Integrate workspace collaboration with Git.

What should you use?

- A . HDInsight Spark Cluster

- B . Azure Stream Analytics

- C . HDInsight Hadoop Cluster

- D . Azure SQL Data Warehouse

You develop data engineering solutions for a company. The company has on-premises Microsoft SQL Server databases at multiple locations.

The company must integrate data with Microsoft Power BI and Microsoft Azure Logic Apps.

The solution must avoid single points of failure during connection and transfer to the cloud.

The solution must also minimize latency.

You need to secure the transfer of data between on-premises databases and Microsoft Azure.

What should you do?

- A . Install a standalone on-premises Azure data gateway at each location

- B . Install an on-premises data gateway in personal mode at each location

- C . Install an Azure on-premises data gateway at the primary location

- D . Install an Azure on-premises data gateway as a cluster at each location

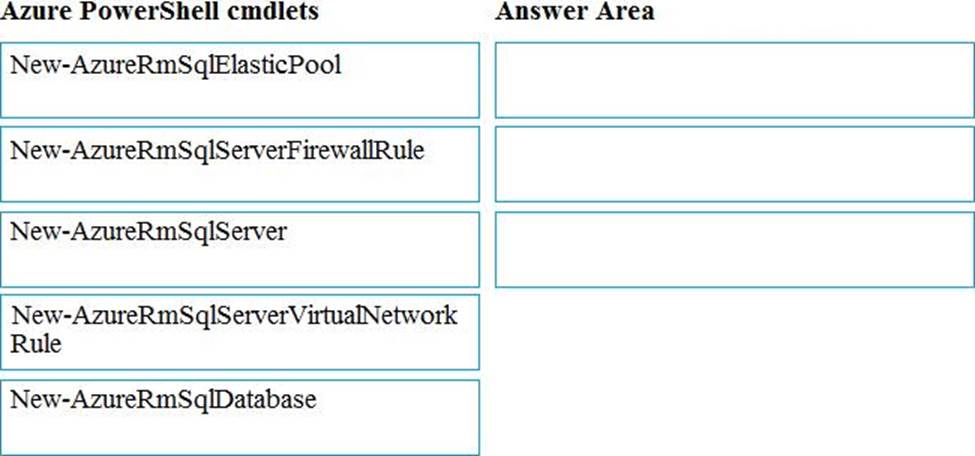

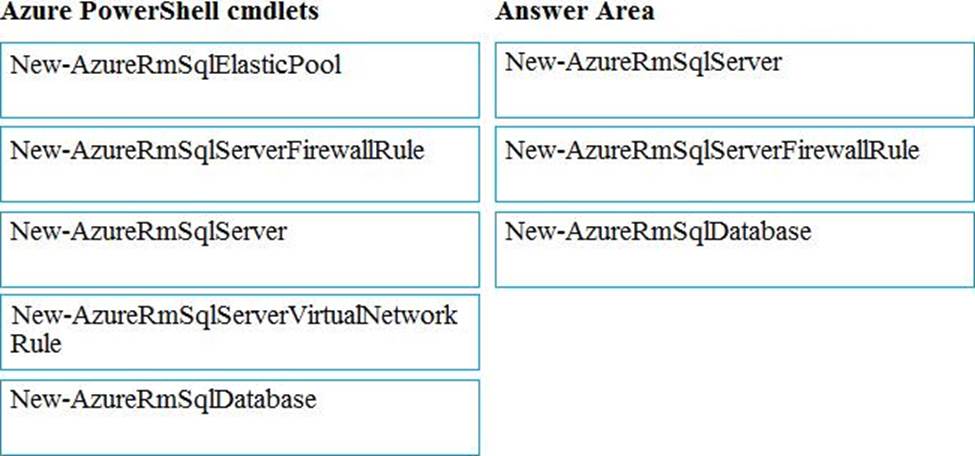

DRAG DROP

You plan to create a new single database instance of Microsoft Azure SQL Database.

The database must allow communication only from the data engineer’s workstation. You must connect directly to the instance by using Microsoft SQL Server Management Studio.

You need to create and configure the database.

Which three Azure PowerShell cmdlets should you use to develop the solution? To answer, move the appropriate cmdlets from the list of cmdlets to the answer area and arrange them in the correct order.

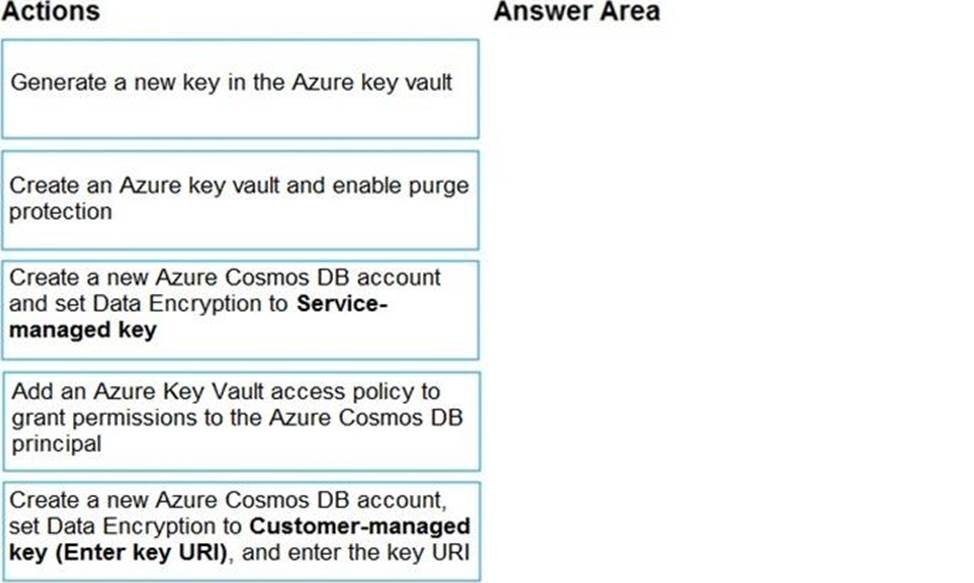

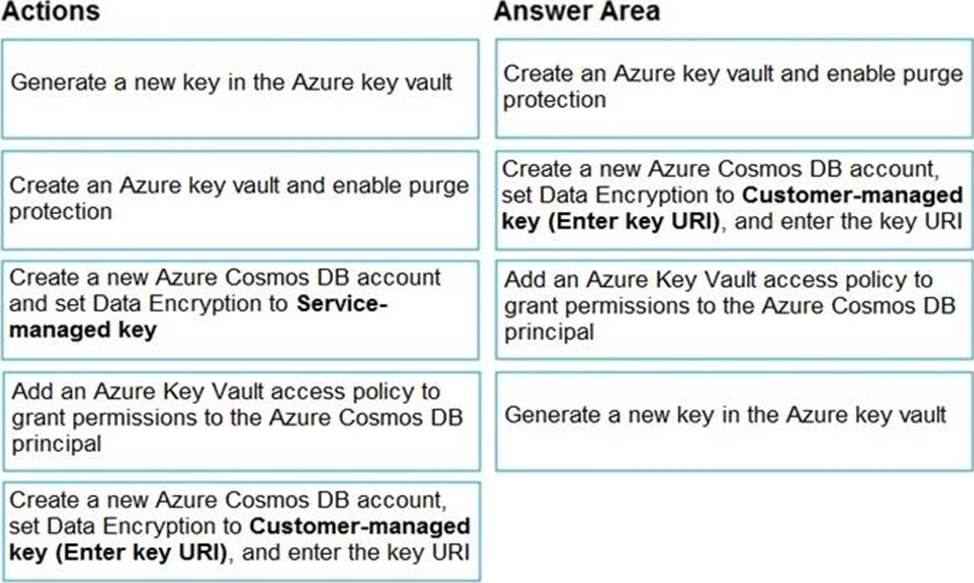

DRAG DROP

You need to create an Azure Cosmos DB account that will use encryption keys managed by your organization.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order. NOTE: More than one order of answer choices is correct. You will receive credit for any of the correct orders you select.

You have an Azure subscription that contains an Azure Data Factory version 2 (V2) data factory named df1. df1 contains a linked service.

You have an Azure key vault named vault1 that contains an encryption key named key1.

You need to encrypt df1 by using key1.

What should you do first?

- A . Disable purge protection on vault1.

- B . Create a self-hosted integration runtime.

- C . Disable soft delete on vault1.

- D . Remove the linked service from df1.

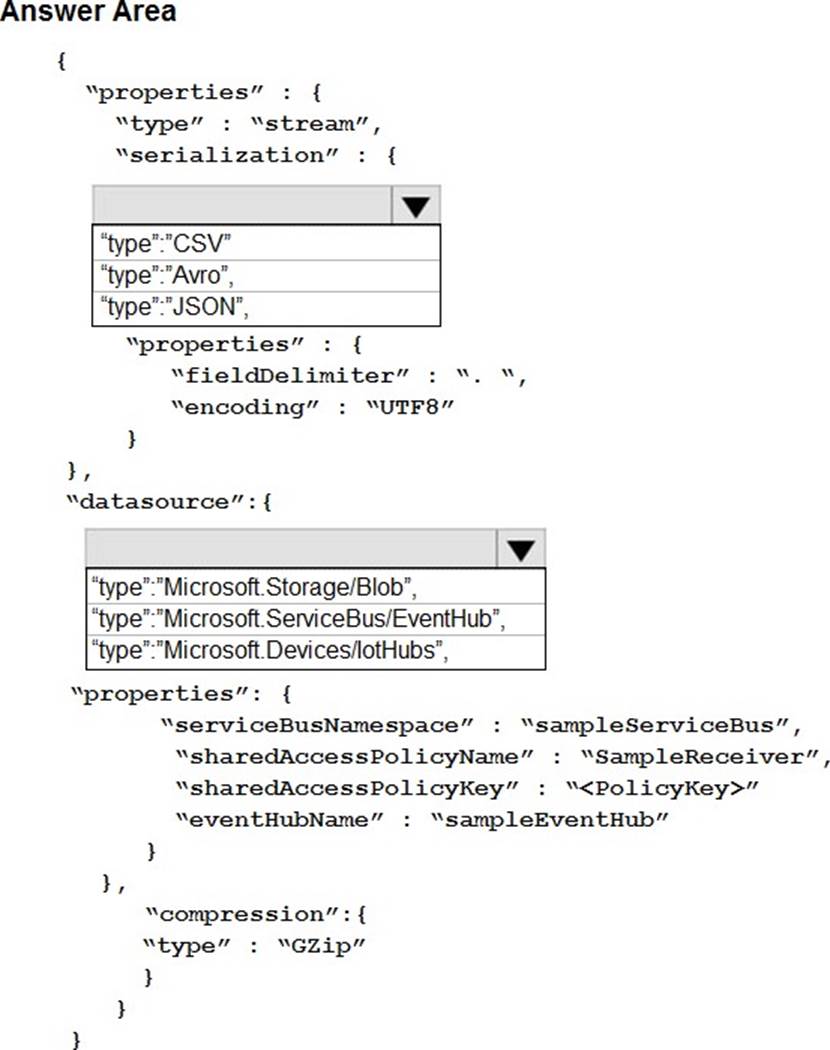

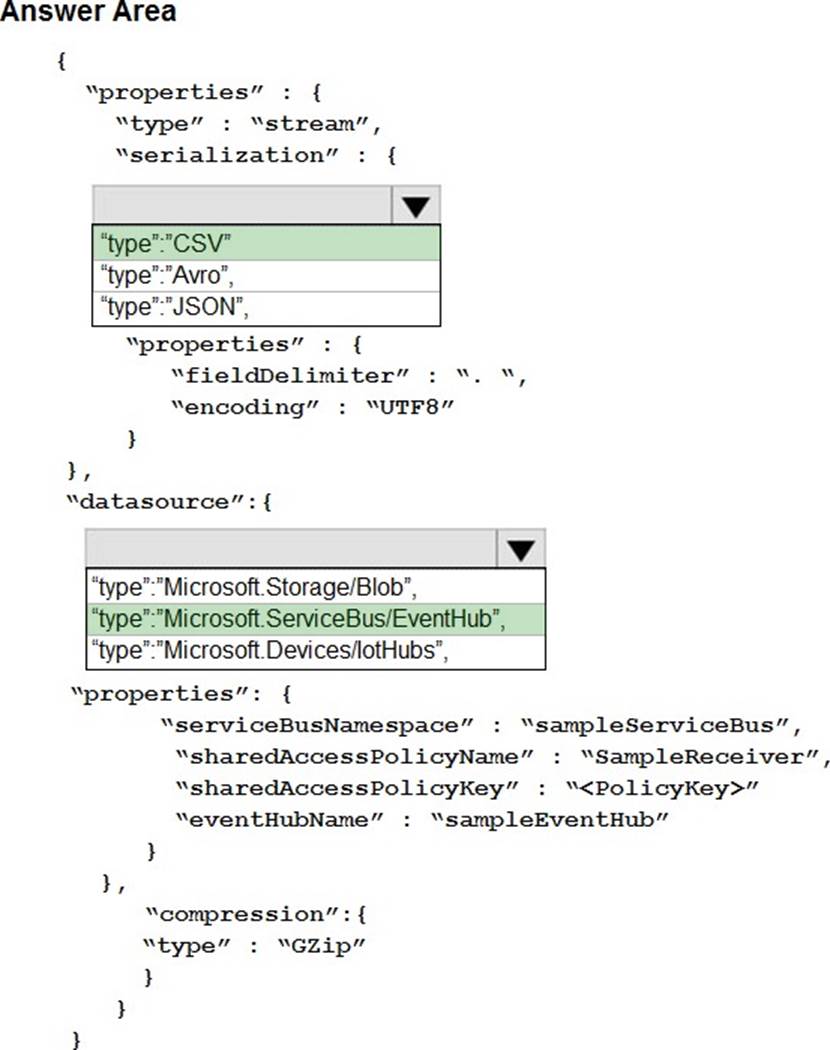

HOTSPOT

A company plans to analyze a continuous flow of data from a social media platform by using Microsoft Azure Stream Analytics. The incoming data is formatted as one record per row.

You need to create the input stream.

How should you complete the REST API segment? To answer, select the appropriate configuration in the answer area. NOTE: Each correct selection is worth one point.

You manage an enterprise data warehouse in Azure Synapse Analytics.

Users report slow performance when they run commonly used queries. Users do not report performance changes for infrequently used queries.

You need to monitor resource utilization to determine the source of the performance issues.

Which metric should you monitor?

- A . Data Warehouse Units (DWU) used

- B . DWU limit

- C . Cache hit percentage

- D . Data IO percentage


I don’t understand how to answer the drag-and-drop questions, and some of the answers are confusing.