Microsoft DP-200 Implementing an Azure Data Solution Online Training

The questions for DP-200 were last updated on Feb 16, 2026.

- Exam Code: DP-200

- Exam Name: Implementing an Azure Data Solution

- Certification Provider: Microsoft

- Latest update: Feb 16, 2026

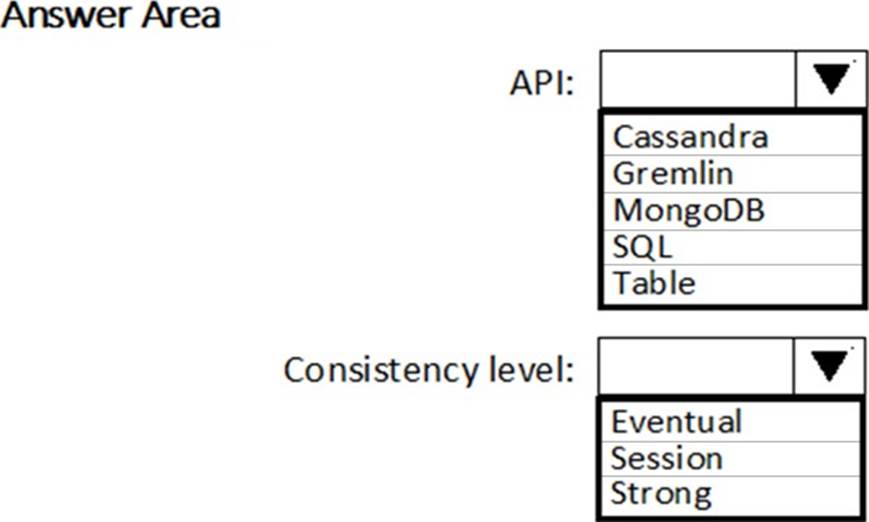

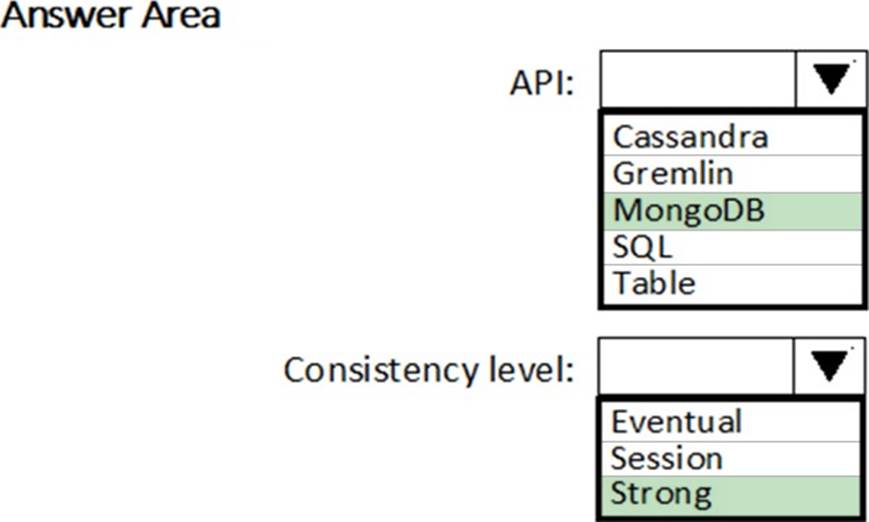

HOTSPOT

You need to build a solution to collect the telemetry data for Race Control.

What should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

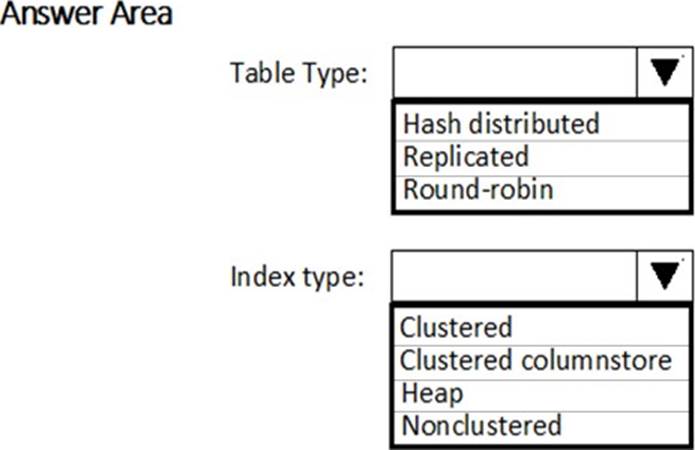

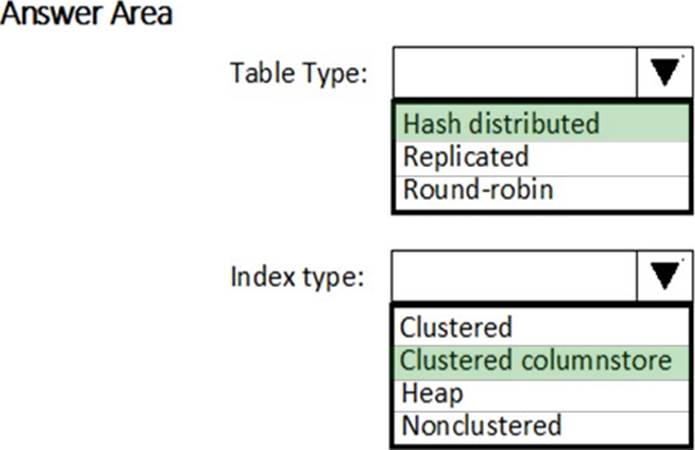

HOTSPOT

You are building the data store solution for Mechanical Workflow.

How should you configure Table1? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

On which data store should you configure TDE to meet the technical requirements?

- A . Cosmos DB

- B . SQL Data Warehouse

- C . SQL Database

What should you include in the Data Factory pipeline for Race Central?

- A . a copy activity that uses a stored procedure as a source

- B . a copy activity that contains schema mappings

- C . a delete activity that has logging enabled

- D . a filter activity that has a condition

You are monitoring the Data Factory pipeline that runs from Cosmos DB to SQL Database for Race Central.

You discover that the job takes 45 minutes to run.

What should you do to improve the performance of the job?

- A . Decrease parallelism for the copy activities.

- B . Increase the data integration units.

- C . Configure the copy activities to use staged copy.

- D . Configure the copy activities to perform compression.

Which two metrics should you use to identify the appropriate RU/s for the telemetry data? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Number of requests

- B . Number of requests exceeded capacity

- C . End to end observed read latency at the 99th percentile

- D . Session consistency

- E . Data + Index storage consumed

- F . Avg Throughput/s

Topic 4, ADatum Corporation

Case study

Overview

ADatum Corporation is a retailer that sells products through two sales channels: retail stores and a website.

Existing Environment

ADatum has one database server that has Microsoft SQL Server 2016 installed. The server hosts three mission-critical databases named SALESDB, DOCDB, and REPORTINGDB.

SALESDB collects data from the stores and the website.

DOCDB stores documents that connect to the sales data in SALESDB. The documents are stored in two different JSON formats based on the sales channel.

REPORTINGDB stores reporting data and contains several columnstore indexes. A daily process creates reporting data in REPORTINGDB from the data in SALESDB. The process is implemented as a SQL Server Integration Services (SSIS) package that runs a stored procedure from SALESDB.

Requirements

Planned Changes

ADatum plans to move the current data infrastructure to Azure.

The new infrastructure has the following requirements:

– Migrate SALESDB and REPORTINGDB to an Azure SQL database.

– Migrate DOCDB to Azure Cosmos DB.

– The sales data, including the documents in JSON format, must be gathered as it arrives and analyzed online by using Azure Stream Analytics. The analytic process will perform aggregations that must be done continuously, without gaps, and without overlapping.

– As they arrive, all the sales documents in JSON format must be transformed into one consistent format.

– Azure Data Factory will replace the SSIS process of copying the data from SALESDB to REPORTINGDB.

Technical Requirements

The new Azure data infrastructure must meet the following technical requirements:

– Data in SALESDB must be encrypted by using Transparent Data Encryption (TDE). The encryption must use your own key.

– SALESDB must be restorable to any given minute within the past three weeks.

– Real-time processing must be monitored to ensure that workloads are sized properly based on actual usage patterns.

– Missing indexes must be created automatically for REPORTINGDB.

– Disk IO, CPU, and memory usage must be monitored for SALESDB.

You need to implement event processing by using Stream Analytics to produce consistent JSON documents.

Which three actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Define an output to Cosmos DB.

- B . Define a query that contains a JavaScript user-defined aggregate (UDA) function.

- C . Define a reference input.

- D . Define a transformation query.

- E . Define an output to Azure Data Lake Storage Gen2.

- F . Define a stream input.
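To make the "one consistent format" requirement concrete, here is a minimal Python sketch of the kind of per-event transformation involved. It is not Stream Analytics query syntax. The case study does not describe the two JSON layouts, so every field name below (`storeId`, `id`, `amount`, `orderNumber`, `order.total`) is a hypothetical assumption chosen for illustration only.

```python
import json


def normalize_sale(raw):
    """Map either of two hypothetical sales-document layouts to one shape."""
    doc = json.loads(raw)
    if "storeId" in doc:
        # Hypothetical retail-store layout: flat document with id and amount.
        return {
            "channel": "store",
            "saleId": doc["id"],
            "total": float(doc["amount"]),
        }
    # Hypothetical website layout: total nested under an "order" object.
    return {
        "channel": "web",
        "saleId": doc["orderNumber"],
        "total": float(doc["order"]["total"]),
    }


store_event = '{"storeId": 7, "id": "S-1", "amount": "19.90"}'
web_event = '{"orderNumber": "W-9", "order": {"total": 42}}'
print(normalize_sale(store_event))
print(normalize_sale(web_event))
```

In a streaming job the same idea would sit between the input that delivers the events and the output that stores the normalized documents.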

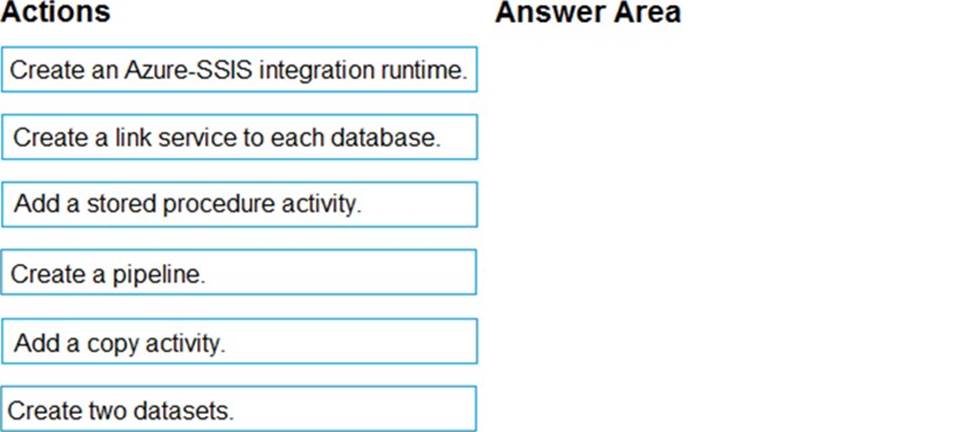

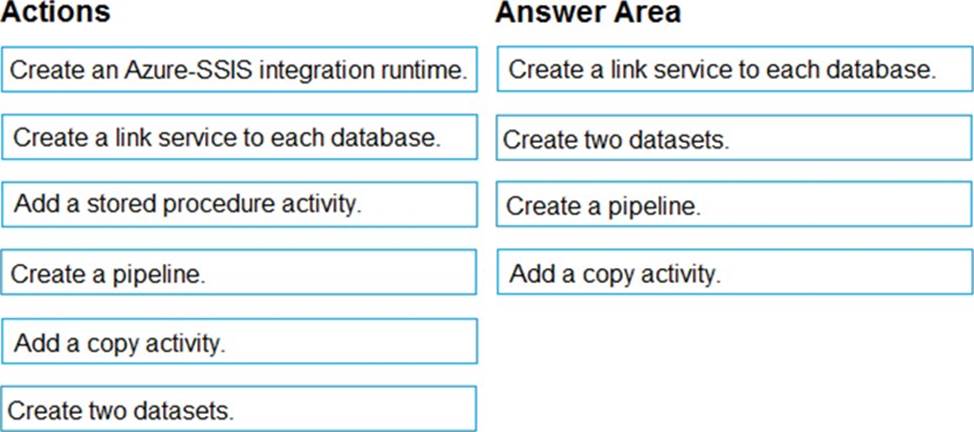

DRAG DROP

You need to replace the SSIS process by using Data Factory.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

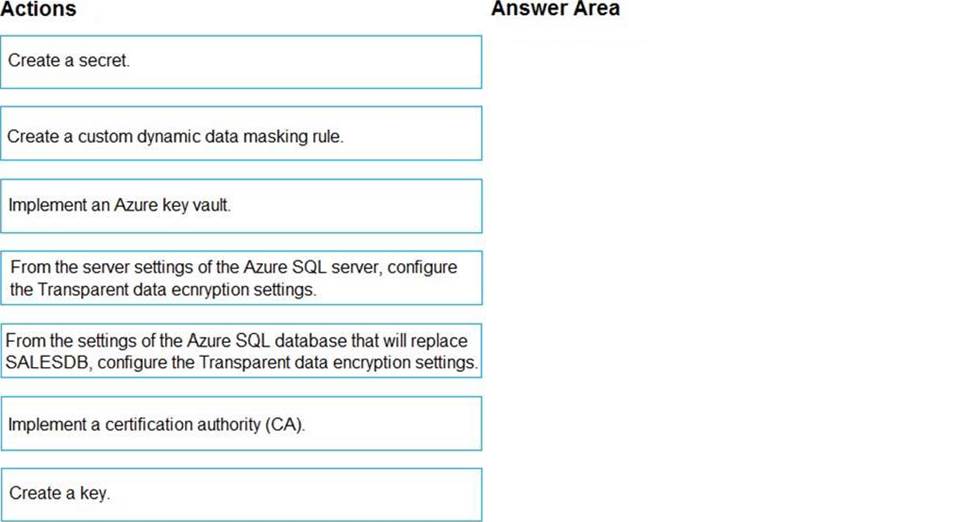

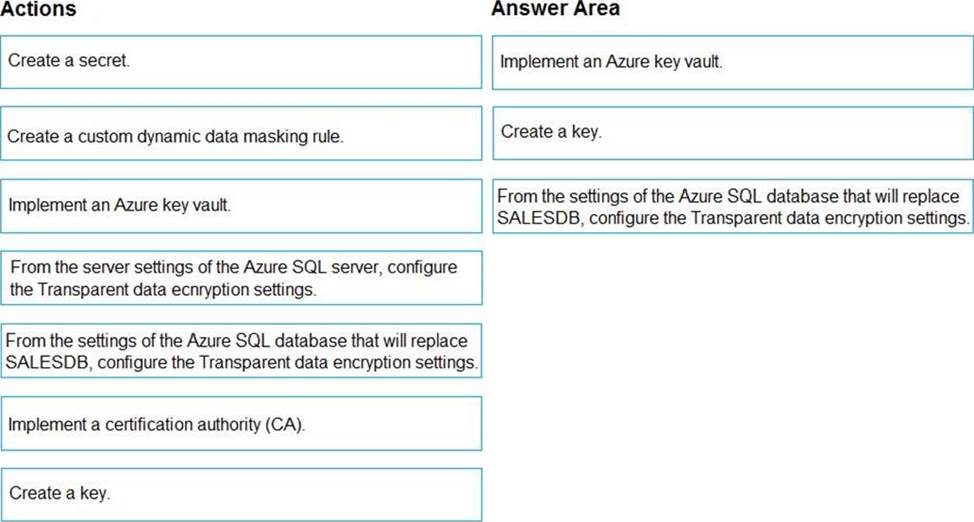

DRAG DROP

You need to implement the encryption for SALESDB.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Which windowing function should you use to perform the streaming aggregation of the sales data?

- A . Tumbling

- B . Hopping

- C . Sliding

- D . Session
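For reference when comparing the options, the case study asks for aggregations that run continuously, without gaps, and without overlapping. A tumbling window is the window type made of fixed-size, contiguous, non-overlapping intervals, so each event falls into exactly one window. The Python sketch below illustrates that bucketing. The event shape `(timestamp_seconds, amount)` and the function name are assumptions for illustration, and the boundary convention is simplified and may differ from the service's.

```python
from collections import defaultdict


def tumbling_window_totals(events, window_seconds):
    """Sum amounts per fixed-size, contiguous, non-overlapping window.

    events: iterable of (timestamp_seconds, amount) pairs (hypothetical shape).
    Returns a dict mapping each window start time to the total for that window.
    """
    totals = defaultdict(float)
    for timestamp, amount in events:
        # Integer division assigns each event to exactly one window.
        window_start = (timestamp // window_seconds) * window_seconds
        totals[window_start] += amount
    return dict(totals)


sales = [(1, 10.0), (4, 5.0), (10, 2.5), (19, 7.5), (20, 1.0)]
print(tumbling_window_totals(sales, 10))  # {0: 15.0, 10: 10.0, 20: 1.0}
```

Hopping and sliding windows, by contrast, can place one event in several windows, and session windows leave gaps between periods of activity.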

