Testlet 1

Case Study

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next sections of the exam. After you begin a new section, you cannot return to this section.

To start the case study

To display the first question on this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Background

You are a developer for Proseware, Inc. You are developing an application that applies a set of governance policies for Proseware’s internal services, external services, and applications. The application will also provide a shared library for common functionality.

Requirements

Policy service

You develop and deploy a stateful ASP.NET Core 2.1 web application named Policy service to an Azure App Service Web App. The application reacts to events from Azure Event Grid and performs policy actions based on those events.

The application must include the Event Grid Event ID field in all Application Insights telemetry.

Policy service must use Application Insights to automatically scale with the number of policy actions that it is performing.

Policies

Log Policy

All Azure App Service Web Apps must write logs to Azure Blob storage. All log files should be saved to a container named logdrop. Logs must remain in the container for 15 days.

Authentication events

Authentication events are used to monitor users signing in and signing out. All authentication events must be processed by Policy service. Sign outs must be processed as quickly as possible.

PolicyLib

You have a shared library named PolicyLib that contains functionality common to all ASP.NET Core web services and applications.

The PolicyLib library must:

– Exclude non-user actions from Application Insights telemetry.

– Provide methods that allow a web service to scale itself.

– Ensure that scaling actions do not disrupt application usage.

Other

Anomaly detection service

You have an anomaly detection service that analyzes log information for anomalies. It is implemented as an Azure Machine Learning model. The model is deployed as a web service.

If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.

Health monitoring

All web applications and services have health monitoring at the /health service endpoint.

Issues

Policy loss

When you deploy Policy service, policies may not be applied if they were in the process of being applied during the deployment.

Performance issue

When under heavy load, the anomaly detection service undergoes slowdowns and rejects connections.

Notification latency

Users report that anomaly detection emails can sometimes arrive several minutes after an anomaly is detected.

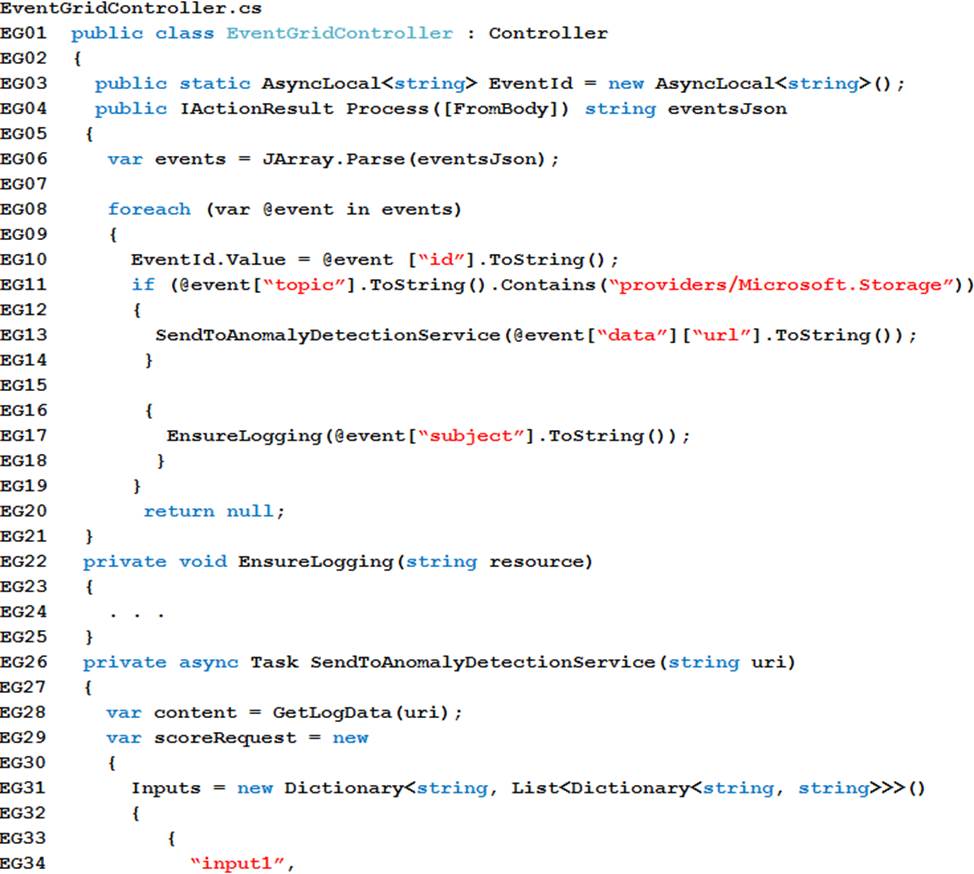

App code

Relevant portions of the app files are shown below. Line numbers are included for reference only and include a two-character prefix that denotes the specific file to which they belong.

You need to resolve a notification latency issue.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Set Always On to false.

- B. Set Always On to true.

- C. Ensure that the Azure Function is set to use a consumption plan.

- D. Ensure that the Azure Function is using an App Service plan.

Answer: B, D

Explanation:

Azure Functions can run on either a Consumption Plan or a dedicated App Service Plan. If you run in a dedicated mode, you need to turn on the Always On setting for your Function App to run properly. The Function runtime will go idle after a few minutes of inactivity, so only HTTP triggers will actually "wake up" your functions. This is similar to how WebJobs must have Always On enabled.

Scenario: Notification latency: Users report that anomaly detection emails can sometimes arrive several minutes after an anomaly is detected.

Anomaly detection service: You have an anomaly detection service that analyzes log information for anomalies. It is implemented as an Azure Machine Learning model. The model is deployed as a web service.

If an anomaly is detected, an Azure Function that emails administrators is called by using an HTTP WebHook.

References:

https://github.com/Azure/Azure-Functions/wiki/Enable-Always-On-when-running-on-dedicated-App-Service-Plan

Testlet 2

Case Study

LabelMaker app

Coho Winery produces, bottles, and distributes a variety of wines globally. You are a developer implementing highly scalable and resilient applications to support online order processing by using Azure solutions.

Coho Winery has a LabelMaker application that prints labels for wine bottles. The application sends data to several printers. The application consists of five modules that run independently on virtual machines (VMs). Coho Winery plans to move the application to Azure and continue to support label creation.

External partners send data to the LabelMaker application to include artwork and text for custom label designs.

Requirements

Data

You identify the following requirements for data management and manipulation:

• Order data is stored as nonrelational JSON and must be queried using Structured Query Language (SQL).

• Changes to the Order data must reflect immediately across all partitions. All reads to the Order data must fetch the most recent writes.

Security

You have the following security requirements:

• Users of Coho Winery applications must be able to provide access to documents, resources, and applications to external partners.

• External partners must use their own credentials and authenticate with their organization’s identity management solution.

• External partner logins must be audited monthly for application use by a user account administrator to maintain company compliance.

• Storage of e-commerce application settings must be maintained in Azure Key Vault.

• E-commerce application sign-ins must be secured by using Azure App Service authentication and Azure Active Directory (AAD).

• Conditional access policies must be applied at the application level to protect company content.

• The LabelMaker application must be secured by using an AAD account that has full access to all namespaces of the Azure Kubernetes Service (AKS) cluster.

LabelMaker app

Azure Monitor Container Health must be used to monitor the performance of workloads that are deployed to Kubernetes environments and hosted on Azure Kubernetes Service (AKS).

You must use Azure Container Registry to publish images that support the AKS deployment.

Architecture

Issues

Calls to the Printer API App fail periodically due to printer communication timeouts.

Printer communications timeouts occur after 10 seconds. The label printer must only receive up to 5 attempts within one minute.

The order workflow fails to run upon initial deployment to Azure.

Order.json

Relevant portions of the app files are shown below. Line numbers are included for reference only.

This JSON file contains a representation of the data for an order that includes a single item.

HOTSPOT

You need to ensure that you can deploy the LabelMaker application.

How should you complete the CLI commands? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: group

Create a resource group with the az group create command. An Azure resource group is a logical group in which Azure resources are deployed and managed.

The following example creates a resource group named myResourceGroup in the westeurope location.

    az group create --name myResourceGroup --location westeurope

Box 2: CohoWinterLabelMaker

Use the same resource group name that the second command references.

Box 3: aks

The az aks create command is used to create a new managed Kubernetes cluster.

Box 4: monitoring

Scenario: LabelMaker app

Azure Monitor Container Health must be used to monitor the performance of workloads that are deployed to Kubernetes environments and hosted on Azure Kubernetes Service (AKS).

You must use Azure Container Registry to publish images that support the AKS deployment.
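The four boxes can be read as two Azure CLI invocations. The following is a minimal sketch that only assembles and prints the argument lists, without running anything. The resource group name comes from Box 2; the location `westeurope` and the cluster name `labelmaker-aks` are assumptions chosen for illustration and do not appear in the scenario.

```python
import shlex


def build_labelmaker_commands(resource_group, location, cluster_name):
    """Return the two Azure CLI invocations as argument lists (nothing is executed)."""
    # Box 1 and Box 2: create the resource group that will hold the cluster.
    create_group = [
        "az", "group", "create",
        "--name", resource_group,
        "--location", location,
    ]
    # Box 3 and Box 4: create the AKS cluster with the monitoring add-on enabled.
    create_cluster = [
        "az", "aks", "create",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--enable-addons", "monitoring",
    ]
    return [create_group, create_cluster]


# Location and cluster name are hypothetical placeholders.
commands = build_labelmaker_commands("CohoWinterLabelMaker", "westeurope", "labelmaker-aks")
for argv in commands:
    print(shlex.join(argv))
```

Keeping the commands as argument lists makes it easy to check that both reference the same resource group before anything is submitted.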

Testlet 3

Case Study

Background

Wide World Importers is moving all their datacenters to Azure. The company has developed several applications and services to support supply chain operations and would like to leverage serverless computing where possible.

Current environment

Windows Server 2016 virtual machine

This virtual machine (VM) runs BizTalk Server 2016.

The VM runs the following workflows:

– Ocean Transport: This workflow gathers and validates container information including container contents and arrival notices at various shipping ports.

– Inland Transport: This workflow gathers and validates trucking information including fuel usage, number of stops, and routes.

The VM supports the following REST API calls:

– Container API: This API provides container information including weight, contents, and other attributes.

– Location API: This API provides location information regarding shipping ports of call and truck stops.

– Shipping REST API: This API provides shipping information for use and display on the shipping website.

Shipping Data

The application uses MongoDB JSON document storage database for all container and transport information.

Shipping Web Site

The site displays shipping container tracking information and container contents. The site is located at http://shipping.wideworldimporters.com

Proposed solution

The on-premises shipping application must be moved to Azure. The VM has been migrated to a new Standard_D16s_v3 Azure VM by using Azure Site Recovery and must remain running in Azure to complete the BizTalk component migrations. You create a Standard_D16s_v3 Azure VM to host BizTalk Server.

The Azure architecture diagram for the proposed solution is shown below:

Shipping Logic App

The Shipping Logic app must meet the following requirements:

– Support the ocean transport and inland transport workflows by using a Logic App.

– Support industry-standard protocol X12 message format for various messages including vessel content details and arrival notices.

– Secure resources to the corporate VNet and use dedicated storage resources with a fixed costing model.

– Maintain on-premises connectivity to support legacy applications and final BizTalk migrations.

Shipping Function app

Implement secure function endpoints by using app-level security and include Azure Active Directory (Azure AD).

REST APIs

The REST APIs that support the solution must meet the following requirements:

– Secure resources to the corporate VNet.

– Allow deployment to a testing location within Azure while not incurring additional costs.

– Automatically scale to double capacity during peak shipping times while not causing application downtime.

– Minimize costs when selecting an Azure payment model.

Shipping data

Data migration from on-premises to Azure must minimize costs and downtime.

Shipping website

Use Azure Content Delivery Network (CDN) and ensure maximum performance for dynamic content while minimizing latency and costs.

Issues

Windows Server 2016 VM

The VM shows high network latency, jitter, and high CPU utilization. The VM is critical and has not been backed up in the past. The VM must enable a quick restore from a 7-day snapshot to include in-place restore of disks in case of failure.

Shipping website and REST APIs

The following error message displays while you are testing the website:

You need to support the requirements for the Shipping Logic App.

What should you use?

- A. Azure Active Directory Application Proxy

- B. Point-to-Site (P2S) VPN connection

- C. Site-to-Site (S2S) VPN connection

- D. On-premises Data Gateway

Answer: D

Explanation:

Before you can connect to on-premises data sources from Azure Logic Apps, download and install the on-premises data gateway on a local computer. The gateway works as a bridge that provides quick data transfer and encryption between data sources on premises (not in the cloud) and your logic apps.

The gateway supports BizTalk Server 2016.

Note: Microsoft has now fully incorporated the Azure BizTalk Services capabilities into Logic Apps and Azure App Service Hybrid Connections.

The Logic Apps Enterprise Integration Pack brings some of the enterprise B2B capabilities, such as support for AS2, X12, and EDI standards.

Scenario: The Shipping Logic app must meet the following requirements:

– Support the ocean transport and inland transport workflows by using a Logic App.

– Support industry standard protocol X12 message format for various messages including vessel content details and arrival notices.

– Secure resources to the corporate VNet and use dedicated storage resources with a fixed costing model.

– Maintain on-premises connectivity to support legacy applications and final BizTalk migrations.

References: https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-gateway-install

HOTSPOT

You need to configure Azure App Service to support the REST API requirements.

Which values should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Plan: Standard

The Standard tier supports autoscaling.

Instance Count: 10

The maximum instance count for the Standard tier is 10.

Scenario:

The REST APIs that support the solution must meet the following requirements:

– Allow deployment to a testing location within Azure while not incurring additional costs.

– Automatically scale to double capacity during peak shipping times while not causing application downtime.

– Minimize costs when selecting an Azure payment model.

References: https://azure.microsoft.com/en-us/pricing/details/app-service/plans/

Question Set 4

You are writing code to create and run an Azure Batch job. You have created a pool of compute nodes. You need to choose the right class and its method to submit a batch job to the Batch service.

Which method should you use?

- A. JobOperations.EnableJobAsync(String, IEnumerable<BatchClientBehavior>, CancellationToken)

- B. JobOperations.CreateJob()

- C. CloudJob.Enable(IEnumerable<BatchClientBehavior>)

- D. JobOperations.EnableJob(String, IEnumerable<BatchClientBehavior>)

- E. CloudJob.CommitAsync(IEnumerable<BatchClientBehavior>, CancellationToken)

Answer: E

Explanation:

A Batch job is a logical grouping of one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

The Commit method submits the job to the Batch service. Initially the job has no tasks.

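    {
        // Create the job object locally; nothing is sent to the Batch service yet.
        CloudJob job = batchClient.JobOperations.CreateJob();
        job.Id = JobId;
        job.PoolInformation = new PoolInformation { PoolId = PoolId };

        // Commit submits the job to the Batch service.
        job.Commit();
    }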

…

References: https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

DRAG DROP

You are developing Azure WebJobs. You need to recommend a WebJob type for each scenario.

Which WebJob type should you recommend? To answer, drag the appropriate WebJob types to the correct scenarios. Each WebJob type may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Continuous

Continuous runs on all instances that the web app runs on. You can optionally restrict the WebJob to a single instance.

Box 2: Triggered

Triggered runs on a single instance that Azure selects for load balancing.

Box 3: Continuous

Continuous supports remote debugging.

Note:

The following table describes the differences between continuous and triggered WebJobs.

References:

https://docs.microsoft.com/en-us/azure/app-service/web-sites-create-web-jobs

DRAG DROP

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles. You need to create compute nodes for the solution on Azure Batch.

What should you do? Put the actions in the correct order.

Explanation:

With the Azure CLI:

Step 1: In the Azure CLI, run the command: az batch account create

First we create a batch account.

Step 2: In Azure CLI, run the command: az batch pool create

Now that you have a Batch account, create a sample pool of Linux compute nodes using the az batch pool create command.

Step 3: In Azure CLI, run the command: az batch job create

Now that you have a pool, create a job to run on it. A Batch job is a logical group for one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. Create a Batch job by using the az batch job create command.

Step 4: In Azure CLI, run the command: az batch task create

Now use the az batch task create command to create some tasks to run in the job.
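The ordering follows from what each resource needs before it can exist: a pool needs an account, a job needs a pool, and a task needs a job. As a rough sketch of that reasoning, the dependency table below (written for this illustration, not taken from any Azure tooling) is sorted with Python's standard `graphlib` module.

```python
from graphlib import TopologicalSorter

# Each command maps to the set of commands whose resources it depends on.
DEPENDS_ON = {
    "az batch account create": set(),
    "az batch pool create": {"az batch account create"},
    "az batch job create": {"az batch pool create"},
    "az batch task create": {"az batch job create"},
}


def creation_order(depends_on):
    """Return the commands in an order that satisfies every dependency."""
    return list(TopologicalSorter(depends_on).static_order())


order = creation_order(DEPENDS_ON)
for step, command in enumerate(order, start=1):
    print(f"Step {step}: {command}")
```

Because the dependencies form a single chain, only one valid order exists, and it matches the four steps listed above.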

References: https://docs.microsoft.com/en-us/azure/batch/quick-create-cli

DRAG DROP

You are deploying an Azure Kubernetes Services (AKS) cluster that will use multiple containers.

You need to create the cluster and verify that the services for the containers are configured correctly and available.

Which four commands should you use to develop the solution? To answer, move the appropriate command segments from the list of command segments to the answer area and arrange them in the correct order.

Explanation:

Step 1: az group create

Create a resource group with the az group create command. An Azure resource group is a logical group in which Azure resources are deployed and managed.

Example: The following example creates a resource group named myAKSCluster in the eastus location.

    az group create --name myAKSCluster --location eastus

Step 2: az aks create

Use the az aks create command to create an AKS cluster.

Step 3: az aks get-credentials

Configure kubectl with the credentials for the new AKS cluster. kubectl cannot reach the cluster until this is done. Example:

    az aks get-credentials --name aks-cluster --resource-group aks-resource-group

Step 4: kubectl apply

To deploy your application, use the kubectl apply command. This command parses the manifest file and creates the defined Kubernetes objects.

References:

https://docs.bitnami.com/azure/get-started-aks/

DRAG DROP

You are preparing to deploy a medical records application to an Azure virtual machine (VM). The application will be deployed by using a VHD produced by an on-premises build server.

You need to ensure that both the application and related data are encrypted during and after deployment to Azure.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

Step 1: Encrypt the on-premises VHD by using BitLocker without a TPM. Upload the VM to Azure Storage

Step 2: Run the Azure PowerShell command Set-AzureRMVMOSDisk

To use an existing disk instead of creating a new disk you can use the Set-AzureRMVMOSDisk command.

Example:

    $osDiskName = $vmname + '_osDisk'
    $osDiskCaching = 'ReadWrite'
    $osDiskVhdUri = "https://$stoname.blob.core.windows.net/vhds/" + $vmname + "_os.vhd"
    $vm = Set-AzureRmVMOSDisk -VM $vm -VhdUri $osDiskVhdUri -name $osDiskName -Create

Step 3: Run the Azure PowerShell command Set-AzureRmVMDiskEncryptionExtension

Use the Set-AzureRmVMDiskEncryptionExtension cmdlet (named Set-AzVMDiskEncryptionExtension in the newer Az PowerShell module) to enable encryption on a running IaaS virtual machine in Azure.

Incorrect:

Not TPM: BitLocker can work with or without a TPM. A TPM is a tamper resistant security chip on the system board that will hold the keys for encryption and check the integrity of the boot sequence and allows the most secure BitLocker implementation. A VM does not have a TPM.

References:

https://www.itprotoday.com/iaaspaas/use-existing-vhd-azurerm-vm

DRAG DROP

You plan to create a Docker image that runs an ASP.NET Core application named ContosoApp. You have a setup script named setupScript.ps1 and a series of application files including ContosoApp.dll.

You need to create a Dockerfile document that meets the following requirements:

– Call setupScript.ps1 when the container is built.

– Run ContosoApp.dll when the container starts.

The Dockerfile document must be created in the same folder where ContosoApp.dll and setupScript.ps1 are stored.

Which four commands should you use to develop the solution? To answer, move the appropriate commands from the list of commands to the answer area and arrange them in the correct order.

Explanation:

Step 1: WORKDIR /apps/ContosoApp

Step 2: COPY ./ .

The Dockerfile document must be created in the same folder where ContosoApp.dll and setupScript.ps1 are stored.

Step 3: EXPOSE ./ContosApp/ /app/ContosoApp

Step 4: CMD powershell ./setupScript.ps1

ENTRYPOINT ["dotnet", "ContosoApp.dll"]

You need to create a Dockerfile document that meets the following requirements:

– Call setupScript.ps1 when the container is built.

– Run ContosoApp.dll when the container starts.

References:

https://docs.microsoft.com/en-us/azure/app-service/containers/tutorial-custom-docker-image

DRAG DROP

You are creating a script that will run a large workload on an Azure Batch pool. Resources will be reused and do not need to be cleaned up after use.

You have the following parameters:

You need to write an Azure CLI script that will create the jobs, tasks, and the pool.

In which order should you arrange the commands to develop the solution? To answer, move the appropriate commands from the list of command segments to the answer area and arrange them in the correct order.

Explanation:

Step 1: az batch pool create

    # Create a new Linux pool with a virtual machine configuration.
    az batch pool create \
        --id mypool \
        --vm-size Standard_A1 \
        --target-dedicated 2 \
        --image canonical:ubuntuserver:16.04-LTS \
        --node-agent-sku-id "batch.node.ubuntu 16.04"

Step 2: az batch job create

    # Create a new job to encapsulate the tasks that are added.
    az batch job create \
        --id myjob \
        --pool-id mypool

Step 3: az batch task create

    # Add tasks to the job. Here the task is a basic shell command.
    az batch task create \
        --job-id myjob \
        --task-id task1 \
        --command-line "/bin/bash -c 'printenv AZ_BATCH_TASK_WORKING_DIR'"

Step 4: for i in {1..$numberOfJobs} do

References:

https://docs.microsoft.com/bs-latn-ba/azure/batch/scripts/batch-cli-sample-run-job

HOTSPOT

You are developing an Azure Function App by using Visual Studio. The app will process orders input by an Azure Web App. The web app places the order information into Azure Queue Storage.

You need to review the Azure Function App code shown below.

NOTE: Each correct selection is worth one point.

Explanation:

Box 1: No

ExpirationTime – The time that the message expires.

InsertionTime – The time that the message was added to the queue.

Box 2: Yes

maxDequeueCount – The number of times to try processing a message before moving it to the poison queue. Default value is 5.
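The effect of maxDequeueCount can be illustrated with a small simulation. This is a simplified model written for this explanation, not the Functions runtime itself; the queue name `orders` and both handlers are hypothetical. It relies on the convention that the poison queue is named after the original queue with a `-poison` suffix.

```python
def process_with_retries(message, handler, queue_name="orders", max_dequeue_count=5):
    """Try the handler up to max_dequeue_count times and report where the message ends up.

    Returns a (destination, attempts) tuple.
    """
    for attempt in range(1, max_dequeue_count + 1):
        try:
            handler(message)
            return ("processed", attempt)
        except Exception:
            # On failure the message becomes visible again and is retried.
            continue
    # Every attempt failed, so the message is moved to the poison queue.
    return (f"{queue_name}-poison", max_dequeue_count)


def always_fails(message):
    """Hypothetical handler that can never process the message."""
    raise ValueError("cannot parse order")


result = process_with_retries("order-42", always_fails)
print(result)
```

With the default of 5, a message that never succeeds is attempted five times and then lands in `orders-poison`.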

Box 3: Yes

When there are multiple queue messages waiting, the queue trigger retrieves a batch of messages and invokes function instances concurrently to process them. By default, the batch size is 16. When the number being processed gets down to 8, the runtime gets another batch and starts processing those messages. So the maximum number of concurrent messages being processed per function on one virtual machine (VM) is 24.
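The figure of 24 is the batch size plus the threshold at which the next batch is fetched. A minimal sketch of that arithmetic follows. The parameter names mirror the `batchSize` and `newBatchThreshold` settings in host.json, and the default threshold of half the batch size is taken from the description above.

```python
def max_concurrent_messages(batch_size=16, new_batch_threshold=None):
    """Upper bound on messages processed concurrently per function on one VM."""
    if new_batch_threshold is None:
        # Per the description above, a new batch is fetched once the number
        # of in-flight messages drops to half the batch size.
        new_batch_threshold = batch_size // 2
    # Worst case: new_batch_threshold messages are still running when a
    # full new batch of batch_size messages starts.
    return batch_size + new_batch_threshold


print(max_concurrent_messages())  # 16 + 8 = 24
```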

Box 4: Yes

References:

https://docs.microsoft.com/en-us/azure/azure-functions/functions-bindings-storage-queue

DRAG DROP

You are developing a Docker/Go application by using Azure App Service Web App for Containers. You plan to run the container in an App Service on Linux. You identify a Docker container image to use.

None of your current resource groups reside in a location that supports Linux. You must minimize the number of resource groups required.

You need to create the application and perform an initial deployment.

Which three Azure CLI commands should you use to develop the solution? To answer, move the appropriate commands from the list of commands to the answer area and arrange them in the correct order.

Explanation:

You can host native Linux applications in the cloud by using Azure Web Apps. To create a Web App for Containers, you must run Azure CLI commands that create a group, then a service plan, and finally the web app itself.

Step 1: az group create

In the Cloud Shell, create a resource group with the az group create command.

Step 2: az appservice plan create

In the Cloud Shell, create an App Service plan in the resource group with the az appservice plan create command.

Step 3: az webapp create

In the Cloud Shell, create a web app in the myAppServicePlan App Service plan with the az webapp create command. Don’t forget to replace <app name> with a unique app name, and <docker-ID> with your Docker ID.

References:

https://docs.microsoft.com/mt-mt/azure/app-service/containers/quickstart-docker-go?view=sql-server-ver15

DRAG DROP

You are preparing to deploy an Azure virtual machine (VM)-based application.

The VMs that run the application have the following requirements:

– When a VM is provisioned the firewall must be automatically configured before it can access Azure resources

– Supporting services must be installed by using an Azure PowerShell script that is stored in Azure Storage

You need to ensure that the requirements are met.

Which features should you use? To answer, drag the appropriate features to the correct requirements. Each feature may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

References: https://docs.microsoft.com/en-us/azure/automation/automation-hybrid-runbook-worker

https://docs.microsoft.com/en-us/azure/virtual-machines/windows/run-command

DRAG DROP

You are developing a microservices solution. You plan to deploy the solution to a multinode Azure Kubernetes Service (AKS) cluster.

You need to deploy a solution that includes the following features:

– reverse proxy capabilities

– configurable traffic routing

– TLS termination with a custom certificate

Which components should you use? To answer, drag the appropriate components to the correct requirements. Each component may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Helm

To create the ingress controller, use Helm to install nginx-ingress.

Box 2: kubectl

To find the cluster IP address of a Kubernetes pod, use the kubectl get pod command on your local machine, with the option -o wide.

Box 3: Ingress Controller

An ingress controller is a piece of software that provides reverse proxy, configurable traffic routing, and TLS termination for Kubernetes services. Kubernetes ingress resources are used to configure the ingress rules and routes for individual Kubernetes services.
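To make "configurable traffic routing" concrete, the sketch below models ingress rules as host and path-prefix pairs that map to a backend service, and picks the longest matching prefix. It is a deliberately simplified illustration and not how any particular ingress controller is implemented; the host name and service names are hypothetical, and real controllers match prefixes on path-segment boundaries rather than raw string prefixes.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    host: str
    path_prefix: str
    service: str


def route(rules: List[Rule], host: str, path: str) -> Optional[str]:
    """Return the backend service for a request, or None if no rule matches."""
    candidates = [
        rule for rule in rules
        if rule.host == host and path.startswith(rule.path_prefix)
    ]
    if not candidates:
        return None
    # The most specific (longest) prefix wins.
    return max(candidates, key=lambda rule: len(rule.path_prefix)).service


# Hypothetical rules for illustration only.
rules = [
    Rule("shop.example.com", "/", "frontend"),
    Rule("shop.example.com", "/api", "orders-api"),
]

print(route(rules, "shop.example.com", "/api/orders"))
print(route(rules, "shop.example.com", "/index.html"))
print(route(rules, "other.example.com", "/"))
```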

Incorrect Answers:

Virtual Kubelet: Virtual Kubelet is an open-source Kubernetes kubelet implementation that masquerades as a kubelet. This allows Kubernetes nodes to be backed by Virtual Kubelet providers such as serverless cloud container platforms.

CoreDNS: CoreDNS is a flexible, extensible DNS server that can serve as the Kubernetes cluster DNS. Like Kubernetes, the CoreDNS project is hosted by the CNCF.

Reference:

https://docs.microsoft.com/bs-cyrl-ba/azure/aks/ingress-basic

https://www.digitalocean.com/community/tutorials/how-to-inspect-kubernetes-networking

HOTSPOT

You are configuring a development environment for your team. You deploy the latest Visual Studio image from the Azure Marketplace to your Azure subscription. The development environment requires several software development kits (SDKs) and third-party components to support application development across the organization. You install and customize the deployed virtual machine (VM) for your development team. The customized VM must be saved to allow provisioning of a new team member development environment.

You need to save the customized VM for future provisioning.

Which tools or services should you use? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Azure PowerShell

Creating an image directly from the VM ensures that the image includes all of the disks associated with the VM, including the OS disk and any data disks.

Before you begin, make sure that you have the latest version of the Azure PowerShell module.

You use Sysprep to generalize the virtual machine, then use Azure PowerShell to create the image.

Box 2: Azure Blob Storage

References:

https://docs.microsoft.com/en-us/azure/virtual-machines/windows/capture-image-resource#create-an-image-of-a-vm-using-powershell

DRAG DROP

You are preparing to deploy an application to an Azure Kubernetes Service (AKS) cluster. The application must only be available from within the VNet that includes the cluster. You need to deploy the application.

How should you complete the deployment YAML? To answer, drag the appropriate YAML segments to the correct locations. Each YAML segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

To create an internal load balancer, create a service manifest named internal-lb.yaml with the service type LoadBalancer and the azure-load-balancer-internal annotation as shown in the following example:

YAML:

apiVersion: v1
kind: Service
metadata:
  name: internal-app
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  ports:
  - port: 80
  selector:
    app: internal-app

References: https://docs.microsoft.com/en-us/azure/aks/internal-lb
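As a minimal sketch, the same manifest can be generated in Python. The helper name internal_lb_service is hypothetical and used only for illustration; the fields mirror the YAML above.

```python
import json


def internal_lb_service(name: str, port: int = 80) -> dict:
    """Build a Service manifest that requests an internal Azure load balancer.

    Hypothetical helper for illustration; the fields mirror the YAML manifest.
    """
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {
            "name": name,
            "annotations": {
                # This annotation is what keeps the load balancer internal to the VNet.
                "service.beta.kubernetes.io/azure-load-balancer-internal": "true",
            },
        },
        "spec": {
            "type": "LoadBalancer",
            "ports": [{"port": port}],
            "selector": {"app": name},
        },
    }


manifest = internal_lb_service("internal-app")
print(json.dumps(manifest, indent=2))
```

Because kubectl also accepts JSON manifests, the printed output could be saved to a file and applied with kubectl apply -f.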

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing a solution that will be deployed to an Azure Kubernetes Service (AKS) cluster. The solution will include a custom VNet, Azure Container Registry images, and an Azure Storage account.

The solution must allow dynamic creation and management of all Azure resources within the AKS cluster.

You need to configure an AKS cluster for use with the Azure APIs.

Solution: Enable the Azure Policy Add-on for Kubernetes to connect the Azure Policy service to the GateKeeper admission controller for the AKS cluster. Apply a built-in policy to the cluster.

Does the solution meet the goal?

- A . Yes

- B . No

B

Explanation:

Instead, create an AKS cluster that supports network policy. Create and apply a network policy to allow traffic only from within a defined namespace.

References:

https://docs.microsoft.com/en-us/azure/aks/use-network-policies

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are developing a solution that will be deployed to an Azure Kubernetes Service (AKS) cluster. The solution will include a custom VNet, Azure Container Registry images, and an Azure Storage account.

The solution must allow dynamic creation and management of all Azure resources within the AKS cluster.

You need to configure an AKS cluster for use with the Azure APIs.

Solution: Create an AKS cluster that supports network policy. Create and apply a network policy to allow traffic only from within a defined namespace.

Does the solution meet the goal?

- A . Yes

- B . No

A

Explanation:

When you run modern, microservices-based applications in Kubernetes, you often want to control which components can communicate with each other. The principle of least privilege should be applied to how traffic can flow between pods in an Azure Kubernetes Service (AKS) cluster. For example, you likely want to block traffic directly to back-end applications. The Network Policy feature in Kubernetes lets you define rules for ingress and egress traffic between pods in a cluster.

References:

https://docs.microsoft.com/en-us/azure/aks/use-network-policies

HOTSPOT

You have an Azure Batch project that processes and converts files and stores the files in Azure storage. You are developing a function to start the batch job.

You add the following parameters to the function.

You must ensure that converted files are placed in the container referenced by the outputContainerSasUrl parameter. Files which fail to convert are placed in the container referenced by the failedContainerSasUrl parameter.

You need to ensure the files are correctly processed.

How should you complete the code segment? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Box 1: CreateJob

Box 2: TaskSuccess

TaskSuccess: Upload the file(s) only after the task process exits with an exit code of 0.

Incorrect: TaskCompletion: Upload the file(s) after the task process exits, no matter what the exit code was.

Box 3: TaskFailure

TaskFailure: Upload the file(s) only after the task process exits with a nonzero exit code.

Box 4: OutputFiles

To specify output files for a task, create a collection of OutputFile objects and assign it to the CloudTask.OutputFiles property when you create the task.
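The upload conditions described above can be sketched in plain Python. This models only the decision rule based on the exit code; should_upload and destination_for are hypothetical helper names and are not part of the Batch SDK.

```python
def should_upload(upload_condition: str, exit_code: int) -> bool:
    """Decide whether output files are uploaded for a given task exit code.

    Hypothetical helper that mirrors the three upload conditions.
    """
    condition = upload_condition.lower()
    if condition == "tasksuccess":
        return exit_code == 0      # upload only when the task succeeded
    if condition == "taskfailure":
        return exit_code != 0      # upload only when the task failed
    if condition == "taskcompletion":
        return True                # upload regardless of the exit code
    raise ValueError(f"unknown upload condition: {upload_condition!r}")


def destination_for(exit_code: int, output_url: str, failed_url: str) -> str:
    """Pick the container for a converted file, as the question requires."""
    return output_url if should_upload("TaskSuccess", exit_code) else failed_url


print(destination_for(0, "outputContainerSasUrl", "failedContainerSasUrl"))
print(destination_for(1, "outputContainerSasUrl", "failedContainerSasUrl"))
```

This is why TaskCompletion is the wrong choice for either box: it would send every file to the same container no matter how the conversion ended.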

References:

https://docs.microsoft.com/en-us/dotnet/api/microsoft.azure.batch.protocol.models.outputfileuploadcondition

https://docs.microsoft.com/en-us/azure/batch/batch-task-output-files

DRAG DROP

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles. You need to create compute nodes for the solution on Azure Batch.

What should you do? Put the actions in the correct order.

Explanation:

With the Azure Portal:

Step 1: In the Azure portal, create a Batch account.

First we create a batch account.

Step 2: In the Azure portal, create a pool of compute nodes

Now that you have a Batch account, create a sample pool of Windows compute nodes for test purposes.

Step 3: In the Azure portal, add a Job.

Now that you have a pool, create a job to run on it. A Batch job is a logical group for one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. Initially the job has no tasks.

Step 4: In the Azure portal, create tasks

Now create sample tasks to run in the job. Typically you create multiple tasks that Batch queues and distributes to run on the compute nodes.

References:

https://docs.microsoft.com/en-us/azure/batch/quick-create-portal

DRAG DROP

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles. You need to create compute nodes for the solution on Azure Batch.

What should you do? Put the actions in the correct order.

Explanation:

With .NET:

Step 1: In the Azure portal, create a Batch account.

First we create a batch account.

Step 2: In a .NET method, call the method: BatchClient.PoolOperations.CreatePool

Now that you have a Batch account, create a sample pool of Windows compute nodes for test purposes. To create a Batch pool, the app uses the BatchClient.PoolOperations.CreatePool method to set the number of nodes, VM size, and a pool configuration.

Step 3: In a .NET method, call the method: BatchClient.JobOperations.CreateJob

Now that you have a pool, create a job to run on it. A Batch job is a logical group for one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. Initially the job has no tasks. The app uses the BatchClient.JobOperations.CreateJob method to create a job on your pool.

Step 4: In a .NET method, call the method: batchClient.JobOperations.AddTask

Now create sample tasks to run in the job. Typically you create multiple tasks that Batch queues and distributes to run on the compute nodes. The app adds tasks to the job with the AddTask method, which queues them to run on the compute nodes.

For example: batchClient.JobOperations.AddTask(JobId, tasks);

References:

https://docs.microsoft.com/en-us/azure/batch/quick-create-portal

https://docs.microsoft.com/en-us/azure/batch/quick-run-dotnet

DRAG DROP

You are developing a software solution for an autonomous transportation system. The solution uses large data sets and Azure Batch processing to simulate navigation sets for entire fleets of vehicles. You need to create compute nodes for the solution on Azure Batch.

What should you do? Put the actions in the correct order.

Explanation:

With Python:

Step 1: In the Azure portal, create a Batch account.

First we create a batch account.

Step 2: In Python, implement the class: PoolAddParameter

Now that you have a Batch account, create a sample pool of Windows compute nodes for test purposes. To create a Batch pool, the app uses the PoolAddParameter class to set the number of nodes, VM size, and a pool configuration.

Step 3: In Python, implement the class: JobAddParameter

Now that you have a pool, create a job to run on it. A Batch job is a logical group for one or more tasks. A job includes settings common to the tasks, such as priority and the pool to run tasks on. Initially the job has no tasks. The app uses the JobAddParameter class to create a job on your pool.

Step 4: In Python, implement the class: TaskAddParameter

Now create sample tasks to run in the job. Typically you create multiple tasks that Batch queues and distributes to run on the compute nodes. The app creates a list of task objects using the TaskAddParameter class.
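The ordering of these steps follows from their dependencies: a job needs a pool, and tasks need a job. The sketch below shows that with an in-memory stand-in. FakeBatchAccount and its methods are hypothetical and are not the Azure Batch SDK; in a real app, PoolAddParameter, JobAddParameter, and TaskAddParameter objects would be submitted through the Batch client.

```python
from dataclasses import dataclass, field


@dataclass
class FakeBatchAccount:
    """Hypothetical in-memory stand-in for a Batch account (not the Azure SDK)."""
    pools: set = field(default_factory=set)
    jobs: dict = field(default_factory=dict)    # job id -> pool id
    tasks: dict = field(default_factory=dict)   # job id -> list of task ids

    def add_pool(self, pool_id: str) -> None:
        self.pools.add(pool_id)

    def add_job(self, job_id: str, pool_id: str) -> None:
        # A job must name an existing pool to run on.
        if pool_id not in self.pools:
            raise ValueError(f"pool {pool_id!r} must exist before job {job_id!r}")
        self.jobs[job_id] = pool_id
        self.tasks[job_id] = []                 # a new job starts with no tasks

    def add_tasks(self, job_id: str, task_ids: list) -> None:
        # Tasks can only be queued on an existing job.
        if job_id not in self.jobs:
            raise ValueError(f"job {job_id!r} must exist before adding tasks")
        self.tasks[job_id].extend(task_ids)


account = FakeBatchAccount()                    # Step 1: the account exists
account.add_pool("sim-pool")                    # Step 2: pool of compute nodes
account.add_job("sim-job", "sim-pool")          # Step 3: job bound to the pool
account.add_tasks("sim-job", ["task-0", "task-1"])  # Step 4: tasks in the job
print(account.tasks["sim-job"])
```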

References:

https://docs.microsoft.com/en-us/azure/batch/quick-create-portal

https://docs.microsoft.com/en-us/azure/batch/quick-run-python

Testlet 1

Case Study

Background

You are a developer for Litware Inc., a SaaS company that provides a solution for managing employee expenses. The solution consists of an ASP.NET Core Web API project that is deployed as an Azure Web App.

Overall architecture

Employees upload receipts for the system to process. When processing is complete, the employee receives a summary report email that details the processing results. Employees then use a web application to manage their receipts and perform any additional tasks needed for reimbursement.

Receipt processing

Employees may upload receipts in two ways:

– Uploading using an Azure Files mounted folder

– Uploading using the web application

Data Storage

Receipt and employee information is stored in an Azure SQL database.

Documentation

Employees are provided with a getting started document when they first use the solution. The documentation includes details on supported operating systems for Azure File upload, and instructions on how to configure the mounted folder.

Solution details

Users table

Web Application

You enable MSI for the Web App and configure the Web App to use the security principal name WebAppIdentity.

Processing

Processing is performed by an Azure Function that uses version 2 of the Azure Function runtime. Once processing is completed, results are stored in Azure Blob Storage and an Azure SQL database. Then, an email summary is sent to the user with a link to the processing report. The link to the report must remain valid if the email is forwarded to another user.

Logging

Azure Application Insights is used for telemetry and logging in both the processor and the web application. The processor also has TraceWriter logging enabled. Application Insights must always contain all log messages.

Requirements

Receipt processing

Concurrent processing of a receipt must be prevented.

Disaster recovery

A regional outage must not impact application availability. DR operations must not depend on the application running and must ensure that data in the DR region is up to date.

Security

– Users’ SecurityPin must be stored in such a way that access to the database does not allow the viewing of SecurityPins. The web application is the only system that should have access to SecurityPins.

– All certificates and secrets used to secure data must be stored in Azure Key Vault.

– You must adhere to the principle of least privilege and provide only the privileges that are essential to perform the intended function.

– All access to Azure Storage and Azure SQL database must use the application’s Managed Service Identity (MSI)

– Receipt data must always be encrypted at rest.

– All data must be protected in transit.

– User’s expense account number must be visible only to logged in users. All other views of the expense account number should include only the last segment with the remaining parts obscured.

– In the case of a security breach, access to all summary reports must be revoked without impacting other parts of the system.

Issues

Upload format issue

Employees occasionally report an issue with uploading a receipt using the web application. They report that when they upload a receipt using the Azure File Share, the receipt does not appear in their profile. When this occurs, they delete the file in the file share and use the web application, which returns a 500 Internal Server error page.

Capacity issue

During busy periods, employees report long delays between the time they upload the receipt and when it appears in the web application.

Log capacity issue

Developers report that the number of log messages in the trace output for the processor is too high, resulting in lost log messages.

Processing.cs

Database.cs

ReceiptUploader.cs

ConfigureSSE.ps1

HOTSPOT

You need to configure retries in the LoadUserDetails function in the Database class without impacting user experience.

What code should you insert on line DB07? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Policy

RetryPolicy retry = Policy
    .Handle<HttpRequestException>()
    .Retry(3);

The above example will create a retry policy which will retry up to three times if an action fails with an exception handled by the Policy.

Box 2: WaitAndRetryAsync(3, i => TimeSpan.FromMilliseconds(100 * Math.Pow(2, i - 1)));

A common retry strategy is exponential backoff: this allows for retries to be made initially quickly, but then at progressively longer intervals, to avoid hitting a subsystem with repeated frequent calls if the subsystem may be struggling.

Example:

Policy
    .Handle<SomeExceptionType>()
    .WaitAndRetry(3, retryAttempt =>
        TimeSpan.FromSeconds(Math.Pow(2, retryAttempt)));
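To make the Box 2 schedule concrete, here is a minimal Python sketch of the same exponential backoff formula, 100 * 2^(i-1) milliseconds before retry i. It is not Polly; backoff_delays and call_with_retry are hypothetical names, and the sleep function is injectable so the behavior can be checked without waiting.

```python
import time


def backoff_delays(retries: int, base_ms: float = 100.0) -> list:
    """Delay in milliseconds before retry i (1-based): base_ms * 2 ** (i - 1)."""
    return [base_ms * 2 ** (i - 1) for i in range(1, retries + 1)]


def call_with_retry(action, retries: int = 3, base_ms: float = 100.0,
                    sleep=time.sleep, retry_on=(Exception,)):
    """Run action, retrying up to `retries` times with exponential backoff."""
    for delay_ms in backoff_delays(retries, base_ms):
        try:
            return action()
        except retry_on:
            sleep(delay_ms / 1000.0)   # wait before the next attempt
    return action()                    # final attempt; errors reach the caller


print(backoff_delays(3))  # [100.0, 200.0, 400.0]
```

With three retries the total added wait is at most 700 ms, which is why short, growing delays suit a user-facing call such as LoadUserDetails.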

References:

https://github.com/App-vNext/Polly/wiki/Retry

Testlet 2

Case Study

LabelMaker app

Coho Winery produces, bottles, and distributes a variety of wines globally. You are a developer implementing highly scalable and resilient applications to support online order processing by using Azure solutions.

Coho Winery has a LabelMaker application that prints labels for wine bottles. The application sends data to several printers. The application consists of five modules that run independently on virtual machines (VMs). Coho Winery plans to move the application to Azure and continue to support label creation.

External partners send data to the LabelMaker application to include artwork and text for custom label designs.

Requirements

Data

You identify the following requirements for data management and manipulation:

• Order data is stored as nonrelational JSON and must be queried using Structured Query Language (SQL).

• Changes to the Order data must reflect immediately across all partitions. All reads to the Order data must fetch the most recent writes.

Security

You have the following security requirements:

• Users of Coho Winery applications must be able to provide access to documents, resources, and applications to external partners.

• External partners must use their own credentials and authenticate with their organization’s identity management solution.

• External partner logins must be audited monthly for application use by a user account administrator to maintain company compliance.

• Storage of e-commerce application settings must be maintained in Azure Key Vault.

• E-commerce application sign-ins must be secured by using Azure App Service authentication and Azure Active Directory (AAD).

• Conditional access policies must be applied at the application level to protect company content.

• The LabelMaker application must be secured by using an AAD account that has full access to all namespaces of the Azure Kubernetes Service (AKS) cluster.

LabelMaker app

Azure Monitor Container Health must be used to monitor the performance of workloads that are deployed to Kubernetes environments and hosted on Azure Kubernetes Service (AKS).

You must use Azure Container Registry to publish images that support the AKS deployment.

Architecture

Issues

Calls to the Printer API App fail periodically due to printer communication timeouts.

Printer communication timeouts occur after 10 seconds. The label printer must only receive up to 5 attempts within one minute.

The order workflow fails to run upon initial deployment to Azure.

Order.Json

Relevant portions of the app files are shown below. Line numbers are included for reference only. The JSON file contains a representation of the data for an order that includes a single item.

You need to implement the e-commerce checkout API.

Which three actions should you perform? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Set the function template’s Mode property to Webhook and the Webhook type property to Generic JSON.

- B . Create an Azure Function using the HTTP POST function template.

- C . In the Azure Function App, enable Cross-Origin Resource Sharing (CORS) with all origins permitted.

- D . In the Azure Function App, enable Managed Service Identity (MSI).

- E . Set the function template’s Mode property to Webhook and the Webhook type property to GitHub.

- F . Create an Azure Function using the Generic webhook function template.

ABD

Explanation:

Scenario: E-commerce application sign-ins must be secured by using Azure App Service authentication and Azure Active Directory (AAD).

D: A managed identity from Azure Active Directory allows your app to easily access other AAD-protected resources such as Azure Key Vault.

Incorrect Answers:

C: CORS is an HTTP feature that enables a web application running under one domain to access resources in another domain.

References:

https://docs.microsoft.com/en-us/azure/app-service/overview-managed-identity

DRAG DROP

You need to deploy a new version of the LabelMaker application.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Explanation:

Step 1: Build a new application image by using dockerfile

Step 2: Create an alias of the image with the fully qualified path to the registry

Before you can push the image to a private registry, you must give it a proper name. You can do this with the docker tag command. For demonstration purposes, the following example pulls Docker's hello-world image, renames it, and pushes it to ACR.

# pulls hello-world from the public docker hub

$ docker pull hello-world

# tag the image in order to be able to push it to a private registry

$ docker tag hello-world <REGISTRY_NAME>/hello-world

# push the image

$ docker push <REGISTRY_NAME>/hello-world

Step 3: Log in to the registry and push image

To push images to the newly created ACR instance, you need to log in to ACR from the Docker CLI. Once logged in, you can push any existing Docker image to your ACR instance.
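The tag, log in, and push sequence can be sketched as a small Python helper that only builds the commands and does not run them. The helper names, the registry name cohoregistry, and the image name labelmaker are hypothetical. The sketch assumes the registry uses the default ACR login server form, <registry>.azurecr.io.

```python
def acr_image_ref(registry_name: str, repository: str, tag: str = "latest") -> str:
    """Fully qualified image reference for an Azure Container Registry."""
    return f"{registry_name.lower()}.azurecr.io/{repository}:{tag}"


def push_commands(local_image: str, registry_name: str,
                  repository: str, tag: str = "latest") -> list:
    """Commands, in order, to alias a local image and push it to ACR."""
    target = acr_image_ref(registry_name, repository, tag)
    return [
        ["docker", "tag", local_image, target],           # create the alias
        ["az", "acr", "login", "--name", registry_name],  # log in to the registry
        ["docker", "push", target],                       # push the aliased image
    ]


# Hypothetical names, for illustration only.
for command in push_commands("labelmaker:v2", "cohoregistry", "labelmaker", "v2"):
    print(" ".join(command))
```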

Scenario:

Coho Winery plans to move the application to Azure and continue to support label creation.

LabelMaker app

Azure Monitor Container Health must be used to monitor the performance of workloads that are deployed to Kubernetes environments and hosted on Azure Kubernetes Service (AKS).

You must use Azure Container Registry to publish images that support the AKS deployment.

References:

https://thorsten-hans.com/how-to-use-a-private-azure-container-registry-with-kubernetes-9b86e67b93b6

https://docs.microsoft.com/en-us/azure/container-registry/container-registry-tutorial-quick-task

You need to provision and deploy the order workflow.

Which three components should you include? Each correct answer presents part of the solution. NOTE: Each correct selection is worth one point.

- A . Connections

- B . On-premises Data Gateway

- C . Workflow definition

- D . Resources

- E . Functions

BCE

Explanation:

Scenario: The order workflow fails to run upon initial deployment to Azure.

HOTSPOT

You need to update the order workflow to address the issue when calling the Printer API App.

How should you complete the code? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Fixed

To specify that the action or trigger waits the specified interval before sending the next request, set the <retry-policy-type> to fixed.

Box 2: PT10S

Box 3: 5

Scenario: Calls to the Printer API App fail periodically due to printer communication timeouts. Printer communication timeouts occur after 10 seconds. The label printer must only receive up to 5 attempts within one minute.
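As a rough check of these values, the sketch below assumes the retry policy is written as an object with type, interval, and count fields, and computes the total wait between retries. The iso8601_seconds helper is hypothetical and handles only simple durations such as PT10S.

```python
import json
import re


def iso8601_seconds(duration: str) -> int:
    """Parse a simple ISO 8601 duration such as PT10S or PT1M30S into seconds."""
    match = re.fullmatch(r"PT(?:(\d+)H)?(?:(\d+)M)?(?:(\d+)S)?", duration)
    if not match or not any(match.groups()):
        raise ValueError(f"unsupported duration: {duration!r}")
    hours, minutes, seconds = (int(group or 0) for group in match.groups())
    return hours * 3600 + minutes * 60 + seconds


# Values taken from Box 1 to Box 3; the object shape is an assumption.
retry_policy = {"type": "fixed", "interval": "PT10S", "count": 5}

total_wait = retry_policy["count"] * iso8601_seconds(retry_policy["interval"])
print(json.dumps({"retryPolicy": retry_policy}))
print(total_wait)  # 50 seconds of waiting across the 5 retries
```

A fixed 10-second interval with a count of 5 keeps the waits between retries at 50 seconds, consistent with the limit of 5 attempts within one minute.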

Question Set 3

HOTSPOT

You are creating a CLI script that creates an Azure web app and related services in Azure App Service.

The web app uses the following variables:

You need to automatically deploy code from GitHub to the newly created web app.

How should you complete the script? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: az appservice plan create

The az group create command returns a JSON result on success. You can then use the resource group to create an Azure App Service plan.

Box 2: az webapp create

Create a new web app...

Box 3: --plan $webappname

...with the App Service plan created in Box 1.

Box 4: az webapp deployment

Continuous Delivery with GitHub. Example:

az webapp deployment source config --name firstsamplewebsite1 --resource-group websites --repo-url $gitrepo --branch master --git-token $token

Box 5: --repo-url $gitrepo --branch master --manual-integration

References:

https://medium.com/@satish1v/devops-your-way-to-azure-web-apps-with-azure-cli-206ed4b3e9b1

HOTSPOT

You are developing an Azure Web App. You configure TLS mutual authentication for the web app.

You need to validate the client certificate in the web app. To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Accessing the client certificate from App Service. If you are using ASP.NET and configure your app to use client certificate authentication, the certificate will be available through the HttpRequest.ClientCertificate property. For other application stacks, the client cert will be available in your app through a base64 encoded value in the "X-ARR-ClientCert" request header. Your application can create a certificate from this value and then use it for authentication and authorization purposes in your application.
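For a non-ASP.NET stack, the header handling might look like the following Python sketch. It decodes the base64 value and compares a SHA-1 thumbprint against an allow list; the function names and the placeholder certificate bytes are hypothetical. Full validation (expiry, issuer, chain) needs an X.509 parser, which the standard library does not provide, so it is left out here.

```python
import base64
import hashlib


def certificate_from_header(headers: dict) -> bytes:
    """Decode the client certificate forwarded in X-ARR-ClientCert."""
    encoded = headers.get("X-ARR-ClientCert")
    if not encoded:
        raise ValueError("no client certificate was forwarded")
    return base64.b64decode(encoded)


def thumbprint(cert_bytes: bytes) -> str:
    """SHA-1 thumbprint of the certificate bytes, as uppercase hex."""
    return hashlib.sha1(cert_bytes).hexdigest().upper()


def is_allowed(headers: dict, allowed_thumbprints: set) -> bool:
    """Accept the request only if the certificate thumbprint is on the allow list."""
    try:
        cert_bytes = certificate_from_header(headers)
    except ValueError:
        return False
    return thumbprint(cert_bytes) in allowed_thumbprints


# Placeholder bytes stand in for a real DER-encoded certificate.
sample = b"placeholder-certificate-bytes"
request_headers = {"X-ARR-ClientCert": base64.b64encode(sample).decode("ascii")}
print(is_allowed(request_headers, {thumbprint(sample)}))
```

The dictionary lookup here is case-sensitive; a real web framework usually exposes headers through a case-insensitive mapping.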

References: https://docs.microsoft.com/en-us/azure/app-service/app-service-web-configure-tls-mutual-auth

DRAG DROP

You are developing a .NET Core model-view controller (MVC) application hosted on Azure for a health care system that allows providers access to their information.

You develop the following code:

You define a role named SysAdmin.

You need to ensure that the application meets the following authorization requirements:

– Allow the ProviderAdmin and SysAdmin roles access to the Partner controller regardless of whether the user holds an editor claim of partner.

– Limit access to the Manage action of the controller to users with an editor claim of partner who are also members of the SysAdmin role.

How should you complete the code? To answer, drag the appropriate code segments to the correct locations. Each code segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Allow the ProviderAdmin and SysAdmin roles access to the Partner controller regardless of whether the user holds an editor claim of partner.

Box 2: Limit access to the Manage action of the controller to users with an editor claim of partner who are also members of the SysAdmin role.
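The two requirements can be expressed as plain predicates. The Python sketch below models only the authorization logic, not the ASP.NET Core attributes the answer uses; the User class and function names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    """Hypothetical user model with role names and claim type/value pairs."""
    roles: set = field(default_factory=set)
    claims: dict = field(default_factory=dict)


def can_access_partner_controller(user: User) -> bool:
    # Either role is enough; the editor claim is not consulted.
    return bool(user.roles & {"ProviderAdmin", "SysAdmin"})


def can_manage(user: User) -> bool:
    # Both conditions must hold: SysAdmin role AND editor claim of partner.
    return "SysAdmin" in user.roles and user.claims.get("editor") == "partner"


provider = User(roles={"ProviderAdmin"})
sysadmin_editor = User(roles={"SysAdmin"}, claims={"editor": "partner"})
print(can_access_partner_controller(provider), can_manage(provider))
print(can_access_partner_controller(sysadmin_editor), can_manage(sysadmin_editor))
```

The controller-level rule is an OR across roles, while the action-level rule is an AND of a role and a claim.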

DRAG DROP

You manage several existing Logic Apps. You need to change definitions, add new logic, and optimize these apps on a regular basis.

What should you use? To answer, drag the appropriate tools to the correct functionalities. Each tool may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Enterprise Integration Pack

After you create an integration account that has partners and agreements, you are ready to create a business to business (B2B) workflow for your logic app with the Enterprise Integration Pack.

Box 2: Code View Editor

To work with logic app definitions in JSON, open the Code View editor when working in the Azure portal or in Visual Studio, or copy the definition into any editor that you want.

Box 3: Logic Apps Designer

You can build your logic apps visually with the Logic Apps Designer, which is available in the Azure portal through your browser and in Visual Studio.

References:

https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-enterprise-integration-b2b

https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-author-definitions

https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-overview

You are implementing an Azure API app that uses built-in authentication and authorization functionality. All app actions must be associated with information about the current user. You need to retrieve the information about the current user.

What are two ways to achieve the goal? Each correct answer presents a complete solution. NOTE: Each correct selection is worth one point.

- A . HTTP headers

- B . environment variables

- C . /.auth/me HTTP endpoint

- D . /.auth/login endpoint

AC

Explanation:

A: After App Service Authentication has been configured, users trying to access your API are prompted to sign in with their organizational account that belongs to the same Azure AD as the Azure AD application used to secure the API. After signing in, you are able to access the information about the current user through the HttpContext.Current.User property.

C: While the server code has access to request headers, client code can access GET /.auth/me to get the same access tokens.
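On the server side, reading the user from request headers might look like this Python sketch. It assumes the X-MS-CLIENT-PRINCIPAL-NAME and X-MS-CLIENT-PRINCIPAL-ID headers that App Service authentication adds to authenticated requests; current_user is a hypothetical helper name.

```python
def current_user(headers: dict):
    """Return the signed-in user from App Service auth headers, or None."""
    # Header names are case-insensitive, so normalize before looking them up.
    normalized = {key.lower(): value for key, value in headers.items()}
    name = normalized.get("x-ms-client-principal-name")
    if name is None:
        return None  # request was not authenticated by App Service
    return {"name": name, "id": normalized.get("x-ms-client-principal-id")}


# Hypothetical header values, for illustration only.
print(current_user({
    "X-MS-CLIENT-PRINCIPAL-NAME": "user@contoso.com",
    "X-MS-CLIENT-PRINCIPAL-ID": "00000000-0000-0000-0000-000000000000",
}))
```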

References:

https://docs.microsoft.com/en-us/azure/app-service/app-service-web-tutorial-auth-aad

https://docs.microsoft.com/en-us/sharepoint/dev/spfx/web-parts/guidance/connect-to-api-secured-with-aad

HOTSPOT

You are developing a back-end Azure App Service that scales based on the number of messages contained in a Service Bus queue. A rule already exists to scale up the App Service when the average queue length of unprocessed and valid queue messages is greater than 1000.

You need to add a new rule that will continuously scale down the App Service as long as the scale up condition is not met.

How should you configure the Scale rule? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Service bus queue

You are developing a back-end Azure App Service that scales based on the number of messages contained in a Service Bus queue.

Box 2: ActiveMessageCount

ActiveMessageCount: Messages in the queue or subscription that are in the active state and ready for delivery.

Box 3: Count

Box 4: Less than or equal to

You need to add a new rule that will continuously scale down the App Service as long as the scale up condition is not met.
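The two rules are complements of each other, which the following Python sketch makes explicit. It is a simplified model of the decision, not the autoscale engine; the function name and the instance limits are hypothetical.

```python
def next_instance_count(current: int, avg_active_messages: float,
                        threshold: int = 1000,
                        minimum: int = 1, maximum: int = 10) -> int:
    """Apply the scale-up rule (> threshold) or the scale-down rule (<= threshold)."""
    if avg_active_messages > threshold:
        return min(current + 1, maximum)   # existing scale-up rule
    return max(current - 1, minimum)       # new rule: less than or equal to


print(next_instance_count(3, 1500))  # 4
print(next_instance_count(3, 1000))  # 2
print(next_instance_count(1, 10))    # 1
```

Using "less than or equal to" with the same threshold leaves no gap between the two rules, so the service keeps scaling down whenever the scale-up condition is not met.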

HOTSPOT

A company is developing a Java web app. The web app code is hosted in a GitHub repository located at https://github.com/Contoso/webapp.

The web app must be evaluated before it is moved to production. You must deploy the initial code release to a deployment slot named staging.

You need to create the web app and deploy the code.

How should you complete the commands? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

Box 1: group

# Create a resource group.

az group create --location westeurope --name myResourceGroup

Box 2: appservice plan

# Create an App Service plan in STANDARD tier (minimum required by deployment slots).

az appservice plan create --name $webappname --resource-group myResourceGroup --sku S1

Box 3: webapp

# Create a web app.

az webapp create --name $webappname --resource-group myResourceGroup --plan $webappname

Box 4: webapp deployment slot

# Create a deployment slot with the name "staging".

az webapp deployment slot create --name $webappname --resource-group myResourceGroup --slot staging

Box 5: webapp deployment source

# Deploy sample code to "staging" slot from GitHub.

az webapp deployment source config --name $webappname --resource-group myResourceGroup --slot staging --repo-url $gitrepo --branch master --manual-integration

References:

https://docs.microsoft.com/en-us/azure/app-service/scripts/cli-deploy-staging-environment

DRAG DROP

You have a web app named MainApp. You are developing a triggered App Service background task by using the WebJobs SDK. This task automatically invokes a function in the code whenever any new data is received in a queue.

You need to configure the services.

Which service should you use for each scenario? To answer, drag the appropriate services to the correct scenarios. Each service may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: WebJobs

A WebJob is a simple way to set up a background job, which can process continuously or on a schedule. WebJobs differ from a cloud service in that they give you less fine-grained control over your processing environment, which makes them a truer PaaS offering.

Box 2: Flow

Incorrect Answers:

Azure Logic Apps is a cloud service that helps you schedule, automate, and orchestrate tasks, business processes, and workflows when you need to integrate apps, data, systems, and services across enterprises or organizations. Logic Apps simplifies how you design and build scalable solutions for app integration, data integration, system integration, enterprise application integration (EAI), and business-to-business (B2B) communication, whether in the cloud, on premises, or both.

References:

https://code.msdn.microsoft.com/Processing-Service-Bus-84db27b4

HOTSPOT

A company is developing a mobile app for field service employees using Azure App Service Mobile Apps as the backend.

The company’s network connectivity varies throughout the day. The solution must support offline use and synchronize changes in the background when the app is online.

You need to implement the solution.

How should you complete the code segment? To answer, select the appropriate options in the answer area. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: var todoTable = client.GetSyncTable<TodoItem>()

To setup offline access, when connecting to your mobile service, use the method GetSyncTable instead of GetTable (example):

IMobileServiceSyncTable<TodoItem> todoTable = App.MobileService.GetSyncTable<TodoItem>();

Box 2: await todoTable.PullAsync("allTodoItems", todoTable.CreateQuery());

Your app should now use IMobileServiceSyncTable (instead of IMobileServiceTable) for CRUD operations. This will save changes to the local database and also keep a log of the changes. When the app is ready to synchronize its changes with the Mobile Service, use the methods PushAsync and PullAsync (example):

await App.MobileService.SyncContext.PushAsync();

await todoTable.PullAsync();
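
The push/pull pattern described above can be illustrated with a toy in-memory model. This is not the Mobile Apps SDK: the classes `RemoteTable` and `SyncTable` and their methods are hypothetical stand-ins, written in Python only to show how a local store plus a log of pending changes behaves while the app is offline and when it synchronizes.

```python
class RemoteTable:
    """Hypothetical stand-in for the table hosted by the mobile backend."""

    def __init__(self):
        self.rows = {}


class SyncTable:
    """Toy sync table: writes go to a local store and to a pending-change log."""

    def __init__(self, remote: RemoteTable):
        self.remote = remote
        self.local = {}
        self.pending = []  # log of changes not yet sent to the backend

    def upsert(self, row_id: str, row: str) -> None:
        # Works offline: only the local store and the log are touched.
        self.local[row_id] = row
        self.pending.append((row_id, row))

    def push(self) -> None:
        # Send every logged change to the backend, then clear the log.
        for row_id, row in self.pending:
            self.remote.rows[row_id] = row
        self.pending.clear()

    def pull(self) -> None:
        # Design choice in this sketch: push first so local edits are not lost,
        # then refresh the local store from the backend.
        self.push()
        self.local = dict(self.remote.rows)
```

A change made through `upsert` is visible locally at once but reaches `RemoteTable` only after `push` (or `pull`) runs, which mirrors the offline-first behaviour the explanation describes.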

References:

https://azure.microsoft.com/es-es/blog/offline-sync-for-mobile-services/

A company is developing a solution that allows smart refrigerators to send temperature information to a central location. You have an existing Service Bus.

The solution must receive and store messages until they can be processed. You create an Azure Service Bus instance by providing a name, pricing tier, subscription, resource group, and location.

You need to complete the configuration.

Which Azure CLI or PowerShell command should you run?

A)

B)

C)

D)

- A . Option A

- B . Option B

- C . Option C

- D . Option D

D

Explanation:

A service bus instance has already been created (Step 2 below). Next is step 3, Create a Service Bus queue.

Note:

Steps:

Step 1: # Create a resource group

resourceGroupName="myResourceGroup"

az group create --name $resourceGroupName --location eastus

Step 2: # Create a Service Bus messaging namespace with a unique name

namespaceName=myNameSpace$RANDOM

az servicebus namespace create --resource-group $resourceGroupName --name $namespaceName --location eastus

Step 3: # Create a Service Bus queue

az servicebus queue create --resource-group $resourceGroupName --namespace-name $namespaceName --name BasicQueue

Step 4: # Get the connection string for the namespace

connectionString=$(az servicebus namespace authorization-rule keys list --resource-group $resourceGroupName --namespace-name $namespaceName --name RootManageSharedAccessKey --query primaryConnectionString --output tsv)

References:

https://docs.microsoft.com/en-us/azure/service-bus-messaging/service-bus-quickstart-cli

You are a developer for a SaaS company that offers many web services.

All web services for the company must meet the following requirements:

– Use API Management to access the services

– Use OpenID Connect for authentication.

– Prevent anonymous usage

A recent security audit found that several web services can be called without any authentication.

Which API Management policy should you implement?

- A . validate-jwt

- B . jsonp

- C . authentication-certificate

- D . check-header

A

Explanation:

Add the validate-jwt policy to validate the OAuth token for every incoming request.
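
To make the effect of such a check concrete, here is a simplified Python model of what a token-validation step does at the gateway: requests with a missing, malformed, or expired bearer token are rejected with 401. It is a sketch under stated assumptions, not the API Management implementation. The function `check_request` is hypothetical, and it deliberately does NOT verify the token signature, which the real validate-jwt policy does using the keys published by the identity provider.

```python
import base64
import json
import time
from typing import Optional


def _decode_segment(segment: str) -> object:
    """Decode one base64url-encoded JWT segment into a Python object."""
    padded = segment + "=" * (-len(segment) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def check_request(headers: dict, now: Optional[float] = None) -> int:
    """Return 200 if the request carries an unexpired bearer token, else 401.

    WARNING: illustration only. The signature is not verified here.
    """
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme.lower() != "bearer" or token.count(".") != 2:
        return 401  # anonymous or malformed request
    try:
        claims = _decode_segment(token.split(".")[1])
    except ValueError:  # covers base64, UTF-8, and JSON decoding errors
        return 401
    if not isinstance(claims, dict):
        return 401
    exp = claims.get("exp")
    current = time.time() if now is None else now
    if not isinstance(exp, (int, float)) or exp <= current:
        return 401  # missing or expired "exp" claim
    return 200
```

Because the check runs before the request is forwarded, a backend that forgot to enforce authentication is still protected from anonymous calls.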

Incorrect Answers:

B: The jsonp policy adds JSON with padding (JSONP) support to an operation or an API to allow cross-domain calls from JavaScript browser-based clients. JSONP is a method used in JavaScript programs to request data from a server in a different domain. JSONP bypasses the limitation enforced by most web browsers where access to web pages must be in the same domain.


References: https://docs.microsoft.com/en-us/azure/api-management/api-management-howto-protectbackend-with-aad

DRAG DROP

A company backs up all manufacturing data to Azure Blob Storage. Admins move blobs from hot storage to archive tier storage every month.

You must automatically move blobs to Archive tier after they have not been accessed for 180 days. The path for any item that is not archived must be placed in an existing queue. This operation must be performed automatically once a month. You set the value of TierAgeInDays to 180.

How should you configure the Logic App? To answer, drag the appropriate triggers or action blocks to the correct trigger or action slots. Each trigger or action block may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content. NOTE: Each correct selection is worth one point.

Explanation:

Box 1: Recurrence

This operation must be performed automatically once a month.

Box 2: Condition

Move blobs to Archive tier after they have not been accessed for 180 days.

Box 3 (if true): Tier Blob

Move blobs to Archive tier after they have not been accessed for 180 days.

Box 4: Put a message in a queue

The path for any item that is not archived must be placed in an existing queue.
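
The control flow of the four boxes can be sketched in Python as follows. This is only a model of the workflow logic, not a Logic App definition: the `Blob` class, the `run_monthly_pass` function, and the list used as a queue are hypothetical. Only the 180-day value (TierAgeInDays) comes from the scenario, and the recurrence trigger is represented simply by calling the function once a month.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

TIER_AGE_IN_DAYS = 180  # from the scenario


@dataclass
class Blob:
    path: str
    last_accessed: datetime
    tier: str = "Hot"


def run_monthly_pass(blobs: list, queue: list, now: datetime) -> None:
    """One run of the workflow (the Recurrence trigger would call this monthly)."""
    cutoff = now - timedelta(days=TIER_AGE_IN_DAYS)
    for blob in blobs:
        # Condition: has the blob gone unaccessed for at least TierAgeInDays?
        if blob.last_accessed <= cutoff:
            blob.tier = "Archive"    # if true: Tier Blob
        else:
            queue.append(blob.path)  # if false: put the path in the queue
```

For example, a blob last accessed 200 days ago is moved to the Archive tier, while one accessed 10 days ago keeps its tier and has its path added to the queue.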

References: https://docs.microsoft.com/en-us/azure/logic-apps/logic-apps-perform-data-operations

You develop a website. You plan to host the website in Azure. You expect the website to experience high traffic volumes after it is published.

You must ensure that the website remains available and responsive while minimizing cost.

You need to deploy the website.

What should you do?

- A . Deploy the website to a virtual machine. Configure the virtual machine to automatically scale when the CPU load is high.

- B . Deploy the website to an App Service that uses the Shared service tier. Configure the App service plan to automatically scale when the CPU load is high.

- C . Deploy the website to an App Service that uses the Standard service tier. Configure the App service plan to automatically scale when the CPU load is high.

- D . Deploy the website to a virtual machine. Configure a Scale Set to increase the virtual machine instance count when the CPU load is high.

C

Explanation:

Windows Azure Web Sites (WAWS) offers 3 modes: Standard, Free, and Shared.

Standard mode carries an enterprise-grade SLA (Service Level Agreement) of 99.9% monthly, even for sites with just one instance. Standard mode runs on dedicated instances, making it different from the other ways to buy Windows Azure Web Sites.

Incorrect Answers:

B: Shared and Free modes do not offer the scaling flexibility of Standard, and they have some important limits. Shared mode, as the name states, uses shared compute resources and has a CPU limit. Because of these limits, neither Free nor Shared is likely to be the best choice for a production environment.

HOTSPOT

A company is developing a Node.js web app. The web app code is hosted in a GitHub repository located at https://github.com/TailSpinToys/webapp.

The web app must be reviewed before it is moved to production. You must deploy the initial code release to a deployment slot named review.

You need to create the web app and deploy the code.

How should you complete the commands? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Explanation:

The New-AzResourceGroup cmdlet creates an Azure resource group.

The New-AzAppServicePlan cmdlet creates an Azure App Service plan in a given location.

The New-AzWebApp cmdlet creates an Azure Web App in a given resource group.

The New-AzWebAppSlot cmdlet creates an Azure Web App slot.

References:

https://docs.microsoft.com/en-us/powershell/module/az.resources/new-azresourcegroup?view=azps-2.3.2

https://docs.microsoft.com/en-us/powershell/module/az.websites/new-azappserviceplan?view=azps-2.3.2

https://docs.microsoft.com/en-us/powershell/module/az.websites/new-azwebapp?view=azps-2.3.2

https://docs.microsoft.com/en-us/powershell/module/az.websites/new-azwebappslot?view=azps-2.3.2

HOTSPOT

You are implementing a software as a service (SaaS) ASP.NET Core web service that will run as an Azure Web App. The web service will use an on-premises SQL Server database for storage. The web service also includes a WebJob that processes data updates.

Four customers will use the web service.

– Each instance of the WebJob processes data for a single customer and must run as a singleton instance.