If the upstream system is known to occasionally produce duplicate entries for a single order hours apart, which statement is correct?

An upstream source writes Parquet data as hourly batches to directories named with the current date.

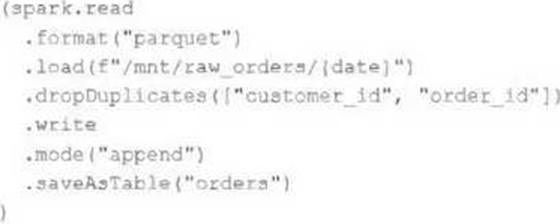

A nightly batch job runs the following code to ingest all data from the previous day as indicated by the date variable:

Assume that the fields customer_id and order_id serve as a composite key to uniquely identify each order.

If the upstream system is known to occasionally produce duplicate entries for a single order hours apart, which statement is correct?

A. Each write to the orders table will only contain unique records, and only those records without duplicates in the target table will be written.

B. Each write to the orders table will only contain unique records, but newly written records may have duplicates already present in the target table.

C. Each write to the orders table will only contain unique records; if existing records with the same key are present in the target table, these records will be overwritten.

D. Each write to the orders table will only contain unique records; if existing records with the same key are present in the target table, the operation will fail.

E. Each write to the orders table will run deduplication over the union of new and existing records, ensuring no duplicate records are present.

Answer: B

Explanation:

B is correct because the code calls dropDuplicates on the data read for the previous day before writing to the orders table, so each write contains only one record per customer_id and order_id. dropDuplicates only sees the DataFrame it is called on. It does not compare against earlier batches or against rows already in the target table, so a duplicate that lands in a different day's directory (for example, one emitted shortly before midnight and again shortly after) is written a second time. To avoid this, a better approach is to store the table in Delta Lake and perform an upsert with MERGE INTO (DeltaTable.merge in the Python API), matching on customer_id and order_id.
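The difference between per-batch deduplication and a key-based merge can be sketched in plain Python. This is a simplified model of the semantics, not Spark or Delta Lake code: the helper names (`drop_duplicates`, `append`, `insert_only_merge`) and the sample rows are hypothetical, and tables are modeled as lists of dictionaries.

```python
from typing import Dict, Iterable, List, Tuple

Order = Dict[str, int]
Key = Tuple[int, int]


def order_key(row: Order) -> Key:
    """Composite key (customer_id, order_id) that identifies one order."""
    return (row["customer_id"], row["order_id"])


def drop_duplicates(batch: Iterable[Order]) -> List[Order]:
    """Keep the first row seen for each key, within this batch only."""
    seen = set()
    unique = []
    for row in batch:
        key = order_key(row)
        if key not in seen:
            seen.add(key)
            unique.append(row)
    return unique


def append(target: List[Order], batch: Iterable[Order]) -> None:
    """Model of dedupe-then-append: the target table is never consulted."""
    target.extend(drop_duplicates(batch))


def insert_only_merge(target: List[Order], batch: Iterable[Order]) -> None:
    """Model of an insert-only merge: skip keys already present in the target."""
    existing = {order_key(row) for row in target}
    for row in drop_duplicates(batch):
        if order_key(row) not in existing:
            target.append(row)


def count_duplicate_keys(table: List[Order]) -> int:
    """Number of rows whose key already appears earlier in the table."""
    keys = [order_key(row) for row in table]
    return len(keys) - len(set(keys))


# Hypothetical data: one duplicate inside day 1, one that straddles midnight.
day1 = [
    {"customer_id": 1, "order_id": 100, "hour": 9},
    {"customer_id": 1, "order_id": 100, "hour": 14},
    {"customer_id": 2, "order_id": 200, "hour": 23},
]
day2 = [
    {"customer_id": 2, "order_id": 200, "hour": 1},
    {"customer_id": 3, "order_id": 300, "hour": 8},
]

appended: List[Order] = []
append(appended, day1)
append(appended, day2)

merged: List[Order] = []
insert_only_merge(merged, day1)
insert_only_merge(merged, day2)

print(len(appended), count_duplicate_keys(appended))
print(len(merged), count_duplicate_keys(merged))
```

In this model the appended table ends up with a repeated key for the order that appears on both days, while the merged table holds one row per key. This mirrors why option B describes the append-based job.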

Verified Reference: [Databricks Certified Data Engineer Professional], under “Delta Lake” section; Databricks Documentation, under “DROP DUPLICATES” section.
