How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?

Your team is working on an NLP research project to predict political affiliation of authors based on articles they have written.

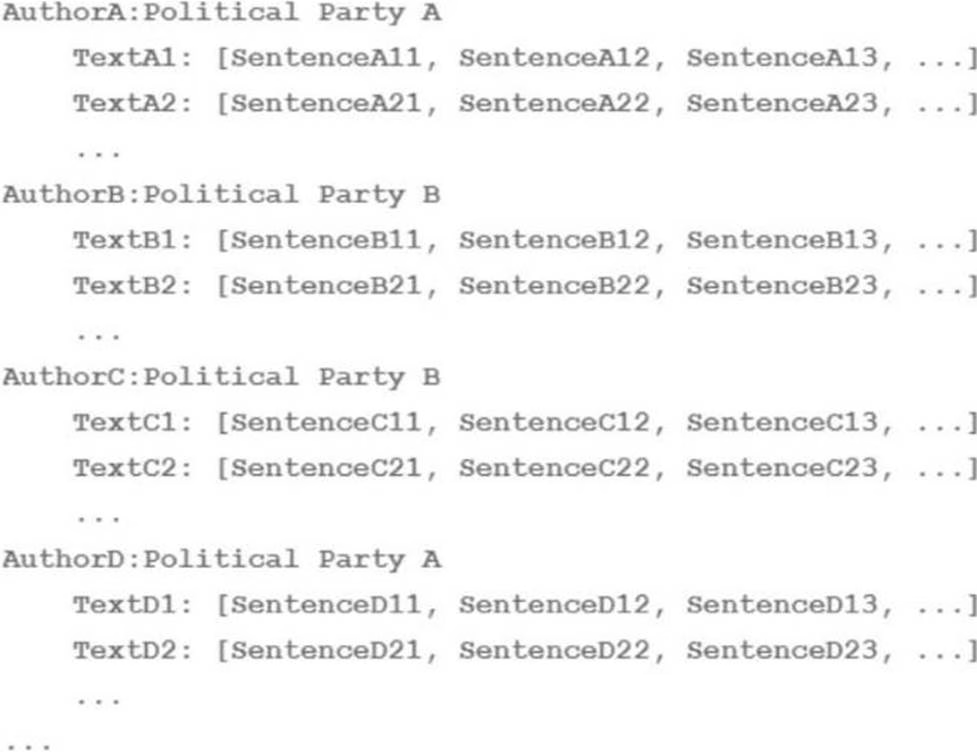

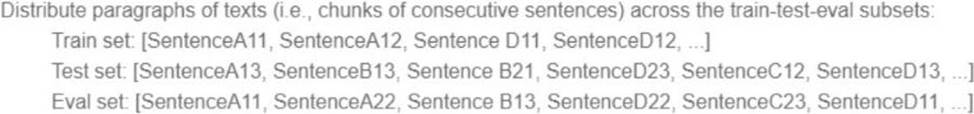

You have a large training dataset that is structured like this:

You followed the standard 80%-10%-10% data distribution across the training, testing, and evaluation subsets.

How should you distribute the training examples across the train-test-eval subsets while maintaining the 80-10-10 proportion?

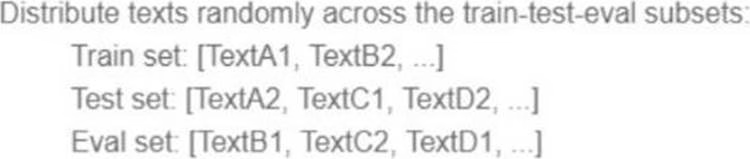

A)

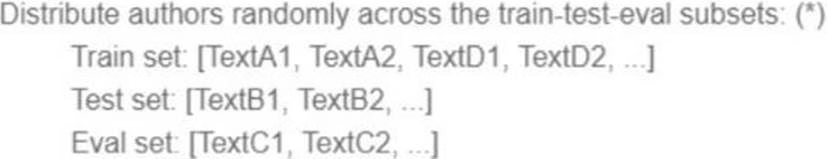

B)

C)

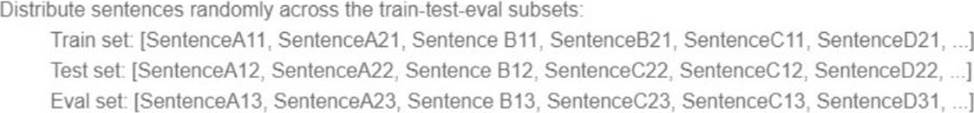

D)

A. Option A

B. Option B

C. Option C

D. Option D

Answer: B

Explanation:

If texts, paragraphs, or sentences are assigned at random to the training, validation, and test sets, the same author's writing ends up in every subset. The model can then learn the specific qualities of an author's use of language, beyond the content of any single article, and it recognizes those authors again at test time instead of learning what signals political affiliation. If the data is instead divided at the author level, so that a given author appears only in the training data, only in the test data, or only in the validation data, the model finds it harder to reach high accuracy on the test set. That lower score is the correct and more meaningful one, because the model has to predict affiliation for authors it has never seen rather than rely on a mix of articles from authors it already knows. https://developers.google.com/machine-learning/crash-course/18th-century-literature
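A minimal sketch of an author-level split, using only the Python standard library. The `(author, text)` layout, the function name `split_by_author`, and the toy data are assumptions made for illustration and are not part of the question. Because whole authors are assigned to a subset, the 80-10-10 proportion holds over authors and only approximately over individual examples.

```python
import random
from collections import defaultdict


def split_by_author(examples, seed=0, fractions=(0.8, 0.1, 0.1)):
    """Split (author, text) pairs so that no author spans two subsets.

    The input layout and the fraction order (train, test, eval) are assumptions.
    """
    by_author = defaultdict(list)
    for author, text in examples:
        by_author[author].append((author, text))

    # Sort before shuffling so the result depends only on the seed.
    authors = sorted(by_author)
    random.Random(seed).shuffle(authors)

    n_train = int(len(authors) * fractions[0])
    n_test = int(len(authors) * fractions[1])
    groups = {
        "train": authors[:n_train],
        "test": authors[n_train:n_train + n_test],
        "eval": authors[n_train + n_test:],  # remaining authors
    }
    # Every article of an author follows that author into one subset.
    return {
        name: [ex for author in group for ex in by_author[author]]
        for name, group in groups.items()
    }


# Toy data: 10 hypothetical authors with 3 articles each.
examples = [(f"author_{i}", f"article {j}") for i in range(10) for j in range(3)]
splits = split_by_author(examples)
```

With equally prolific authors, as in the toy data, the example counts also follow 80-10-10. When authors differ widely in output, the subset sizes drift from that ratio and may need rebalancing by choosing which authors go where.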

For example, suppose you are training a model with purchase data from a number of stores. You know, however, that the model will be used primarily to make predictions for stores that are not in the training data. To ensure that the model can generalize to unseen stores, you should segregate your data sets by stores. In other words, your test set should include only stores different from the evaluation set, and the evaluation set should include only stores different from the training set. https://cloud.google.com/automl-tables/docs/prepare#ml-use
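The store rule above can be checked mechanically before training. The sketch below is one way to do it under assumed names: `shared_groups`, the `store` field, and the sample rows are hypothetical and only illustrate the check.

```python
def shared_groups(splits, key):
    """Return the groups (e.g. store IDs) that appear in more than one subset."""
    first_seen = {}  # group -> name of the first subset that contained it
    leaked = set()
    for name, rows in splits.items():
        for group in {key(row) for row in rows}:
            if group in first_seen:
                leaked.add(group)  # already present in an earlier subset
            else:
                first_seen[group] = name
    return leaked


# Hypothetical purchase rows; store "s1" appears in both train and eval.
store_splits = {
    "train": [{"store": "s1", "amount": 10}, {"store": "s2", "amount": 7}],
    "test": [{"store": "s3", "amount": 4}],
    "eval": [{"store": "s1", "amount": 9}],
}
leaked = shared_groups(store_splits, key=lambda row: row["store"])
```

An empty result means the subsets are segregated by store. Here `leaked` contains `"s1"`, which signals that the split would overstate how well the model generalizes to unseen stores.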
