How precise is the classifier?

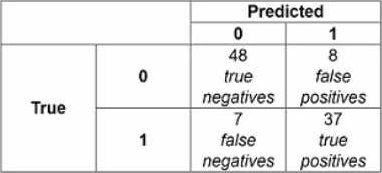

The confusion matrix above was produced when a classifier was used to predict labels on a test dataset.

How precise is the classifier?

A. 48/(48+37)

B. 37/(37+8)

C. 37/(37+7)

D. (48+37)/100

Answer: B

Explanation:

Precision measures how well a classifier avoids false positives (negative cases incorrectly predicted as positive). It is the number of true positives (correctly predicted positive cases) divided by the total number of predicted positive cases (true positives plus false positives). In this confusion matrix there are 37 true positives and 8 false positives, so precision = 37/(37+8) = 37/45 ≈ 0.822.
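The same arithmetic can be sketched in a few lines of Python. This is a minimal illustration that assumes only the two counts quoted in the explanation (37 true positives, 8 false positives); the function name `precision` is hypothetical and not part of any exam tooling or library.

```python
def precision(tp: int, fp: int) -> float:
    """Return TP / (TP + FP): the fraction of positive predictions that are correct."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        # No positive predictions at all, so precision is undefined;
        # this sketch returns 0.0 as a simple convention (an assumption).
        return 0.0
    return tp / predicted_positive


# Counts taken from the explanation: 37 true positives, 8 false positives.
TP, FP = 37, 8
p = precision(TP, FP)
print(f"precision = {TP}/({TP}+{FP}) = {p:.3f}")  # prints "precision = 37/(37+8) = 0.822"
```

Only the predicted-positive column (TP and FP) enters the calculation; the other cells of the confusion matrix do not affect precision.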
