Google Professional Machine Learning Engineer Online Training

The questions for Professional Machine Learning Engineer were last updated on Feb 14, 2026.

- Exam Code: Professional Machine Learning Engineer

- Exam Name: Google Professional Machine Learning Engineer

- Certification Provider: Google

- Latest update: Feb 14, 2026

Your team trained and tested a DNN regression model with good results. Six months after deployment, the model is performing poorly due to a change in the distribution of the input data.

How should you address the input differences in production?

- A . Create alerts to monitor for skew, and retrain the model.

- B . Perform feature selection on the model, and retrain the model with fewer features

- C . Retrain the model, and select an L2 regularization parameter with a hyperparameter tuning service

- D . Perform feature selection on the model, and retrain the model on a monthly basis with fewer features
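
The skew monitoring mentioned in option A boils down to comparing the distribution a feature had at training time with the distribution it has in production. As a minimal sketch, assuming a single numerical feature bucketed into fixed, hypothetical bin edges, the shift can be quantified with the Jensen-Shannon divergence between the two histograms, and an alert raised when it crosses a threshold you choose. The function names and the 0.1 threshold are illustrative only and are not part of any Google Cloud API.

```python
import bisect
import math


def histogram(values, edges):
    """Return bin proportions; out-of-range values are clamped into the outer bins."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        # bisect_right returns the index of the first edge greater than v
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), n_bins - 1)  # clamp values below/above the edges
        counts[idx] += 1
    total = sum(counts)
    return [c / total for c in counts]


def js_divergence(p, q):
    """Jensen-Shannon divergence with log base 2, so the result lies in [0, 1]."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]

    def kl(a, b):
        # terms with a_i == 0 contribute nothing; b_i > 0 whenever a_i > 0 here
        return sum(ai * math.log2(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    return 0.5 * kl(p, m) + 0.5 * kl(q, m)


def skew_alert(train_values, serving_values, edges, threshold=0.1):
    """True when serving data has drifted past the (hypothetical) threshold."""
    p = histogram(train_values, edges)
    q = histogram(serving_values, edges)
    return js_divergence(p, q) > threshold
```

In a real deployment the alert would typically kick off the retraining step rather than just return a flag; the threshold is a tuning decision, not a fixed constant.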

You manage a team of data scientists who use a cloud-based backend system to submit training jobs.

This system has become very difficult to administer, and you want to use a managed service instead.

The data scientists you work with use many different frameworks, including Keras, PyTorch, Theano, scikit-learn, and custom libraries.

What should you do?

- A . Use the AI Platform custom containers feature to receive training jobs using any framework

- B . Configure Kubeflow to run on Google Kubernetes Engine and receive training jobs through TFJob

- C . Create a library of VM images on Compute Engine; and publish these images on a centralized repository

- D . Set up Slurm workload manager to receive jobs that can be scheduled to run on your cloud infrastructure.

You are developing a Kubeflow pipeline on Google Kubernetes Engine. The first step in the pipeline is to issue a query against BigQuery. You plan to use the results of that query as the input to the next step in your pipeline. You want to achieve this in the easiest way possible.

What should you do?

- A . Use the BigQuery console to execute your query and then save the query results into a new BigQuery table.

- B . Write a Python script that uses the BigQuery API to execute queries against BigQuery. Execute this script as the first step in your Kubeflow pipeline

- C . Use the Kubeflow Pipelines domain-specific language to create a custom component that uses the Python BigQuery client library to execute queries

- D . Locate the Kubeflow Pipelines repository on GitHub. Find the BigQuery Query Component, copy that component’s URL, and use it to load the component into your pipeline. Use the component to execute queries against BigQuery

You are developing ML models with AI Platform for image segmentation on CT scans. You frequently update your model architectures based on the newest available research papers, and have to rerun training on the same dataset to benchmark their performance. You want to minimize computation costs and manual intervention while having version control for your code.

What should you do?

- A . Use Cloud Functions to identify changes to your code in Cloud Storage and trigger a retraining job

- B . Use the gcloud command-line tool to submit training jobs on AI Platform when you update your code

- C . Use Cloud Build linked with Cloud Source Repositories to trigger retraining when new code is pushed to the repository

- D . Create an automated workflow in Cloud Composer that runs daily and looks for changes in code in Cloud Storage using a sensor.

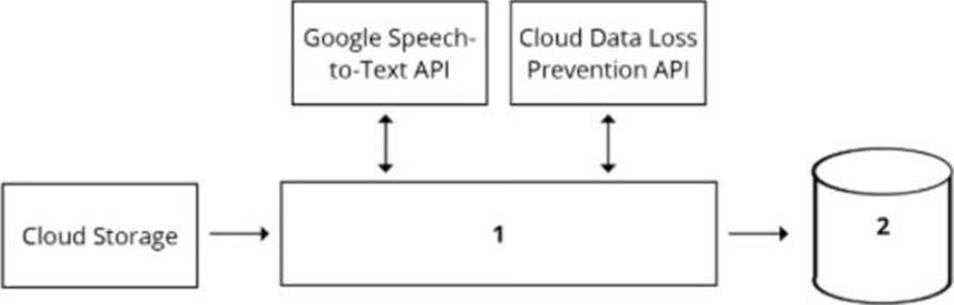

Your organization’s call center has asked you to develop a model that analyzes customer sentiment in each call. The call center receives over one million calls daily, and the data is stored in Cloud Storage. The data collected must not leave the region in which the call originated, and no Personally Identifiable Information (PII) can be stored or analyzed. The data science team has a third-party tool for visualization and access that requires an ANSI SQL:2011-compliant interface. You need to select components for data processing and for analytics.

How should the data pipeline be designed?

- A . 1 Dataflow, 2 BigQuery

- B . 1 Pub/Sub, 2 Datastore

- C . 1 Dataflow, 2 Cloud SQL

- D . 1 Cloud Function, 2 Cloud SQL
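
Whichever components are chosen, the requirement that no PII be stored or analyzed means transcripts have to be de-identified in the processing stage, before anything reaches the analytics store. As a minimal sketch, assuming plain-text transcripts and two hypothetical regular expressions standing in for the info-type detectors of the Cloud DLP API, a processing step could replace each match with a placeholder:

```python
import re

# Hypothetical patterns for illustration only; a production pipeline would
# call the Cloud DLP API rather than rely on hand-written regular expressions.
PII_PATTERNS = {
    "EMAIL_ADDRESS": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE_NUMBER": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def redact(transcript: str) -> str:
    """Replace each PII match with its info-type placeholder, e.g. [EMAIL_ADDRESS]."""
    for info_type, pattern in PII_PATTERNS.items():
        transcript = pattern.sub(f"[{info_type}]", transcript)
    return transcript
```

For example, `redact("Call me at 555-123-4567 or mail jane.doe@example.com")` yields `"Call me at [PHONE_NUMBER] or mail [EMAIL_ADDRESS]"`. Keeping the info-type name in the placeholder preserves some context for sentiment analysis without retaining the value itself.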

You work for an online retail company that is creating a visual search engine. You have set up an end-to-end ML pipeline on Google Cloud to classify whether an image contains your company’s product. Expecting the release of new products in the near future, you configured a retraining functionality in the pipeline so that new data can be fed into your ML models. You also want to use AI Platform’s continuous evaluation service to ensure that the models have high accuracy on your test dataset.

What should you do?

- A . Keep the original test dataset unchanged even if newer products are incorporated into retraining

- B . Extend your test dataset with images of the newer products when they are introduced to retraining

- C . Replace your test dataset with images of the newer products when they are introduced to retraining.

- D . Update your test dataset with images of the newer products when your evaluation metrics drop below a pre-decided threshold.

You are responsible for building a unified analytics environment across a variety of on-premises data marts. Your company is experiencing data quality and security challenges when integrating data across the servers, caused by the use of a wide range of disconnected tools and temporary solutions. You need a fully managed, cloud-native data integration service that will lower the total cost of work and reduce repetitive work. Some members of your team prefer a codeless interface for building Extract, Transform, Load (ETL) processes.

Which service should you use?

- A . Dataflow

- B . Dataprep

- C . Apache Flink

- D . Cloud Data Fusion

You want to rebuild your ML pipeline for structured data on Google Cloud. You are using PySpark to conduct data transformations at scale, but your pipelines are taking over 12 hours to run. To speed up development and pipeline run time, you want to use a serverless tool and SQL syntax. You have already moved your raw data into Cloud Storage.

How should you build the pipeline on Google Cloud while meeting the speed and processing requirements?

- A . Use Data Fusion’s GUI to build the transformation pipelines, and then write the data into BigQuery

- B . Convert your PySpark into SparkSQL queries to transform the data and then run your pipeline on Dataproc to write the data into BigQuery.

- C . Ingest your data into Cloud SQL, convert your PySpark commands into SQL queries to transform the data, and then use federated queries from BigQuery for machine learning

- D . Ingest your data into BigQuery using BigQuery Load, convert your PySpark commands into BigQuery SQL queries to transform the data, and then write the transformations to a new table

You are building a real-time prediction engine that streams files that may contain Personally Identifiable Information (PII) to Google Cloud. You want to use the Cloud Data Loss Prevention (DLP) API to scan the files.

How should you ensure that the PII is not accessible by unauthorized individuals?

- A . Stream all files to Google Cloud, and then write the data to BigQuery. Periodically conduct a bulk scan of the table using the DLP API.

- B . Stream all files to Google Cloud, and write batches of the data to BigQuery. While the data is being written to BigQuery, conduct a bulk scan of the data using the DLP API.

- C . Create two buckets of data: Sensitive and Non-sensitive. Write all data to the Non-sensitive bucket. Periodically conduct a bulk scan of that bucket using the DLP API, and move the sensitive data to the Sensitive bucket.

- D . Create three buckets of data: Quarantine, Sensitive, and Non-sensitive. Write all data to the Quarantine bucket. Periodically conduct a bulk scan of that bucket using the DLP API, and move the data to either the Sensitive or Non-sensitive bucket.
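
The three-bucket quarantine pattern described in option D keeps newly arriving files in a restricted staging area until each one has been classified, so unscanned data never lands in a broadly readable location. A minimal in-memory sketch, assuming plain dictionaries stand in for Cloud Storage buckets and a hypothetical regex check stands in for a DLP inspection call:

```python
import re
from typing import Callable, Dict

# Object name -> object contents; an in-memory stand-in for a Cloud Storage bucket.
Bucket = Dict[str, str]


def scan_quarantine(quarantine: Bucket, sensitive: Bucket, non_sensitive: Bucket,
                    contains_pii: Callable[[str], bool]) -> None:
    """One periodic scan: classify every quarantined object and move it out."""
    for name in list(quarantine):  # copy the keys because we delete while iterating
        contents = quarantine.pop(name)
        target = sensitive if contains_pii(contents) else non_sensitive
        target[name] = contents


def naive_pii_check(text: str) -> bool:
    """Hypothetical detector: flags anything shaped like a US Social Security number."""
    return re.search(r"\b\d{3}-\d{2}-\d{4}\b", text) is not None
```

In practice, access to the Quarantine and Sensitive buckets would be restricted with IAM, and only the Non-sensitive bucket would be opened to wider use.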

You are designing an ML recommendation model for shoppers on your company’s ecommerce website. You will use Recommendations AI to build, test, and deploy your system.

How should you develop recommendations that increase revenue while following best practices?

- A . Use the "Other Products You May Like" recommendation type to increase the click-through rate

- B . Use the "Frequently Bought Together" recommendation type to increase the shopping cart size for each order.

- C . Import your user events and then your product catalog to make sure you have the highest quality event stream

- D . Because it will take time to collect and record product data, use placeholder values for the product catalog to test the viability of the model.
