Google Professional Machine Learning Engineer Google Professional Machine Learning Engineer Online Training

Google Professional Machine Learning Engineer Online Training

The questions for Professional Machine Learning Engineer were last updated at Feb 14,2026.

- Exam Code: Professional Machine Learning Engineer

- Exam Name: Google Professional Machine Learning Engineer

- Certification Provider: Google

- Latest update: Feb 14,2026

You are building an ML model to detect anomalies in real-time sensor data. You will use Pub/Sub to handle incoming requests. You want to store the results for analytics and visualization.

How should you configure the pipeline?

- A . 1 Dataflow, 2 – Al Platform, 3 BigQuery

- B . 1 DataProc, 2 AutoML, 3 Cloud Bigtable

- C . 1 BigQuery, 2 AutoML, 3 Cloud Functions

- D . 1 BigQuery, 2 Al Platform, 3 Cloud Storage

You have a functioning end-to-end ML pipeline that involves tuning the hyperparameters of your ML model using Al Platform, and then using the best-tuned parameters for training. Hypertuning is taking longer than expected and is delaying the downstream processes. You want to speed up the tuning job without significantly compromising its effectiveness.

Which actions should you take? Choose 2 answers

- A . Decrease the number of parallel trials

- B . Decrease the range of floating-point values

- C . Set the early stopping parameter to TRUE

- D . Change the search algorithm from Bayesian search to random search.

- E . Decrease the maximum number of trials during subsequent training phases.

You have written unit tests for a Kubeflow Pipeline that require custom libraries. You want to automate the execution of unit tests with each new push to your development branch in Cloud Source Repositories.

What should you do?

- A . Write a script that sequentially performs the push to your development branch and executes the unit tests on Cloud Run

- B . Using Cloud Build, set an automated trigger to execute the unit tests when changes are pushed to your development branch.

- C . Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories Configure a Pub/Sub trigger for Cloud Run, and execute the unit tests on Cloud Run.

- D . Set up a Cloud Logging sink to a Pub/Sub topic that captures interactions with Cloud Source Repositories. Execute the unit tests using a Cloud Function that is triggered when messages are sent to the Pub/Sub topic

You have trained a deep neural network model on Google Cloud. The model has low loss on the training data, but is performing worse on the validation data. You want the model to be resilient to overfitting.

Which strategy should you use when retraining the model?

- A . Apply a dropout parameter of 0 2, and decrease the learning rate by a factor of 10

- B . Apply a L2 regularization parameter of 0.4, and decrease the learning rate by a factor of 10.

- C . Run a hyperparameter tuning job on Al Platform to optimize for the L2 regularization and dropout parameters

- D . Run a hyperparameter tuning job on Al Platform to optimize for the learning rate, and increase the number of neurons by a factor of 2.

You are training a Resnet model on Al Platform using TPUs to visually categorize types of defects in automobile engines. You capture the training profile using the Cloud TPU profiler plugin and observe that it is highly input-bound. You want to reduce the bottleneck and speed up your model training process.

Which modifications should you make to the tf .data dataset? Choose 2 answers

- A . Use the interleave option for reading data

- B . Reduce the value of the repeat parameter

- C . Increase the buffer size for the shuffle option.

- D . Set the prefetch option equal to the training batch size

- E . Decrease the batch size argument in your transformation

You work for a public transportation company and need to build a model to estimate delay times for multiple transportation routes. Predictions are served directly to users in an app in real time. Because different seasons and population increases impact the data relevance, you will retrain the model every month. You want to follow Google-recommended best practices.

How should you configure the end-to-end architecture of the predictive model?

- A . Configure Kubeflow Pipelines to schedule your multi-step workflow from training to deploying your model.

- B . Use a model trained and deployed on BigQuery ML and trigger retraining with the scheduled query feature in BigQuery

- C . Write a Cloud Functions script that launches a training and deploying job on Ai Platform that is triggered by Cloud Scheduler

- D . Use Cloud Composer to programmatically schedule a Dataflow job that executes the workflow from training to deploying your model

You are an ML engineer at a global shoe store. You manage the ML models for the company’s website. You are asked to build a model that will recommend new products to the user based on their purchase behavior and similarity with other users.

What should you do?

- A . Build a classification model

- B . Build a knowledge-based filtering model

- C . Build a collaborative-based filtering model

- D . Build a regression model using the features as predictors

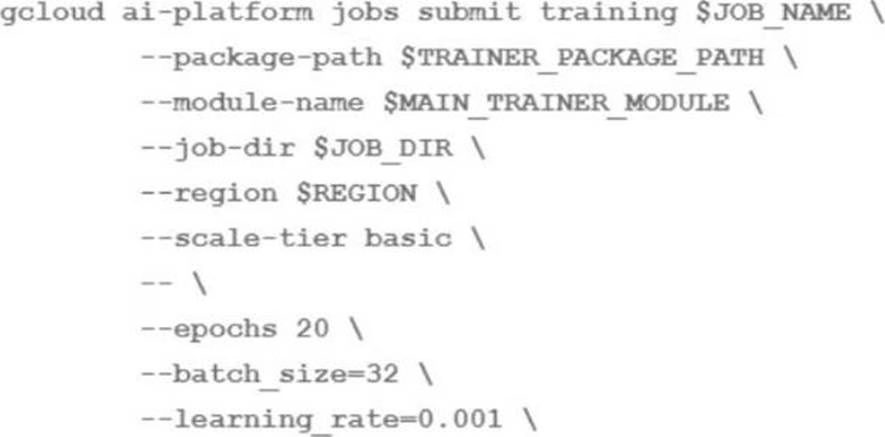

You are training an LSTM-based model on Al Platform to summarize text using the following job submission script:

You want to ensure that training time is minimized without significantly compromising the accuracy of your model.

What should you do?

- A . Modify the ‘epochs’ parameter

- B . Modify the ‘scale-tier’ parameter

- C . Modify the batch size’ parameter

- D . Modify the ‘learning rate’ parameter

You are training a TensorFlow model on a structured data set with 100 billion records stored in several CSV files. You need to improve the input/output execution performance.

What should you do?

- A . Load the data into BigQuery and read the data from BigQuery.

- B . Load the data into Cloud Bigtable, and read the data from Bigtable

- C . Convert the CSV files into shards of TFRecords, and store the data in Cloud Storage

- D . Convert the CSV files into shards of TFRecords, and store the data in the Hadoop Distributed File System (HDFS)

You have deployed multiple versions of an image classification model on Al Platform. You want to monitor the performance of the model versions overtime.

How should you perform this comparison?

- A . Compare the loss performance for each model on a held-out dataset.

- B . Compare the loss performance for each model on the validation data

- C . Compare the receiver operating characteristic (ROC) curve for each model using the What-lf Tool

- D . Compare the mean average precision across the models using the Continuous Evaluation feature

Latest Professional Machine Learning Engineer Dumps Valid Version with 60 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund