Google Professional Machine Learning Engineer Google Professional Machine Learning Engineer Online Training

Google Professional Machine Learning Engineer Online Training

The questions for Professional Machine Learning Engineer were last updated at Feb 14,2026.

- Exam Code: Professional Machine Learning Engineer

- Exam Name: Google Professional Machine Learning Engineer

- Certification Provider: Google

- Latest update: Feb 14,2026

You need to design a customized deep neural network in Keras that will predict customer purchases based on their purchase history. You want to explore model performance using multiple model architectures, store training data, and be able to compare the evaluation metrics in the same dashboard.

What should you do?

- A . Create multiple models using AutoML Tables

- B . Automate multiple training runs using Cloud Composer

- C . Run multiple training jobs on Al Platform with similar job names

- D . Create an experiment in Kubeflow Pipelines to organize multiple runs

Your team needs to build a model that predicts whether images contain a driver’s license, passport, or credit card. The data engineering team already built the pipeline and generated a dataset composed of 10,000 images with driver’s licenses, 1,000 images with passports, and 1,000 images with credit cards. You now have to train a model with the following label map: [‘driversjicense’, ‘passport’, ‘credit_card’].

Which loss function should you use?

- A . Categorical hinge

- B . Binary cross-entropy

- C . Categorical cross-entropy

- D . Sparse categorical cross-entropy

You are an ML engineer at a global car manufacturer. You need to build an ML model to predict car sales in different cities around the world.

Which features or feature crosses should you use to train city-specific relationships between car type and number of sales?

- A . Three individual features binned latitude, binned longitude, and one-hot encoded car type

- B . One feature obtained as an element-wise product between latitude, longitude, and car type

- C . One feature obtained as an element-wise product between binned latitude, binned longitude, and one-hot encoded car type

- D . Two feature crosses as a element-wise product the first between binned latitude and one-hot encoded car type, and the second between binned longitude and one-hot encoded car type

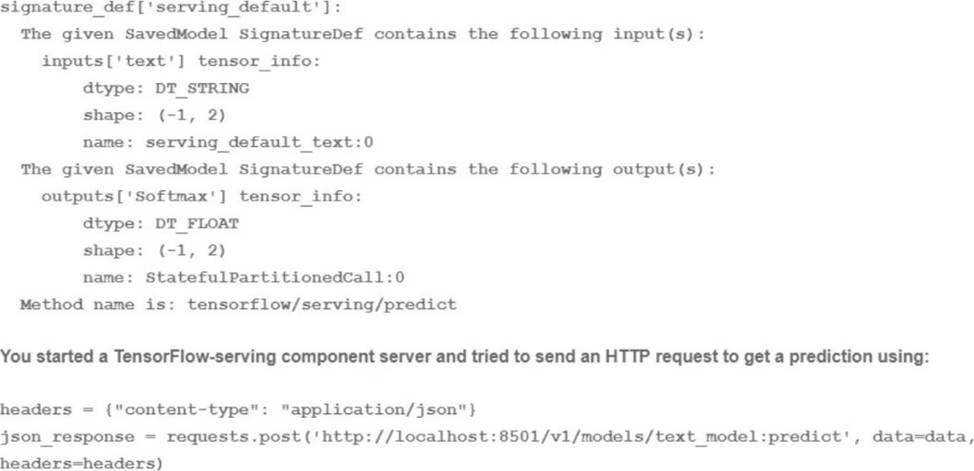

You trained a text classification model.

You have the following SignatureDefs:

What is the correct way to write the predict request?

- A . data json.dumps({"signature_name": "serving_default’ "instances": [fab’, ‘be1, ‘cd’]]})

- B . data json dumps({"signature_name": "serving_default"! "instances": [[‘a’, ‘b’, "c", ‘d’, ‘e’, ‘f’]]})

- C . data json.dumps({"signature_name": "serving_default, "instances": [[‘a’, ‘b ‘c’1, [d ‘e T]]})

- D . data json dumps({"signature_name": f,serving_default", "instances": [[‘a’, ‘b’], [c ‘d’], [‘e T]]})

You work for a social media company. You need to detect whether posted images contain cars. Each training example is a member of exactly one class. You have trained an object detection neural network and deployed the model version to Al Platform Prediction for evaluation. Before deployment, you created an evaluation job and attached it to the Al Platform Prediction model version. You notice that the precision is lower than your business requirements allow.

How should you adjust the model’s final layer softmax threshold to increase precision?

- A . Increase the recall

- B . Decrease the recall.

- C . Increase the number of false positives

- D . Decrease the number of false negatives

You developed an ML model with Al Platform, and you want to move it to production. You serve a few thousand queries per second and are experiencing latency issues. Incoming requests are served by a load balancer that distributes them across multiple Kubeflow CPU-only pods running on Google Kubernetes Engine (GKE). Your goal is to improve the serving latency without changing the underlying infrastructure.

What should you do?

- A . Significantly increase the max_batch_size TensorFlow Serving parameter

- B . Switch to the tensorflow-model-server-universal version of TensorFlow Serving

- C . Significantly increase the max_enqueued_batches TensorFlow Serving parameter

- D . Recompile TensorFlow Serving using the source to support CPU-specific optimizations Instruct GKE to choose an appropriate baseline minimum CPU platform for serving nodes

You built and manage a production system that is responsible for predicting sales numbers. Model accuracy is crucial, because the production model is required to keep up with market changes. Since being deployed to production, the model hasn’t changed; however the accuracy of the model has steadily deteriorated.

What issue is most likely causing the steady decline in model accuracy?

- A . Poor data quality

- B . Lack of model retraining

- C . Too few layers in the model for capturing information

- D . Incorrect data split ratio during model training, evaluation, validation, and test

You are an ML engineer at a large grocery retailer with stores in multiple regions. You have been asked to create an inventory prediction model. Your models features include region, location, historical demand, and seasonal popularity. You want the algorithm to learn from new inventory data on a daily basis.

Which algorithms should you use to build the model?

- A . Classification

- B . Reinforcement Learning

- C . Recurrent Neural Networks (RNN)

- D . Convolutional Neural Networks (CNN)

You need to train a computer vision model that predicts the type of government ID present in a given image using a GPU-powered virtual machine on Compute Engine.

You use the following parameters:

• Optimizer: SGD

• Image shape 224×224

• Batch size 64

• Epochs 10

• Verbose 2

During training you encounter the following error: ResourceExhaustedError: out of Memory (oom) when allocating tensor.

What should you do?

- A . Change the optimizer

- B . Reduce the batch size

- C . Change the learning rate

- D . Reduce the image shape

You have been asked to develop an input pipeline for an ML training model that processes images from disparate sources at a low latency. You discover that your input data does not fit in memory.

How should you create a dataset following Google-recommended best practices?

- A . Create a tf.data.Dataset.prefetch transformation

- B . Convert the images to tf .Tensor Objects, and then run Dataset. from_tensor_slices{).

- C . Convert the images to tf .Tensor Objects, and then run tf. data. Dataset. from_tensors ().

- D . Convert the images Into TFRecords, store the images in Cloud Storage, and then use the tf. data API to read the images for training

Latest Professional Machine Learning Engineer Dumps Valid Version with 60 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund