Google Professional Machine Learning Engineer Google Professional Machine Learning Engineer Online Training

Google Professional Machine Learning Engineer Online Training

The questions for Professional Machine Learning Engineer were last updated at Feb 13,2026.

- Exam Code: Professional Machine Learning Engineer

- Exam Name: Google Professional Machine Learning Engineer

- Certification Provider: Google

- Latest update: Feb 13,2026

You work for a toy manufacturer that has been experiencing a large increase in demand. You need to build an ML model to reduce the amount of time spent by quality control inspectors checking for product defects. Faster defect detection is a priority. The factory does not have reliable Wi-Fi. Your company wants to implement the new ML model as soon as possible.

Which model should you use?

- A . AutoML Vision model

- B . AutoML Vision Edge mobile-versatile-1 model

- C . AutoML Vision Edge mobile-low-latency-1 model

- D . AutoML Vision Edge mobile-high-accuracy-1 model

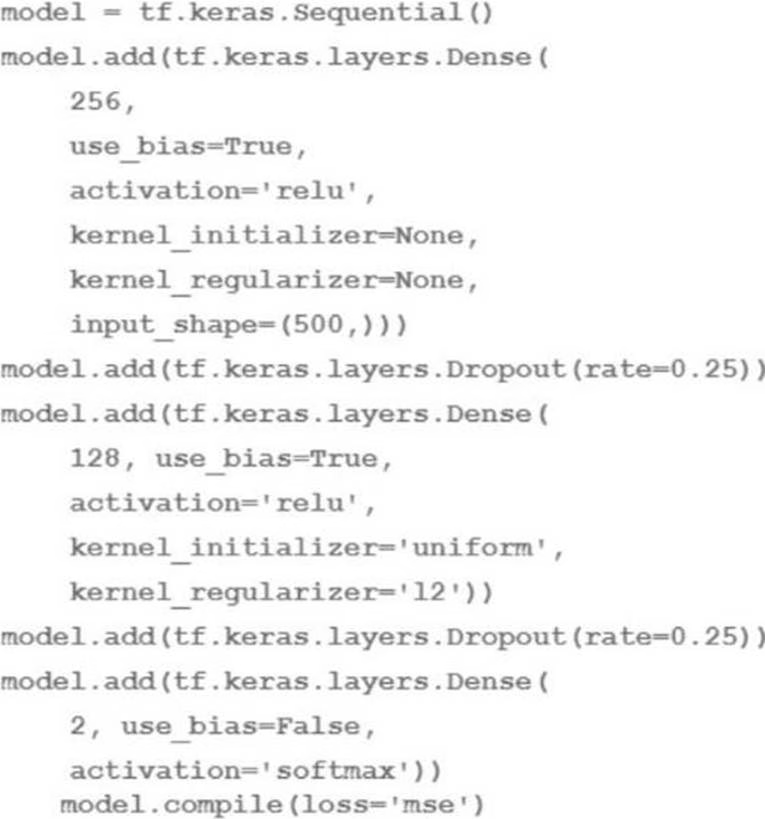

You are going to train a DNN regression model with Keras APIs using this code:

How many trainable weights does your model have? (The arithmetic below is correct.)

- A . 501*256+257*128+2 161154

- B . 500*256+256*128+128*2 161024

- C . 501*256+257*128+128*2161408

- D . 500*256*0 25+256*128*0 25+128*2 40448

You recently joined a machine learning team that will soon release a new project. As a lead on the project, you are asked to determine the production readiness of the ML components. The team has already tested features and data, model development, and infrastructure.

Which additional readiness check should you recommend to the team?

- A . Ensure that training is reproducible

- B . Ensure that all hyperparameters are tuned

- C . Ensure that model performance is monitored

- D . Ensure that feature expectations are captured in the schema

You recently designed and built a custom neural network that uses critical dependencies specific to your organization’s framework. You need to train the model using a managed training service on Google Cloud. However, the ML framework and related dependencies are not supported by Al Platform Training. Also, both your model and your data are too large to fit in memory on a single machine. Your ML framework of choice uses the scheduler, workers, and servers distribution structure.

What should you do?

- A . Use a built-in model available on Al Platform Training

- B . Build your custom container to run jobs on Al Platform Training

- C . Build your custom containers to run distributed training jobs on Al Platform Training

- D . Reconfigure your code to a ML framework with dependencies that are supported by Al Platform Training

You are an ML engineer in the contact center of a large enterprise. You need to build a sentiment analysis tool that predicts customer sentiment from recorded phone conversations. You need to identify the best approach to building a model while ensuring that the gender, age, and cultural differences of the customers who called the contact center do not impact any stage of the model development pipeline and results.

What should you do?

- A . Extract sentiment directly from the voice recordings

- B . Convert the speech to text and build a model based on the words

- C . Convert the speech to text and extract sentiments based on the sentences

- D . Convert the speech to text and extract sentiment using syntactical analysis

You work for an advertising company and want to understand the effectiveness of your company’s latest advertising campaign. You have streamed 500 MB of campaign data into BigQuery. You want to query the table, and then manipulate the results of that query with a pandas dataframe in an Al Platform notebook.

What should you do?

- A . Use Al Platform Notebooks’ BigQuery cell magic to query the data, and ingest the results as a pandas dataframe

- B . Export your table as a CSV file from BigQuery to Google Drive, and use the Google Drive API to ingest the file into your notebook instance

- C . Download your table from BigQuery as a local CSV file, and upload it to your Al Platform notebook instance Use pandas. read_csv to ingest the file as a pandas dataframe

- D . From a bash cell in your Al Platform notebook, use the bq extract command to export the table as a CSV file to Cloud Storage, and then use gsutii cp to copy the data into the notebook Use pandas. read_csv to ingest the file as a pandas dataframe

You have trained a model on a dataset that required computationally expensive preprocessing operations. You need to execute the same preprocessing at prediction time. You deployed the model on Al Platform for high-throughput online prediction.

Which architecture should you use?

- A . • Validate the accuracy of the model that you trained on preprocessed data

• Create a new model that uses the raw data and is available in real time

• Deploy the new model onto Al Platform for online prediction - B . • Send incoming prediction requests to a Pub/Sub topic

• Transform the incoming data using a Dataflow job

• Submit a prediction request to Al Platform using the transformed data

• Write the predictions to an outbound Pub/Sub queue - C . • Stream incoming prediction request data into Cloud Spanner

• Create a view to abstract your preprocessing logic.

• Query the view every second for new records

• Submit a prediction request to Al Platform using the transformed data

• Write the predictions to an outbound Pub/Sub queue. - D . • Send incoming prediction requests to a Pub/Sub topic

• Set up a Cloud Function that is triggered when messages are published to the Pub/Sub topic.

• Implement your preprocessing logic in the Cloud Function

• Submit a prediction request to Al Platform using the transformed data

• Write the predictions to an outbound Pub/Sub queue

You are building a model to predict daily temperatures. You split the data randomly and then transformed the training and test datasets. Temperature data for model training is uploaded hourly. During testing, your model performed with 97% accuracy; however, after deploying to production, the model’s accuracy dropped to 66%.

How can you make your production model more accurate?

- A . Normalize the data for the training, and test datasets as two separate steps.

- B . Split the training and test data based on time rather than a random split to avoid leakage

- C . Add more data to your test set to ensure that you have a fair distribution and sample for testing

- D . Apply data transformations before splitting, and cross-validate to make sure that the transformations are applied to both the training and test sets.

You have a demand forecasting pipeline in production that uses Dataflow to preprocess raw data prior to model training and prediction. During preprocessing, you employ Z-score normalization on data stored in BigQuery and write it back to BigQuery. New training data is added every week. You want to make the process more efficient by minimizing computation time and manual intervention.

What should you do?

- A . Normalize the data using Google Kubernetes Engine

- B . Translate the normalization algorithm into SQL for use with BigQuery

- C . Use the normalizer_fn argument in TensorFlow’s Feature Column API

- D . Normalize the data with Apache Spark using the Dataproc connector for BigQuery

You were asked to investigate failures of a production line component based on sensor readings. After receiving the dataset, you discover that less than 1% of the readings are positive examples representing failure incidents. You have tried to train several classification models, but none of them converge.

How should you resolve the class imbalance problem?

- A . Use the class distribution to generate 10% positive examples

- B . Use a convolutional neural network with max pooling and softmax activation

- C . Downsample the data with upweighting to create a sample with 10% positive examples

- D . Remove negative examples until the numbers of positive and negative examples are equal

Latest Professional Machine Learning Engineer Dumps Valid Version with 60 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund