Google Professional Cloud Architect Google Certified Professional – Cloud Architect (GCP) Online Training

Google Professional Cloud Architect Online Training

The questions for Professional Cloud Architect were last updated on Feb 27, 2026.

- Exam Code: Professional Cloud Architect

- Exam Name: Google Certified Professional – Cloud Architect (GCP)

- Certification Provider: Google

- Latest update: Feb 27, 2026

Your application needs to process credit card transactions. You want the smallest scope of Payment Card Industry (PCI) compliance without compromising the ability to analyze transactional data and trends relating to which payment methods are used.

How should you design your architecture?

- A . Create a tokenizer service and store only tokenized data.

- B . Create separate projects that only process credit card data.

- C . Create separate subnetworks and isolate the components that process credit card data.

- D . Streamline the audit discovery phase by labeling all of the virtual machines (VMs) that process PCI data.

- E . Enable Logging export to Google BigQuery and use ACLs and views to scope the data shared with the auditor.
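To make the tokenization idea in option A concrete, here is a minimal Python sketch. It is an illustration only: `TokenVault`, `to_analytics_record`, and the record fields are hypothetical names, the vault is an in-memory dictionary, and a real tokenizer would be an isolated, audited service rather than a class in the application.

```python
import secrets


class TokenVault:
    """Toy in-memory tokenizer (hypothetical); stands in for an isolated service."""

    def __init__(self):
        self._token_by_pan = {}
        self._pan_by_token = {}

    def tokenize(self, pan: str) -> str:
        # Reuse an existing token so repeat use of the same card can be correlated.
        if pan in self._token_by_pan:
            return self._token_by_pan[pan]
        token = "tok_" + secrets.token_hex(16)  # random, not derived from the card number
        self._token_by_pan[pan] = token
        self._pan_by_token[token] = pan
        return token

    def detokenize(self, token: str) -> str:
        # Only the vault can map a token back to the card number.
        return self._pan_by_token[token]


def to_analytics_record(vault: TokenVault, pan: str, method: str, amount_cents: int) -> dict:
    # The analytics side sees the token and non-sensitive attributes, never the card number.
    return {"card_token": vault.tokenize(pan), "method": method, "amount_cents": amount_cents}
```

In this sketch, trend analysis by payment method works on the `method` field and the token, so the systems holding analytics records never store the card number itself.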

Your company is moving 75 TB of data into Google Cloud. You want to use Cloud Storage and follow Google-recommended practices.

What should you do?

- A . Move your data onto a Transfer Appliance. Use a Transfer Appliance Rehydrator to decrypt the data into Cloud Storage.

- B . Move your data onto a Transfer Appliance. Use Cloud Dataprep to decrypt the data into Cloud Storage.

- C . Install gsutil on each server that contains data. Use resumable transfers to upload the data into Cloud Storage.

- D . Install gsutil on each server containing data. Use streaming transfers to upload the data into Cloud Storage.
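When weighing an online upload against a shipped appliance, a rough transfer-time estimate helps. The sketch below is back-of-the-envelope arithmetic only. The link speeds are assumptions (the question does not state the available bandwidth), 1 TB is taken as 10^12 bytes, and `transfer_days` is a hypothetical helper name.

```python
def transfer_days(size_tb: float, bandwidth_mbps: float, utilization: float = 1.0) -> float:
    """Estimate days needed to move size_tb terabytes over a link of bandwidth_mbps."""
    bits = size_tb * 1e12 * 8                       # decimal terabytes to bits
    seconds = bits / (bandwidth_mbps * 1e6 * utilization)
    return seconds / 86_400                         # seconds per day


# Assumed link speeds, for comparison only.
for mbps in (100, 1_000, 10_000):
    print(f"{mbps} Mbps: {transfer_days(75, mbps):.1f} days")
```

Under these assumptions, 75 TB takes roughly 69 days at a fully used 100 Mbps link and roughly 7 days at 1 Gbps.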

You have been asked to select the storage system for the click-data of your company’s large portfolio of websites. This data is streamed in from a custom website analytics package at a typical rate of 6,000 clicks per minute, with bursts of up to 8,500 clicks per second. It must be stored for future analysis by your data science and user experience teams.

Which storage infrastructure should you choose?

- A . Google Cloud SQL

- B . Google Cloud Bigtable

- C . Google Cloud Storage

- D . Google Cloud Datastore
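The two rates in the question use different units (per minute and per second), so it helps to put them on the same scale. The following Python sketch does only that arithmetic; the variable names are illustrative.

```python
TYPICAL_CLICKS_PER_MINUTE = 6_000
BURST_CLICKS_PER_SECOND = 8_500

# Convert the typical rate to clicks per second.
typical_per_second = TYPICAL_CLICKS_PER_MINUTE / 60

# How many times larger the burst rate is than the typical rate.
burst_ratio = BURST_CLICKS_PER_SECOND / typical_per_second

# Clicks written per day if the typical rate held all day.
typical_per_day = TYPICAL_CLICKS_PER_MINUTE * 60 * 24

print(typical_per_second, burst_ratio, typical_per_day)
```

The typical rate is 100 clicks per second, so a burst is 85 times the typical write load, and a day at the typical rate is 8,640,000 clicks.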

You need to design a solution for global load balancing based on the URL path being requested. You need to ensure operations reliability and end-to-end in-transit encryption based on Google best practices.

What should you do?

- A . Create a cross-region load balancer with URL Maps.

- B . Create an HTTPS load balancer with URL maps.

- C . Create appropriate instance groups and instances. Configure SSL proxy load balancing.

- D . Create a global forwarding rule. Configure SSL proxy balancing.
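Routing "based on the URL path being requested" can be pictured as a lookup from path patterns to backends. The Python sketch below is a simplified model of that idea, not the load balancer's actual matching algorithm or API. `pick_backend`, the rule table, and the backend names are all hypothetical.

```python
def pick_backend(path: str, path_rules: dict, default_backend: str) -> str:
    """Return the backend for the longest matching rule, or the default."""
    best = None
    for pattern, backend in path_rules.items():
        if pattern.endswith("/*"):
            prefix = pattern[:-1]            # "/video/*" matches paths under "/video/"
            matched = path.startswith(prefix)
        else:
            matched = path == pattern        # patterns without a wildcard match exactly
        if matched and (best is None or len(pattern) > len(best[0])):
            best = (pattern, backend)
    return best[1] if best else default_backend


# Hypothetical rule table for illustration.
rules = {
    "/video/*": "video-backend",
    "/video/hd/*": "hd-backend",
    "/static/*": "static-backend",
}
print(pick_backend("/video/hd/clip.mp4", rules, "web-backend"))
```

In this model the most specific (longest) matching pattern wins, and any path with no matching rule falls through to the default backend.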

You need to set up Microsoft SQL Server on GCP. Management requires that there’s no downtime in case of a data center outage in any of the zones within a GCP region.

What should you do?

- A . Configure a Cloud SQL instance with high availability enabled.

- B . Configure a Cloud Spanner instance with a regional instance configuration.

- C . Set up SQL Server on Compute Engine, using Always On Availability Groups using Windows Failover Clustering. Place nodes in different subnets.

- D . Set up SQL Server Always On Availability Groups using Windows Failover Clustering. Place nodes in different zones.

You have developed a non-critical update to your application that is running in a managed instance group, and have created a new instance template with the update that you want to release. To prevent any possible impact to the application, you don’t want to update any running instances. You want any new instances that are created by the managed instance group to contain the new update.

What should you do?

- A . Start a new rolling restart operation.

- B . Start a new rolling replace operation.

- C . Start a new rolling update. Select the Proactive update mode.

- D . Start a new rolling update. Select the Opportunistic update mode.
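The difference between the proactive and opportunistic update modes can be pictured with a toy model. The Python sketch below is a deliberate simplification: `ToyInstanceGroup` is a hypothetical class, not a Compute Engine API, and a real proactive rollout replaces instances gradually rather than all at once.

```python
class ToyInstanceGroup:
    """Toy model of a managed instance group (hypothetical, for illustration)."""

    def __init__(self, template: str, size: int):
        self.template = template
        # Each entry records the template an instance was created from.
        self.instances = [template] * size

    def set_template(self, new_template: str, mode: str) -> None:
        if mode not in ("proactive", "opportunistic"):
            raise ValueError(f"unknown update mode: {mode}")
        self.template = new_template
        if mode == "proactive":
            # Proactive: running instances are brought to the new template.
            self.instances = [new_template] * len(self.instances)
        # Opportunistic: running instances are left untouched.

    def scale_out(self, count: int) -> None:
        # New instances always come from the group's current template.
        self.instances += [self.template] * count


group = ToyInstanceGroup("v1", size=3)
group.set_template("v2", mode="opportunistic")
group.scale_out(2)
print(group.instances)
```

In this model, the opportunistic mode leaves the three running `v1` instances alone while the two newly created instances use `v2`.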

Your company is developing a new application that will allow globally distributed users to upload pictures and share them with other selected users. The application will support millions of concurrent users. You want to allow developers to focus on just building code without having to create and maintain the underlying infrastructure.

Which service should you use to deploy the application?

- A . App Engine

- B . Cloud Endpoints

- C . Compute Engine

- D . Google Kubernetes Engine

You have developed an application using Cloud ML Engine that recognizes famous paintings from uploaded images. You want to test the application and allow specific people to upload images for the next 24 hours. Not all users have a Google Account.

How should you have users upload images?

- A . Have users upload the images to Cloud Storage. Protect the bucket with a password that expires after 24 hours.

- B . Have users upload the images to Cloud Storage using a signed URL that expires after 24 hours.

- C . Create an App Engine web application where users can upload images. Configure App Engine to disable the application after 24 hours. Authenticate users via Cloud Identity.

- D . Create an App Engine web application where users can upload images for the next 24 hours. Authenticate users via Cloud Identity.
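The general idea behind a signed URL with an expiry (option B) is that the URL itself carries a signature and a deadline, so the holder needs no account. The Python sketch below shows that concept with a generic HMAC scheme. It is not Cloud Storage's actual signing algorithm, and `SECRET_KEY`, the host name, and both function names are hypothetical.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-only-secret"  # hypothetical; real signing uses service account credentials


def _signature(object_name: str, expires_at: int) -> str:
    message = f"PUT\n{object_name}\n{expires_at}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def sign_upload_url(object_name: str, expires_at: int) -> str:
    """Build an upload URL that embeds its expiry time and a signature over it."""
    sig = _signature(object_name, expires_at)
    return f"https://storage.example.invalid/{object_name}?expires={expires_at}&sig={sig}"


def is_valid(object_name: str, expires_at: int, signature: str, now: int) -> bool:
    """Accept the request only if the signature matches and the deadline has not passed."""
    expected = _signature(object_name, expires_at)
    return now < expires_at and hmac.compare_digest(expected, signature)


issued_at = 1_700_000_000                  # arbitrary Unix timestamp for the example
expires_at = issued_at + 24 * 60 * 60      # 24 hours later
url = sign_upload_url("painting.jpg", expires_at)
```

Because the expiry is part of the signed message, a holder cannot extend the deadline by editing the `expires` parameter without invalidating the signature.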

Your development team has installed a new Linux kernel module on the batch servers in Google Compute Engine (GCE) virtual machines (VMs) to speed up the nightly batch process. Two days after the installation, 50% of the batch servers failed the nightly batch run. You want to collect details on the failure to pass back to the development team.

Which three actions should you take? Choose 3 answers

- A . Use Stackdriver Logging to search for the module log entries.

- B . Read the debug GCE Activity log using the API or Cloud Console.

- C . Use gcloud or Cloud Console to connect to the serial console and observe the logs.

- D . Identify whether a live migration event of the failed server occurred, using the activity log.

- E . Adjust the Google Stackdriver timeline to match the failure time, and observe the batch server metrics.

- F . Export a debug VM into an image, and run the image on a local server where kernel log messages will be displayed on the native screen.

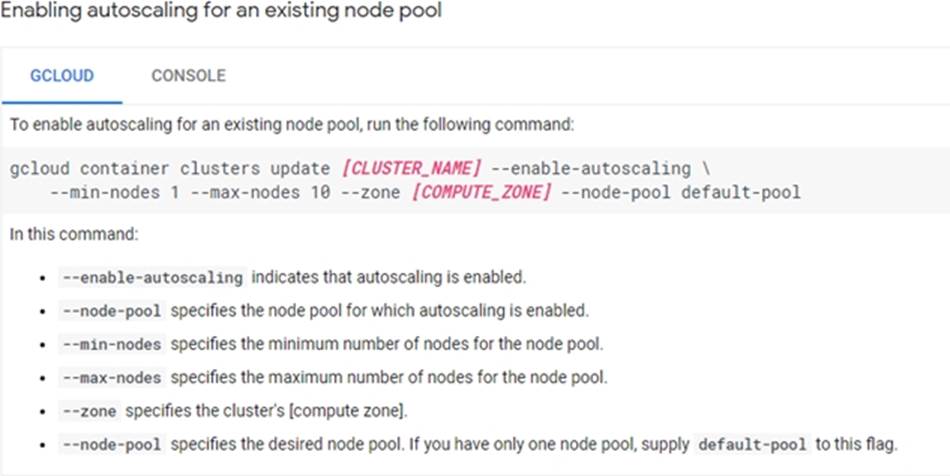

You want to enable your running Google Kubernetes Engine cluster to scale as demand for your application changes.

What should you do?

- A . Add additional nodes to your Kubernetes Engine cluster using the following command:

gcloud container clusters resize CLUSTER_NAME --size 10

- B . Add a tag to the instances in the cluster with the following command:

gcloud compute instances add-tags INSTANCE --tags enable-autoscaling max-nodes-10

- C . Update the existing Kubernetes Engine cluster with the following command:

gcloud alpha container clusters update mycluster --enable-autoscaling --min-nodes=1 --max-nodes=10

- D . Create a new Kubernetes Engine cluster with the following command:

gcloud alpha container clusters create mycluster --enable-autoscaling --min-nodes=1 --max-nodes=10

and redeploy your application
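The minimum of 1 and maximum of 10 nodes that appear in the options above act as bounds on whatever node count demand would otherwise call for. The Python sketch below shows only that bounding step. `target_node_count` is a hypothetical helper, and how an autoscaler arrives at the desired count is outside this sketch.

```python
def target_node_count(desired: int, min_nodes: int = 1, max_nodes: int = 10) -> int:
    """Clamp the node count that demand calls for into the configured range."""
    return max(min_nodes, min(desired, max_nodes))


for desired in (0, 5, 25):
    print(desired, "->", target_node_count(desired))
```

With these bounds, the cluster never drops below 1 node when idle and never grows past 10 nodes under load.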
