Databricks Databricks Machine Learning Associate Databricks Certified Machine Learning Associate Exam Online Training

Databricks Databricks Machine Learning Associate Online Training

The questions for Databricks Machine Learning Associate were last updated at Feb 04,2026.

- Exam Code: Databricks Machine Learning Associate

- Exam Name: Databricks Certified Machine Learning Associate Exam

- Certification Provider: Databricks

- Latest update: Feb 04,2026

What is the name of the method that transforms categorical features into a series of binary indicator feature variables?

- A . Leave-one-out encoding

- B . Target encoding

- C . One-hot encoding

- D . Categorical

- E . String indexing

A data scientist wants to parallelize the training of trees in a gradient boosted tree to speed up the training process. A colleague suggests that parallelizing a boosted tree algorithm can be difficult.

Which of the following describes why?

- A . Gradient boosting is not a linear algebra-based algorithm which is required for parallelization

- B . Gradient boosting requires access to all data at once which cannot happen during parallelization.

- C . Gradient boosting calculates gradients in evaluation metrics using all cores which prevents parallelization.

- D . Gradient boosting is an iterative algorithm that requires information from the previous iteration to perform the next step.

A data scientist wants to efficiently tune the hyperparameters of a scikit-learn model. They elect to use the Hyperopt library’s fmin operation to facilitate this process. Unfortunately, the final model is not very accurate. The data scientist suspects that there is an issue with the objective_function being passed as an argument to fmin.

They use the following code block to create the objective_function:

Which of the following changes does the data scientist need to make to their objective_function in order to produce a more accurate model?

- A . Add test set validation process

- B . Add a random_state argument to the RandomForestRegressor operation

- C . Remove the mean operation that is wrapping the cross_val_score operation

- D . Replace the r2 return value with -r2

- E . Replace the fmin operation with the fmax operation

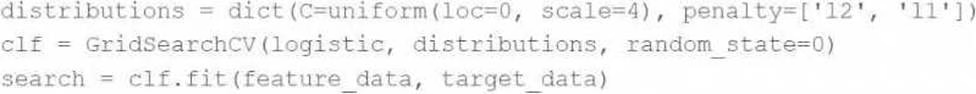

A data scientist is attempting to tune a logistic regression model logistic using scikit-learn. They want to specify a search space for two hyperparameters and let the tuning process randomly select values for each evaluation.

They attempt to run the following code block, but it does not accomplish the desired task:

Which of the following changes can the data scientist make to accomplish the task?

- A . Replace the GridSearchCV operation with RandomizedSearchCV

- B . Replace the GridSearchCV operation with cross_validate

- C . Replace the GridSearchCV operation with ParameterGrid

- D . Replace the random_state=0 argument with random_state=1

- E . Replace the penalty= [’12’, ’11’] argument with penalty=uniform (’12’, ’11’)

Which of the following tools can be used to parallelize the hyperparameter tuning process for single-node machine learning models using a Spark cluster?

- A . MLflow Experiment Tracking

- B . Spark ML

- C . Autoscaling clusters

- D . Autoscaling clusters

- E . Delta Lake

Which of the following describes the relationship between native Spark DataFrames and pandas API on Spark DataFrames?

- A . pandas API on Spark DataFrames are single-node versions of Spark DataFrames with additional metadata

- B . pandas API on Spark DataFrames are more performant than Spark DataFrames

- C . pandas API on Spark DataFrames are made up of Spark DataFrames and additional metadata

- D . pandas API on Spark DataFrames are less mutable versions of Spark DataFrames

- E . pandas API on Spark DataFrames are unrelated to Spark DataFrames

A data scientist has written a data cleaning notebook that utilizes the pandas library, but their colleague has suggested that they refactor their notebook to scale with big data.

Which of the following approaches can the data scientist take to spend the least amount of time refactoring their notebook to scale with big data?

- A . They can refactor their notebook to process the data in parallel.

- B . They can refactor their notebook to use the PySpark DataFrame API.

- C . They can refactor their notebook to use the Scala Dataset API.

- D . They can refactor their notebook to use Spark SQL.

- E . They can refactor their notebook to utilize the pandas API on Spark.

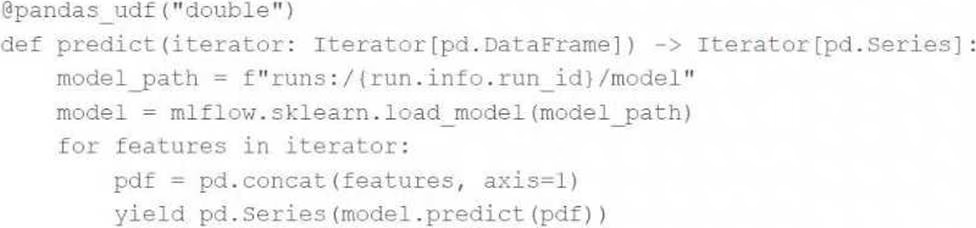

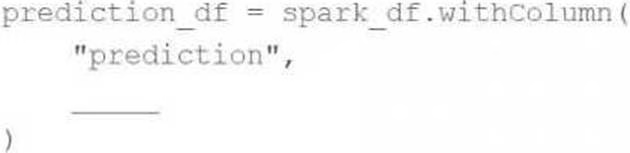

A data scientist has defined a Pandas UDF function predict to parallelize the inference process for a single-node model:

They have written the following incomplete code block to use predict to score each record of Spark DataFrame spark_df:

Which of the following lines of code can be used to complete the code block to successfully complete the task?

- A . predict(*spark_df.columns)

- B . mapInPandas(predict)

- C . predict(Iterator(spark_df))

- D . mapInPandas(predict(spark_df.columns))

- E . predict(spark_df.columns)

Which of the Spark operations can be used to randomly split a Spark DataFrame into a training DataFrame and a test DataFrame for downstream use?

- A . TrainValidationSplit

- B . DataFrame.where

- C . CrossValidator

- D . TrainValidationSplitModel

- E . DataFrame.randomSplit

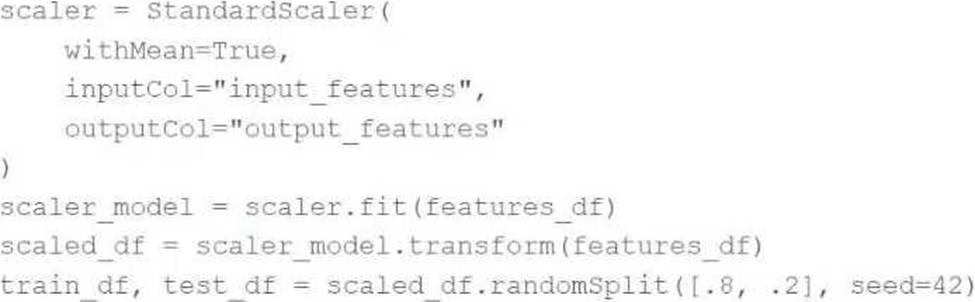

A data scientist is using Spark ML to engineer features for an exploratory machine learning project.

They decide they want to standardize their features using the following code block:

Upon code review, a colleague expressed concern with the features being standardized prior to splitting the data into a training set and a test set.

Which of the following changes can the data scientist make to address the concern?

- A . Utilize the MinMaxScaler object to standardize the training data according to global minimum and maximum values

- B . Utilize the MinMaxScaler object to standardize the test data according to global minimum and maximum values

- C . Utilize a cross-validation process rather than a train-test split process to remove the need for standardizing data

- D . Utilize the Pipeline API to standardize the training data according to the test data’s summary statistics

- E . Utilize the Pipeline API to standardize the test data according to the training data’s summary statistics

Latest Databricks Machine Learning Associate Dumps Valid Version with 74 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund