Databricks Databricks Certified Professional Data Engineer Databricks Certified Data Engineer Professional Exam Online Training

Databricks Databricks Certified Professional Data Engineer Online Training

The questions for Databricks Certified Professional Data Engineer were last updated at Feb 20,2026.

- Exam Code: Databricks Certified Professional Data Engineer

- Exam Name: Databricks Certified Data Engineer Professional Exam

- Certification Provider: Databricks

- Latest update: Feb 20,2026

A data architect has designed a system in which two Structured Streaming jobs will concurrently write to a single bronze Delta table. Each job is subscribing to a different topic from an Apache Kafka source, but they will write data with the same schema. To keep the directory structure simple, a data engineer has decided to nest a checkpoint directory to be shared by both streams.

The proposed directory structure is displayed below:

Which statement describes whether this checkpoint directory structure is valid for the given scenario and why?

- A . No; Delta Lake manages streaming checkpoints in the transaction log.

- B . Yes; both of the streams can share a single checkpoint directory.

- C . No; only one stream can write to a Delta Lake table.

- D . Yes; Delta Lake supports infinite concurrent writers.

- E . No; each of the streams needs to have its own checkpoint directory.

A Structured Streaming job deployed to production has been experiencing delays during peak hours of the day. At present, during normal execution, each microbatch of data is processed in less than 3 seconds. During peak hours of the day, execution time for each microbatch becomes very inconsistent, sometimes exceeding 30 seconds. The streaming write is currently configured with a trigger interval of 10 seconds.

Holding all other variables constant and assuming records need to be processed in less than 10 seconds, which adjustment will meet the requirement?

- A . Decrease the trigger interval to 5 seconds; triggering batches more frequently allows idle executors to begin processing the next batch while longer running tasks from previous batches finish.

- B . Increase the trigger interval to 30 seconds; setting the trigger interval near the maximum execution time observed for each batch is always best practice to ensure no records are dropped.

- C . The trigger interval cannot be modified without modifying the checkpoint directory; to maintain the current stream state, increase the number of shuffle partitions to maximize parallelism.

- D . Use the trigger once option and configure a Databricks job to execute the query every 10 seconds; this ensures all backlogged records are processed with each batch.

- E . Decrease the trigger interval to 5 seconds; triggering batches more frequently may prevent records from backing up and large batches from causing spill.

Which statement describes Delta Lake Auto Compaction?

- A . An asynchronous job runs after the write completes to detect if files could be further compacted;

if yes, an optimize job is executed toward a default of 1 GB. - B . Before a Jobs cluster terminates, optimize is executed on all tables modified during the most recent job.

- C . Optimized writes use logical partitions instead of directory partitions; because partition boundaries are only represented in metadata, fewer small files are written.

- D . Data is queued in a messaging bus instead of committing data directly to memory; all data is committed from the messaging bus in one batch once the job is complete.

- E . An asynchronous job runs after the write completes to detect if files could be further compacted; if yes, an optimize job is executed toward a default of 128 MB.

Which statement characterizes the general programming model used by Spark Structured Streaming?

- A . Structured Streaming leverages the parallel processing of GPUs to achieve highly parallel data throughput.

- B . Structured Streaming is implemented as a messaging bus and is derived from Apache Kafka.

- C . Structured Streaming uses specialized hardware and I/O streams to achieve sub-second latency for data transfer.

- D . Structured Streaming models new data arriving in a data stream as new rows appended to an unbounded table.

- E . Structured Streaming relies on a distributed network of nodes that hold incremental state values for cached stages.

Which configuration parameter directly affects the size of a spark-partition upon ingestion of data into Spark?

- A . spark.sql.files.maxPartitionBytes

- B . spark.sql.autoBroadcastJoinThreshold

- C . spark.sql.files.openCostInBytes

- D . spark.sql.adaptive.coalescePartitions.minPartitionNum

- E . spark.sql.adaptive.advisoryPartitionSizeInBytes

A Spark job is taking longer than expected. Using the Spark UI, a data engineer notes that the Min, Median, and Max Durations for tasks in a particular stage show the minimum and median time to complete a task as roughly the same, but the max duration for a task to be roughly 100 times as long as the minimum.

Which situation is causing increased duration of the overall job?

- A . Task queueing resulting from improper thread pool assignment.

- B . Spill resulting from attached volume storage being too small.

- C . Network latency due to some cluster nodes being in different regions from the source data

- D . Skew caused by more data being assigned to a subset of spark-partitions.

- E . Credential validation errors while pulling data from an external system.

Each configuration below is identical to the extent that each cluster has 400 GB total of RAM, 160 total cores and only one Executor per VM.

Given a job with at least one wide transformation, which of the following cluster configurations will result in maximum performance?

- A . • Total VMs; 1

• 400 GB per Executor

• 160 Cores / Executor - B . • Total VMs: 8

• 50 GB per Executor

• 20 Cores / Executor C.

• Total VMs: 4

• 100 GB per Executor

• 40 Cores/Executor D.

• Total VMs:2

• 200 GB per Executor

• 80 Cores / Executor

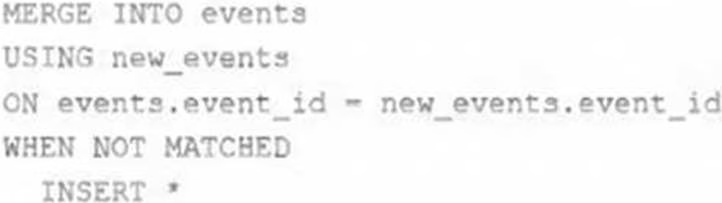

A junior data engineer on your team has implemented the following code block.

The view new_events contains a batch of records with the same schema as the events Delta table.

The event_id field serves as a unique key for this table.

When this query is executed, what will happen with new records that have the same event_id as an existing record?

- A . They are merged.

- B . They are ignored.

- C . They are updated.

- D . They are inserted.

- E . They are deleted.

A junior data engineer seeks to leverage Delta Lake’s Change Data Feed functionality to create a Type

1 table representing all of the values that have ever been valid for all rows in a bronze table created with the property delta.enableChangeDataFeed = true. They plan to execute the following code as a daily job:

Which statement describes the execution and results of running the above query multiple times?

- A . Each time the job is executed, newly updated records will be merged into the target table, overwriting previous values with the same primary keys.

- B . Each time the job is executed, the entire available history of inserted or updated records will be appended to the target table, resulting in many duplicate entries.

- C . Each time the job is executed, the target table will be overwritten using the entire history of inserted or updated records, giving the desired result.

- D . Each time the job is executed, the differences between the original and current versions are calculated; this may result in duplicate entries for some records.

- E . Each time the job is executed, only those records that have been inserted or updated since the last execution will be appended to the target table giving the desired result.

A new data engineer notices that a critical field was omitted from an application that writes its Kafka source to Delta Lake. This happened even though the critical field was in the Kafka source. That field was further missing from data written to dependent, long-term storage. The retention threshold on the Kafka service is seven days. The pipeline has been in production for three months.

Which describes how Delta Lake can help to avoid data loss of this nature in the future?

- A . The Delta log and Structured Streaming checkpoints record the full history of the Kafka producer.

- B . Delta Lake schema evolution can retroactively calculate the correct value for newly added fields, as long as the data was in the original source.

- C . Delta Lake automatically checks that all fields present in the source data are included in the ingestion layer.

- D . Data can never be permanently dropped or deleted from Delta Lake, so data loss is not possible under any circumstance.

- E . Ingestine all raw data and metadata from Kafka to a bronze Delta table creates a permanent, replayable history of the data state.

Latest Databricks Certified Professional Data Engineer Dumps Valid Version with 222 Q&As

Latest And Valid Q&A | Instant Download | Once Fail, Full Refund