Databricks Certified Data Engineer Professional Exam Online Training

The questions for Databricks Certified Data Engineer Professional were last updated on Feb 20, 2026.

- Exam Code: Databricks Certified Data Engineer Professional

- Exam Name: Databricks Certified Data Engineer Professional Exam

- Certification Provider: Databricks

- Latest update: Feb 20, 2026

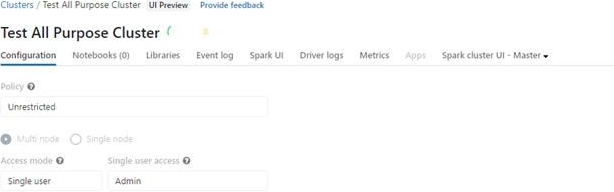

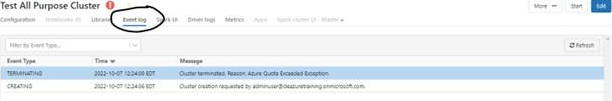

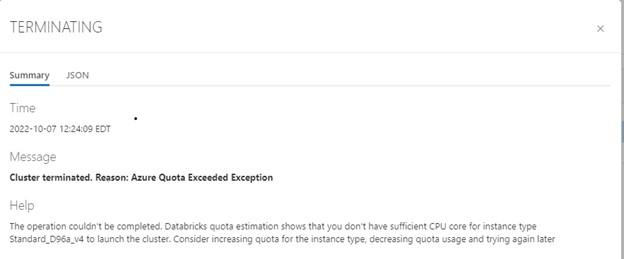

You were asked to set up a new all-purpose cluster, but the cluster is unable to start. Which of the following steps do you need to take to identify the root cause of the issue and the reason why the cluster was unable to start?

- A . Check the cluster driver logs

- B . Check the cluster event logs (Correct)

- C . Workspace logs

- D . Storage account

- E . Data plane

A SQL dashboard was built for the supply chain team to monitor inventory and product orders, but all of the timestamps on the dashboard are displayed in UTC, so the team requested that they be shown in the New York time zone instead.

How would you approach resolving this issue?

- A . Move the workspace from the Central US zone to the East US zone

- B . Change the timestamps in the Delta tables to America/New_York format

- C . Change the Spark configuration of the SQL endpoint to format the timestamps as America/New_York

- D . Under the SQL Admin Console, set the SQL configuration parameter for the time zone to America/New_York

- E . Add SET Timezone = America/New_York to every SQL query in the dashboard.
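
For background on what "showing timestamps in New York time" means: a timestamp stores a single instant, and the time zone only decides how that instant is rendered. The minimal sketch below uses Python's standard-library `zoneinfo` (Python 3.9+, and it assumes the system has IANA time zone data); it is not Databricks SQL code. The helper name `to_new_york` is hypothetical, and it assumes naive input values are UTC. It shows the same 17:00 UTC instant rendered in America/New_York in winter and in summer, including the daylight-saving shift that a fixed offset would get wrong.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo

NEW_YORK = ZoneInfo("America/New_York")


def to_new_york(utc_ts: datetime) -> datetime:
    """Render a UTC instant in the America/New_York time zone (hypothetical helper)."""
    if utc_ts.tzinfo is None:
        # Assumption: naive timestamps read from the source table are in UTC.
        utc_ts = utc_ts.replace(tzinfo=timezone.utc)
    # astimezone changes only the rendering; the underlying instant is unchanged.
    return utc_ts.astimezone(NEW_YORK)


# January: New York observes EST (UTC-5).
winter = to_new_york(datetime(2026, 1, 15, 17, 0))
# July: New York observes EDT (UTC-4).
summer = to_new_york(datetime(2026, 7, 15, 17, 0))

print(winter.isoformat())  # 2026-01-15T12:00:00-05:00
print(summer.isoformat())  # 2026-07-15T13:00:00-04:00
```

Because the stored instant never changes, converting at display time through a time zone setting avoids rewriting the underlying data.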

You have been asked to build a data pipeline and have noticed that it is a very large-scale ETL process with many data dependencies. Which of the following tools can be used to address this problem?

- A . AUTO LOADER

- B . JOBS and TASKS

- C . SQL Endpoints

- D . DELTA LIVE TABLES

- E . STRUCTURED STREAMING with MULTI HOP
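
To make "many data dependencies" concrete: the pipeline can be viewed as a directed acyclic graph in which each table depends on the tables it reads from, and an orchestrator must run every table only after its inputs are finished. The sketch below is a hypothetical illustration using Python's standard-library `graphlib` (Python 3.9+). It is not how Delta Live Tables or Jobs are implemented, and the table names and the `plan_batches` helper are made up for illustration. It groups tables into batches whose members could run in parallel.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each table maps to the set of tables it reads from.
dependencies = {
    "bronze_orders": set(),
    "bronze_inventory": set(),
    "silver_orders": {"bronze_orders"},
    "silver_inventory": {"bronze_inventory"},
    "gold_stock_levels": {"silver_orders", "silver_inventory"},
}


def plan_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group tables into batches; every table in a batch has all inputs finished."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the dependencies form a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tables whose inputs are all done
        batches.append(ready)
        sorter.done(*ready)  # mark the batch complete to unlock its dependents
    return batches


batches = plan_batches(dependencies)
print(batches)
# [['bronze_inventory', 'bronze_orders'], ['silver_inventory', 'silver_orders'], ['gold_stock_levels']]
```

Tracking this graph by hand becomes error-prone as the number of tables grows, which is the problem the question asks a tool to solve.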
