Which deployment orchestrator is the MOST SUITABLE for managing and automating your ML workflow?

You are a machine learning engineer at a fintech company tasked with developing and deploying an end-to-end machine learning workflow for fraud detection. The workflow involves multiple steps, including data extraction, preprocessing, feature engineering, model training, hyperparameter tuning, and deployment. The company requires the solution to be scalable, support complex dependencies between tasks, and provide robust monitoring and versioning capabilities. Additionally, the workflow needs to integrate seamlessly with existing AWS services.

A. Use AWS Step Functions to build a serverless workflow that integrates with SageMaker for model training and deployment, ensuring scalability and fault tolerance

B. Use AWS Lambda functions to manually trigger each step of the ML workflow, enabling flexible execution without needing a predefined orchestration tool

C. Use Amazon SageMaker Pipelines to orchestrate the entire ML workflow, leveraging its built-in integration with SageMaker features like training, tuning, and deployment

D. Use Apache Airflow to define and manage the workflow with custom DAGs (Directed Acyclic Graphs), integrating with AWS services through operators and hooks

Answer: C

Explanation:

Correct option:

Use Amazon SageMaker Pipelines to orchestrate the entire ML workflow, leveraging its built-in integration with SageMaker features like training, tuning, and deployment

Amazon SageMaker Pipelines is a purpose-built workflow orchestration service for automating machine learning (ML) development.

It integrates natively with SageMaker features such as model training, tuning, and deployment, and it supports versioning, lineage tracking, and automated execution of workflows. These capabilities map directly onto the scenario's requirements (task dependencies, monitoring, versioning, and AWS integration), which makes it the most suitable choice for managing an end-to-end ML workflow on AWS.

via – https://docs.aws.amazon.com/sagemaker/latest/dg/pipelines.html
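To make the "complex dependencies between tasks" requirement concrete, here is a minimal sketch in plain Python of how an orchestrator can derive a valid execution order from declared step dependencies. It uses the standard-library `graphlib` module, not the SageMaker SDK; the step names and the dependency layout are assumptions drawn from the scenario, not an actual pipeline definition.

```python
from graphlib import TopologicalSorter

# Hypothetical workflow: each step maps to the set of steps it depends on.
# Step names follow the scenario; the exact layout is an assumption.
WORKFLOW = {
    "extract": set(),
    "preprocess": {"extract"},
    "feature_engineering": {"preprocess"},
    "train": {"feature_engineering"},
    "tune": {"feature_engineering"},
    "deploy": {"train", "tune"},
}


def execution_order(dag):
    """Return one valid order in which every step runs after its dependencies."""
    # static_order() raises graphlib.CycleError if the graph contains a cycle.
    return list(TopologicalSorter(dag).static_order())


order = execution_order(WORKFLOW)
print(order)
```

A managed orchestrator does this resolution for you and adds retries, logging, and tracking on top; the sketch only shows the dependency-ordering idea.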

Incorrect options:

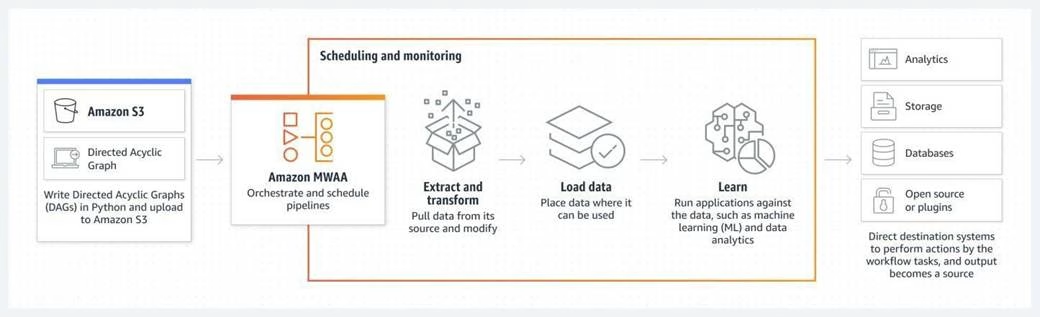

Use Apache Airflow to define and manage the workflow with custom DAGs (Directed Acyclic Graphs), integrating with AWS services through operators and hooks – Apache Airflow is a powerful orchestration tool that allows you to define complex workflows using custom DAGs. However, it requires significant setup and maintenance, and while it can integrate with AWS services, it does not provide the seamless, built-in integration with SageMaker that SageMaker Pipelines offers.

Amazon Managed Workflows for Apache Airflow (Amazon MWAA):

via – https://aws.amazon.com/managed-workflows-for-apache-airflow/

Use AWS Step Functions to build a serverless workflow that integrates with SageMaker for model training and deployment, ensuring scalability and fault tolerance – AWS Step Functions is a serverless orchestration service that can integrate with SageMaker and other AWS services. However, it is general-purpose: it can start SageMaker jobs through service integrations, but it lacks ML-specific features, such as model lineage tracking, that are built into SageMaker Pipelines.
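For comparison, a Step Functions workflow is written as a state machine in Amazon States Language. The sketch below builds a deliberately incomplete definition as a Python dictionary to show the general shape. The state names are hypothetical, and the `Parameters` blocks that a real definition needs for each SageMaker request are omitted, so this is an illustration rather than a deployable state machine.

```python
import json

# Hypothetical, incomplete state machine. Each Task state would also need a
# "Parameters" block carrying the full SageMaker request; it is omitted here.
STATE_MACHINE = {
    "Comment": "Sketch: run a training job, then a tuning job",
    "StartAt": "Train",
    "States": {
        "Train": {
            "Type": "Task",
            "Resource": "arn:aws:states:::sagemaker:createTrainingJob.sync",
            "Next": "Tune",
        },
        "Tune": {
            "Type": "Task",
            "Resource": "arn:aws:states:::sagemaker:createHyperParameterTuningJob.sync",
            "End": True,
        },
    },
}


def walk(definition):
    """Follow Next pointers from StartAt and return the visited state names."""
    names = []
    current = definition["StartAt"]
    while current is not None:
        names.append(current)
        current = definition["States"][current].get("Next")
    return names


definition_json = json.dumps(STATE_MACHINE, indent=2)
print(walk(STATE_MACHINE))
```

The definition only describes which jobs to start and in what order. Features such as lineage tracking would have to be assembled separately, which is the trade-off the explanation points to.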

Use AWS Lambda functions to manually trigger each step of the ML workflow, enabling flexible execution without needing a predefined orchestration tool – AWS Lambda is useful for triggering specific tasks, but manually managing each step of a complex ML workflow without a comprehensive orchestration tool is not scalable or maintainable. It does not provide the task dependency management, monitoring, and versioning required for an end-to-end ML workflow.

References:

https://docs.aws.amazon.com/sagemaker/latest/dg/pipelines.html

https://aws.amazon.com/managed-workflows-for-apache-airflow/
