Which technique can be used to improve model performance in terms of accuracy in the validation set?

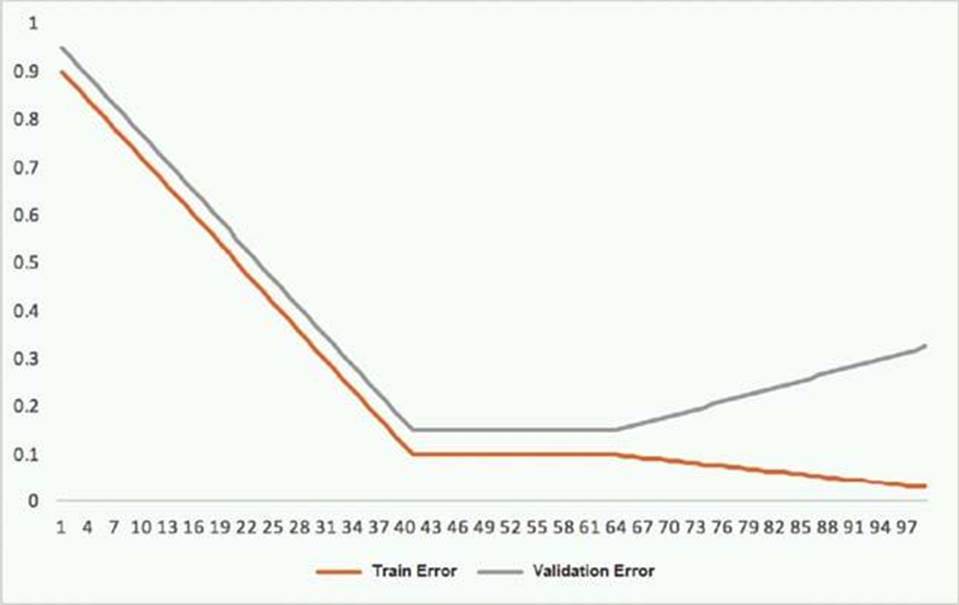

This graph shows the training and validation loss against the epochs for a neural network.

The network being trained is as follows:

• Two dense layers and one output neuron

• 100 neurons in each layer

• 100 epochs

• Random initialization of weights

Which technique can be used to improve model performance in terms of accuracy in the validation set?

A. Early stopping

B. Random initialization of weights with appropriate seed

C. Increasing the number of epochs

D. Adding another layer with 100 neurons

Answer: A

Explanation:

Early stopping prevents overfitting and so improves model performance on the validation set. Overfitting occurs when the model fits the training data so closely that it fails to generalize to new, unseen data. The graph shows this pattern: the training loss keeps decreasing, while the validation loss starts to increase after some point. From that point on, the model is fitting noise and patterns specific to the training data that do not carry over to the validation data.

Early stopping halts training before the model overfits. It monitors the validation loss and stops training when that loss stops decreasing or starts increasing, typically after a set number of epochs without improvement. Keeping the weights from the epoch with the lowest validation loss yields the model with the best performance on the validation set. Early stopping can also save time and resources by reducing the number of epochs actually run.

The other options do not address the problem: training for more epochs or adding another layer tends to worsen overfitting, and changing the random seed only changes the starting point of training.
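As a rough illustration of the monitoring logic described above, here is a minimal sketch in plain Python. The function name `early_stopping_epoch`, the `patience` and `min_delta` parameters, and the loss values are all hypothetical and chosen for illustration; they are not taken from the question or from any particular library.

```python
from typing import Sequence, Tuple


def early_stopping_epoch(
    val_losses: Sequence[float],
    patience: int = 5,
    min_delta: float = 0.0,
) -> Tuple[int, int]:
    """Return (best_epoch, stop_epoch), both 0-indexed.

    best_epoch is the epoch with the lowest validation loss seen so far;
    stop_epoch is the epoch at which training would be halted.
    """
    if not val_losses:
        raise ValueError("val_losses must not be empty")

    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0

    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            # Validation loss improved: remember this epoch and reset the counter.
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            # No improvement: stop once patience is exhausted.
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch

    # Patience was never exhausted, so training runs to the last epoch.
    return best_epoch, len(val_losses) - 1


# Made-up validation curve that falls and then rises, as in an overfitting run.
example_losses = [1.0, 0.8, 0.6, 0.5, 0.45, 0.47, 0.5, 0.55, 0.6, 0.7]
result = early_stopping_epoch(example_losses, patience=3)
print(result)  # (4, 7): best weights are from epoch 4, training halts at epoch 7
```

In practice a framework callback usually handles this; Keras, for example, provides `tf.keras.callbacks.EarlyStopping` with `monitor`, `patience`, and `restore_best_weights` arguments, where restoring the best weights corresponds to keeping the model from `best_epoch` here.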

Reference: Early Stopping

How to Stop Training Deep Neural Networks At the Right Time Using Early Stopping
